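A few days ago I published a guide to deploying an ELK 7.17 cluster with Docker (with user passwords and SSL-encrypted communication enabled; very detailed, worth bookmarking). Afterwards, readers left messages asking me to add GeoIP to the nginx logs and show the result in Grafana. Today, here it is.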

- Standardize the nginx log format

1) Add the log format

    log_format easyops_json escape=json '{"@timestamp":"$time_iso8601",'
        '"host":"$hostname",'
        '"server_ip":"$server_addr",'
        '"client_ip":"$remote_addr",'
        '"xff":"$http_x_forwarded_for",'
        '"domain":"$host",'
        '"url":"$uri",'
        '"referer":"$http_referer",'
        '"args":"$args",'
        '"upstreamtime":"$upstream_response_time",'
        '"responsetime":"$request_time",'
        '"request_method":"$request_method",'
        '"status":"$status",'
        '"size":"$body_bytes_sent",'
        '"request_body":"$request_body",'
        '"request_length":"$request_length",'
        '"protocol":"$server_protocol",'
        '"upstreamhost":"$upstream_addr",'
        '"file_dir":"$request_filename",'
        '"http_user_agent":"$http_user_agent"'
        '}';

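Field descriptions:

"@timestamp":"$time_iso8601": the time the log entry was written, in ISO 8601 format.

"host":"$hostname": the hostname of the server.

"server_ip":"$server_addr": the IP address of the server that accepted the request.

"client_ip":"$remote_addr": the IP address of the client (the peer that connected to nginx).

"xff":"$http_x_forwarded_for": the X-Forwarded-For request header, which carries the client's real IP address when the request comes through a proxy.

"domain":"$host": the requested domain name.

"url":"$uri": the request URI, without the query string.

"referer":"$http_referer": the value of the Referer request header.

"args":"$args": the query string arguments of the request.

"upstreamtime":"$upstream_response_time": the response time of the upstream server, in seconds.

"responsetime":"$request_time": the total processing time of the request, in seconds.

"request_method":"$request_method": the request method, such as GET or POST.

"status":"$status": the HTTP response status code.

"size":"$body_bytes_sent": the number of response body bytes sent to the client.

"request_body":"$request_body": the content of the request body.

"request_length":"$request_length": the length of the request in bytes (request line, headers and body).

"protocol":"$server_protocol": the protocol in use, such as HTTP/1.1.

"upstreamhost":"$upstream_addr": the address of the upstream server.

"file_dir":"$request_filename": the file path that the request maps to.

"http_user_agent":"$http_user_agent": the client's User-Agent string (browser and so on).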

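About escape=json: in an nginx log_format, escape=json applies JSON escaping to special characters in the logged variable values.

- With escape=json

Special characters in a value (double quotes ", backslashes \, control characters and so on) are escaped so that the value is a valid JSON string. This matters when the log is written as JSON and parsed by other JSON tools, because it keeps every log line well-formed.

- Without escape=json

Values do not get JSON-specific escaping. nginx falls back to its default escaping, which writes such characters as \xXX sequences (a double quote becomes \x22), and that is not a valid JSON escape. A line whose values contain these characters can therefore fail to parse as JSON.

For example, if a value contains the string This is a "quoted" string, the line may not parse as JSON without escape=json, whereas with escape=json the value is written as "This is a \"quoted\" string", which conforms to the JSON specification.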
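To make the difference concrete, here is a minimal Python sketch of the same idea. It is not how nginx implements escaping; it only compares a line built by pasting the raw value between quotes with a line whose value was JSON-escaped first. The field name referer is taken from the format above, the helper name parses is my own.

```python
import json

value = 'This is a "quoted" string'

# Raw value pasted between quotes: the inner quotes end the JSON string early.
naive_line = '{"referer":"' + value + '"}'

# Value escaped as a JSON string first, comparable to the effect of escape=json.
escaped_line = '{"referer":' + json.dumps(value) + '}'


def parses(line):
    """Return True when the line is valid JSON."""
    try:
        json.loads(line)
        return True
    except json.JSONDecodeError:
        return False


print(parses(naive_line))
print(parses(escaped_line))
print(escaped_line)
```

Only the escaped line survives a round trip through a JSON parser, which is what filebeat and logstash rely on further down.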

2) Reference the new log format in the virtual host

    server {
        listen 80;
        server_name example1.com;

        root /var/www/example1;
        index index.html;

        # Access log for example1.com
        access_log /var/log/nginx/example1_access.log easyops_json;
        error_log /var/log/nginx/example1_error.log;

        location / {
            try_files $uri $uri/ =404;
        }
    }
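Once the virtual host writes its access log in this format, every line should be one JSON object in which all values are quoted strings, so numeric fields have to be converted by whatever consumes the log. The sketch below checks that on a sample line. The sample values are made up for illustration, and treating an empty upstreamtime as "no upstream was involved" is my assumption.

```python
import json

# Hypothetical sample line in the easyops_json format (all values are made up).
sample = (
    '{"@timestamp":"2024-06-01T10:00:00+08:00","host":"web01",'
    '"server_ip":"192.168.31.79","client_ip":"203.0.113.10","xff":"",'
    '"domain":"example1.com","url":"/index.html","referer":"","args":"",'
    '"upstreamtime":"","responsetime":"0.003","request_method":"GET",'
    '"status":"200","size":"612","request_body":"","request_length":"78",'
    '"protocol":"HTTP/1.1","upstreamhost":"",'
    '"file_dir":"/var/www/example1/index.html","http_user_agent":"curl/8.0"}'
)


def to_float(text):
    """Return a float, or None when the value is empty."""
    return float(text) if text else None


event = json.loads(sample)
event["status"] = int(event["status"])
event["size"] = int(event["size"])
event["responsetime"] = to_float(event["responsetime"])
event["upstreamtime"] = to_float(event["upstreamtime"])

print(event["status"], event["size"], event["responsetime"], event["upstreamtime"])
```

Running something like this against a few real lines from example1_access.log is a quick way to catch a broken format before shipping logs anywhere.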

- Configure filebeat

    $ cat /etc/filebeat/filebeat.yml | egrep -v "#|^$"
    name: "192.168.31.79"
    tags: ["192.168.31.79","nginx"]

    filebeat.inputs:
    - type: log
      id: centos79
      enabled: true
      paths:
        - /data/nginx/logs/*_access.log
      fields:
        env: test
        nginx_log_type: access
        log_topic: nginx-logs
      # Put these fields at the root of the document instead of nesting them under a dedicated field (such as "fields")
      fields_under_root: true
      json.keys_under_root: true
      json.overwrite_keys: true
      json.add_error_key: true

    - type: log
      id: centos79
      enabled: true
      paths:
        - /data/nginx/logs/*_error.log
      fields:
        env: test
        nginx_log_type: error
        log_topic: nginx-logs
      # Put these fields at the root of the document instead of nesting them under a dedicated field (such as "fields")
      fields_under_root: true
      # Note: the nginx error log is plain text, so these lines fail JSON decoding and get an error key
      json.keys_under_root: true
      json.overwrite_keys: true
      json.add_error_key: true

      # How long to wait without new log lines before closing the file handle. Default is 5 minutes; 1 minute closes handles sooner
      close_inactive: 1m
      # Force-close the file handle after 3h even if the file has not been fully shipped; this is the key setting against handles that stay open indefinitely
      close_timeout: 3h
      # Set this as well. The default 0 means never clean up: the state of harvested files stays in the registry forever, so the registry keeps growing and may cause problems
      clean_inactive: 72h
      # Once clean_inactive is set, ignore_older must be set too, with ignore_older < clean_inactive
      ignore_older: 70h

    # Limit CPU and memory usage
    max_procs: 1                       # use a single CPU core so filebeat does not compete with the business workload
    queue.mem.events: 512              # number of events held in the in-memory queue before sending (default 4096)
    queue.mem.flush.min_events: 512    # should not exceed queue.mem.events; raising it improves throughput (default 2048)

    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false

    setup.template.settings:
      index.number_of_shards: 1

    output.kafka:
      hosts: ["192.168.31.168:9092", "192.168.31.171:9092", "192.168.31.172:9092"]
      # The custom fields sit at the root of the document (see above), so use the field name directly, without a "fields." prefix
      topic: '%{[log_topic]}'
      partition.round_robin:
        reachable_only: false
      required_acks: 1
      compression: gzip
      max_message_bytes: 1000000

    logging.level: info
    logging.to_files: true
    logging.files:
      path: /var/log/filebeat
      name: filebeat.log
      keepfiles: 7
      permissions: 0644
      rotateeverybytes: 104857600

    processors:
      - add_host_metadata:
          when.not.contains.tags: forwarded
      - add_cloud_metadata: ~
      - add_docker_metadata: ~
      - add_kubernetes_metadata: ~
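The ignore_older < clean_inactive rule from the comments is easy to get wrong when the values are tuned later, so here is a small sanity-check sketch. The function names are my own, it only understands simple durations such as 70h or 1m, and the extra scan_frequency margin (10s is the log input default) follows my reading of the Filebeat documentation, so treat it as an assumption.

```python
import re

UNITS = {"s": 1, "m": 60, "h": 3600}


def to_seconds(text):
    """Convert a simple duration such as '70h' or '1m' to seconds."""
    match = re.fullmatch(r"(\d+)([smh])", text)
    if match is None:
        raise ValueError(f"unsupported duration: {text!r}")
    return int(match.group(1)) * UNITS[match.group(2)]


def retention_is_consistent(ignore_older, clean_inactive, scan_frequency="10s"):
    """Check that clean_inactive is larger than ignore_older plus scan_frequency."""
    return to_seconds(clean_inactive) > to_seconds(ignore_older) + to_seconds(scan_frequency)


print(retention_is_consistent("70h", "72h"))
```

With the values used in this article (70h and 72h) the check passes; swapping them makes it fail.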

- Configure logstash

1) To locate the source IP we need the free offline IP database GeoLite2-City.mmdb. Download page: https://github.com/wp-statistics/GeoLite2-City

    $ cd /data/logstash/config/
    $ wget https://cdn.jsdelivr.net/npm/geolite2-city/GeoLite2-City.mmdb.gz
    $ yum install gzip -y
    $ gzip -d GeoLite2-City.mmdb.gz

2) Mount the offline database into logstash

    $ cat docker-compose.yaml
    version: "3"
    services:
      logstash:
        image: docker.elastic.co/logstash/logstash:7.17.22
        container_name: logstash
        # ports:
        #   - 9600:9600
        #   - 5044:5044
        networks:
          - net-logstash
        volumes:
          - $PWD/config/pipelines.yml:/usr/share/logstash/config/pipelines.yml
          - $PWD/config/logstash.yml:/usr/share/logstash/config/logstash.yml
          - $PWD/logs:/usr/share/logstash/logs
          - $PWD/data:/usr/share/logstash/data
          - $PWD/config/ca.crt:/usr/share/logstash/config/ca.crt
          - $PWD/pipelines/pipeline_from_kafka:/usr/share/logstash/pipeline_from_kafka
          - $PWD/config/GeoLite2-City.mmdb:/usr/share/logstash/GeoLite2-City.mmdb
        environment:
          - TZ=Asia/Shanghai
          - LS_JAVA_OPTS=-Xmx1g -Xms1g
    networks:
      net-logstash:
        external: false

    $ docker compose down
    $ docker compose up -d

3) Configure the pipeline

    input {
      kafka {
        #type => "logs-easyops-kafka"
        # Kafka cluster addresses
        bootstrap_servers => '192.168.31.168:9092,192.168.31.171:9092,192.168.31.172:9092'
        # Consumer group
        group_id => 'logstash-dev'
        # Clients consuming at the same time need different client_id values; the number of clients in one group should be ≤ the number of Kafka partitions
        client_id => 'logstash-168'
        # Number of consumer threads
        consumer_threads => 5
        # Match topics by regular expression
        #topics_pattern => "elk_.*"
        # Explicit list of topics
        topics => [ "nexus","system-logs", "nginx-logs"]
        # Defaults to false; Kafka metadata is only added when this is true
        decorate_events => true
        # Start consuming from the earliest offset
        auto_offset_reset => 'earliest'
        #auto_offset_reset => "latest"
        # Offset commit interval
        auto_commit_interval_ms => 1000
        enable_auto_commit => true
        codec => json { charset => "UTF-8" }
      }
    }

    filter {
      if [@metadata][kafka][topic] == "nginx-logs" {
        # nginx logs
        if [xff] != "" {
          geoip {
            target => "geoip"
            source => "xff"
            database => "/usr/share/logstash/GeoLite2-City.mmdb"
            add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
            add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
            # Drop the geoip fields we do not need
            remove_field => ["[geoip][latitude]", "[geoip][longitude]", "[geoip][country_code]", "[geoip][country_code2]", "[geoip][country_code3]", "[geoip][timezone]", "[geoip][continent_code]", "[geoip][region_code]"]
          }
        } else {
          geoip {
            target => "geoip"
            source => "client_ip"
            database => "/usr/share/logstash/GeoLite2-City.mmdb"
            add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
            add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
            # Drop the geoip fields we do not need
            remove_field => ["[geoip][latitude]", "[geoip][longitude]", "[geoip][country_code]", "[geoip][country_code2]", "[geoip][country_code3]", "[geoip][timezone]", "[geoip][continent_code]", "[geoip][region_code]"]
          }
        }

        mutate {
          convert => [ "size", "integer" ]
          convert => [ "status", "integer" ]
          convert => [ "responsetime", "float" ]
          convert => [ "upstreamtime", "float" ]
          convert => [ "[geoip][coordinates]", "float" ]
          # Remove filebeat fields we do not need. Think about which fields the ES output still relies on: once a field is removed it can no longer be used in conditions
          # remove_field => [ "ecs","agent","host","cloud","@version","input","logs_type" ]
          remove_field => [ "ecs","agent","cloud","@version","input" ]
        }
        # Derive the client operating system and version from http_user_agent
        useragent {
          source => "http_user_agent"
          target => "ua"
          # Remove useragent fields we do not need
          remove_field => [ "[ua][minor]","[ua][major]","[ua][build]","[ua][patch]","[ua][os_minor]","[ua][os_major]" ]
        }
      }
    }

    output {
      if [@metadata][kafka][topic] == "nginx-logs" {
        elasticsearch {
          hosts => ["https://192.168.31.168:9200","https://192.168.31.171:9200","https://192.168.31.172:9200"]
          ilm_enabled => false
          user => "elastic"
          password => "123456"
          cacert => "/usr/share/logstash/config/ca.crt"
          ssl => true
          ssl_certificate_verification => false
          index => "logstash-nginx-logs-%{+YYYY.MM.dd}"
        }
      } else {
        stdout { }
      }
    }
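The filter logic boils down to two decisions: which field to geolocate, and how the coordinates array is built. The Python sketch below mirrors both so they are easier to reason about; it is an illustration, not part of the pipeline. One thing the pipeline does not handle is an X-Forwarded-For header that carries several comma-separated addresses. Taking the first entry, as done here, is my assumption about how the proxies in front of nginx fill the header, and the function names are my own.

```python
def pick_source_ip(event):
    """Prefer the first X-Forwarded-For entry, else fall back to client_ip."""
    xff = event.get("xff", "")
    if xff != "":
        # Assumption: the left-most entry is the original client.
        return xff.split(",")[0].strip()
    return event["client_ip"]


def coordinates(longitude, latitude):
    """Build the [longitude, latitude] pair the two add_field lines produce."""
    return [float(longitude), float(latitude)]


direct = {"xff": "", "client_ip": "203.0.113.10"}
proxied = {"xff": "198.51.100.7, 10.0.0.1", "client_ip": "10.0.0.2"}

print(pick_source_ip(direct))
print(pick_source_ip(proxied))
print(coordinates("116.39", "39.9"))
```

Note the order of the pair: longitude first, then latitude, matching the order of the two add_field lines.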

- Query results in ES

Note: only public IP addresses resolve to a location.
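If you are testing from inside a LAN and wonder why the geoip fields stay empty, a quick check with Python's standard ipaddress module shows whether an address is publicly routable at all. This is only a rough pre-check (the function name is my own); whether a public address is actually present in the GeoLite2 database is a separate question.

```python
import ipaddress


def can_be_geolocated(ip_text):
    """Return True when the address is globally routable (not private, loopback, etc.)."""
    return ipaddress.ip_address(ip_text).is_global


for ip in ("192.168.31.79", "127.0.0.1", "8.8.8.8"):
    print(ip, can_be_geolocated(ip))
```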

- Deploy grafana

1) Write docker-compose.yaml

    version: "3"
    services:
      grafana:
        image: grafana/grafana-oss:9.5.18
        container_name: "grafana"
        hostname: grafana
        restart: unless-stopped
        ports:
          - "3000:3000"
        volumes:
          - $PWD/grafana/conf:/etc/grafana       # configuration directory
          - $PWD/grafana/data:/var/lib/grafana   # data directory
        environment:
          # URL used to reach the server
          - GF_SERVER_ROOT_URL=http://grafana.easyops.local
        networks:
          - grafana

    networks:
      grafana:
        driver: "bridge"
        driver_opts:
          com.docker.network.enable_ipv6: "false"

2) Start it

    $ docker compose up -d

3) Open it in a browser to test. The default username and password are admin/admin, and the password has to be changed on first login.

- Add the elasticsearch data source

See the official documentation:

https://grafana.com/docs/grafana/latest/datasources/elasticsearch/

https://grafana.com/docs/grafana/v9.4/datasources/elasticsearch/

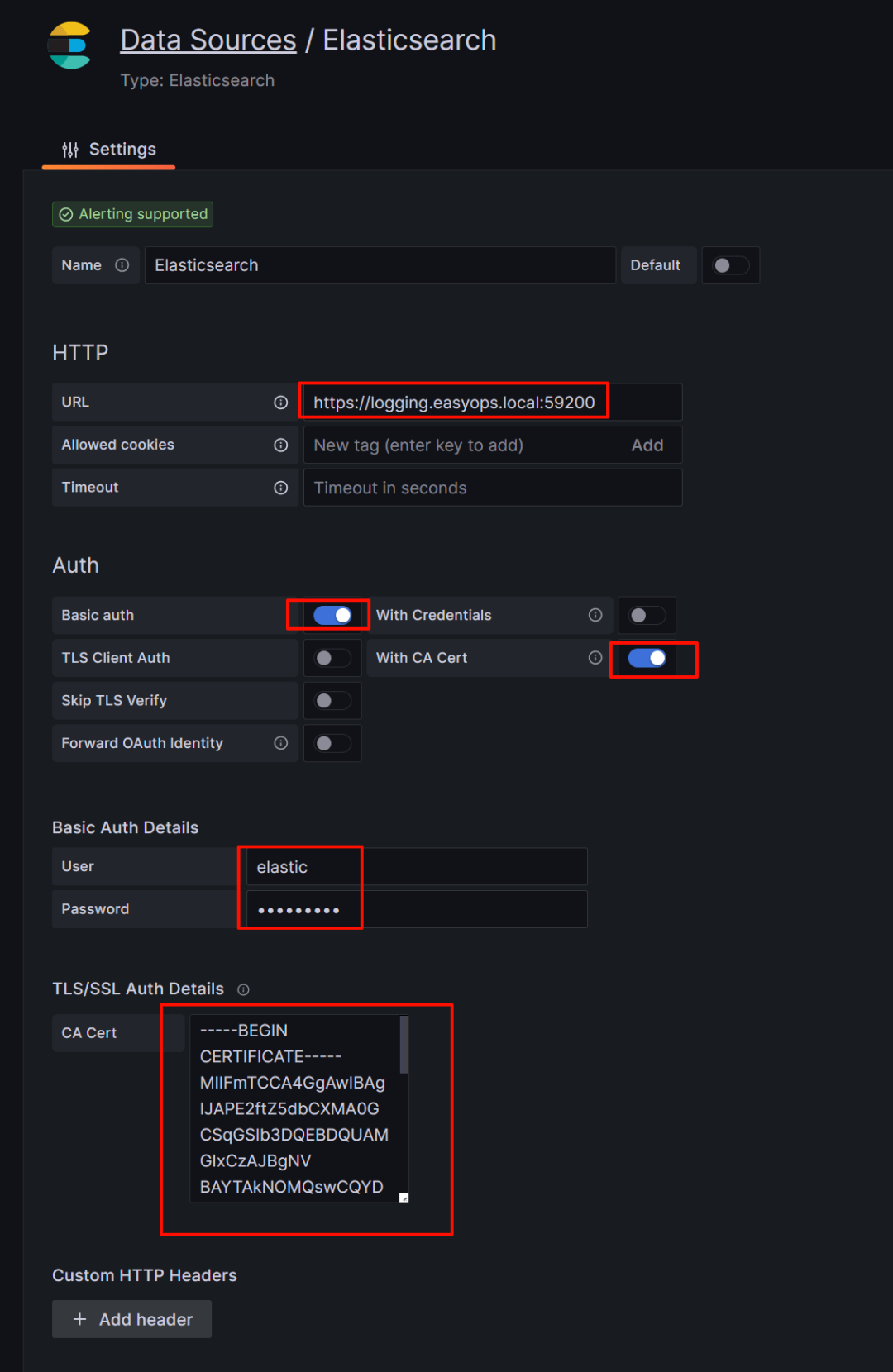

Enter the proxy address and port of ES: https://logging.easyops.local:59200

Enable Basic auth and CA certificate verification. The CA certificate can be found in the ES deployment directory:

    # cat /data/elasticsearch/config/certs/http/ca.crt
    -----BEGIN CERTIFICATE-----
    MIIFmTCCA4GgAwIBAgIJAPE2ftZ5dbCXMA0GCSqGSIb3DQEBDQUAMGIxCzAJBgNVBAYTAkNOMQswCQYDVQQIDAJKUzELMAkGA1UEBwwCTkoxEDAOBgNVBAoMB2Vhc3lvcHMxDzANBgNVBAsMBmRldm9wczEWMBQGA1UEAwwNZWFzeW9wcy5sb2NhbDAgFw0yNDA1MjkwOTMwMjFaGA8yMTI0MDUwNTA5MzAyMVowYjELMAkGA1UEBhMCQ04xCzAJBgNVBAgMAkpTMQswCQYDVQQHDAJOSjEQMA4GA1UECgwHZWFzeW9wczEPMA0GA1UECwwGZGV2b3BzMRYwFAYDVQQDDA1lYXN5b3BzLmxvY2FsMIICIjANBgkqhkiG9w0BAQEFAAOCAg8AMIICCgKCAgEA3CNTgWVSluAsCycGHYPX+F5g3RS8dFdZdU7tP0xBtmt00lQxVEgTGOO+n3SZHPN2v+9CCYsbfzeYkoNqd3z25YRfVrsVyuxiV0mBWGKuXV3fDjCfTA+9lZYvTByO1MKXieXrPD19h+VnFW9Llj3/3+XO8L9B1SiozHtel6ygYbAqcmwfV3JWjZg8DbDLU68vJnwFXZRg8gLbI2icTEr3FtOGEUIdH/PNYcNrCc+RX1WVelJJsErEwZR2N4aR8bEOkhqwOZhC2uDphh8W3JefHji/Y98juAyrMjNb15i54P/Pk6lbgFFT+X6WZnHSLmvVVxrLJFLi/tAhy/4gonU1gHG+Yoo6FV/+jVwSYtIC4xcYvocF1y2ON9JOcpJTzXQWQggQ4ItOJGKJgSeCFp/WC+sn6vVdL+L/C/+zkdPs9ojrOVYsoMKqOFwHtWPW+EdOoXH5reLJwQj57v1UnQEkn0K+9/hB3rQ/iLjTGnAQ7cCQntGfLZsZl0T0rznIlYORrBRmflUrG3RsyE6ZB9KegDZ/UYmvb72/hsaJTExAP5S/T8pjVikC1iKE5n5nZnPesmOZ9KiqREcDfKo6fafey+XStrP//WlNgRYWyKIMOtv5zw8iNcxBQJTttOpRvDpbyQfyfxN7jiPguLdFs/sB05es+xd/E/vV5chYAE32958CAwEAAaNQME4wHQYDVR0OBBYEFLOCbFM0ujixHEXDnp4S3h5TnourMB8GA1UdIwQYMBaAFLOCbFM0ujixHEXDnp4S3h5TnourMAwGA1UdEwQFMAMBAf8wDQYJKoZIhvcNAQENBQADggIBAGF9/DUeU7MqlSS2iKnKMVJwSRKsJRe2jIW5IYYuxpvuyzRASMF0b3a6AP5hxM7lmlu7xvgxM7k0Cz59ujDySA6YIpqWFq1FvPeNnFQIcrfDxg4T7sjgvuYA6eE5xSobnQGx3g1Urlk5d2a1WF3lEMmqstbn0kVdmUaaK0EsCUjWY+oEKo5EEaBpJpsJS7h34g5nV8aJTlXlCRd6SyIdoDikA26m41grpGWG0syruoc5eZIOiXwIMygn9WQ2zmNFFFOYuvvp0DAmhqIkOMxT5x2KdR80cMzO16cbzin03RiaX2NF05CbB47jy8pJpU474Vn9E2VtN6txJtRx6OA5ynwMVzdaZvduc6G0+/h0j/FBwQB40i5E+zzoXWXwjNfRSjPeXcCmqQdvw15yFOwZiaHchznjG2HRKy3fnH3FEg5pG4cC2gHzoHbRZY6Ts2ICZtzZOH+aa+5SaFk8eVFCf453ys1yQmoT6Mf0uP792DjwgGIOYbLdumzhnO9EscCsUx25JCa+RazzjKsGVG80rWXyHoZ1n96P+Bqr0T7kaBfC1U7EhNgMmNLu3i3QzVv6K9pUvKdhFX+QiDeds8+d6MC9uZ3WLWTwfUUUoy1tTgm725qUF0gW3Lygnkv7Siuk4uWaRO6z+cPfIPaFrw3ZNICzeddmm5vQlMJ7YFoG4d9D
    -----END CERTIFICATE-----

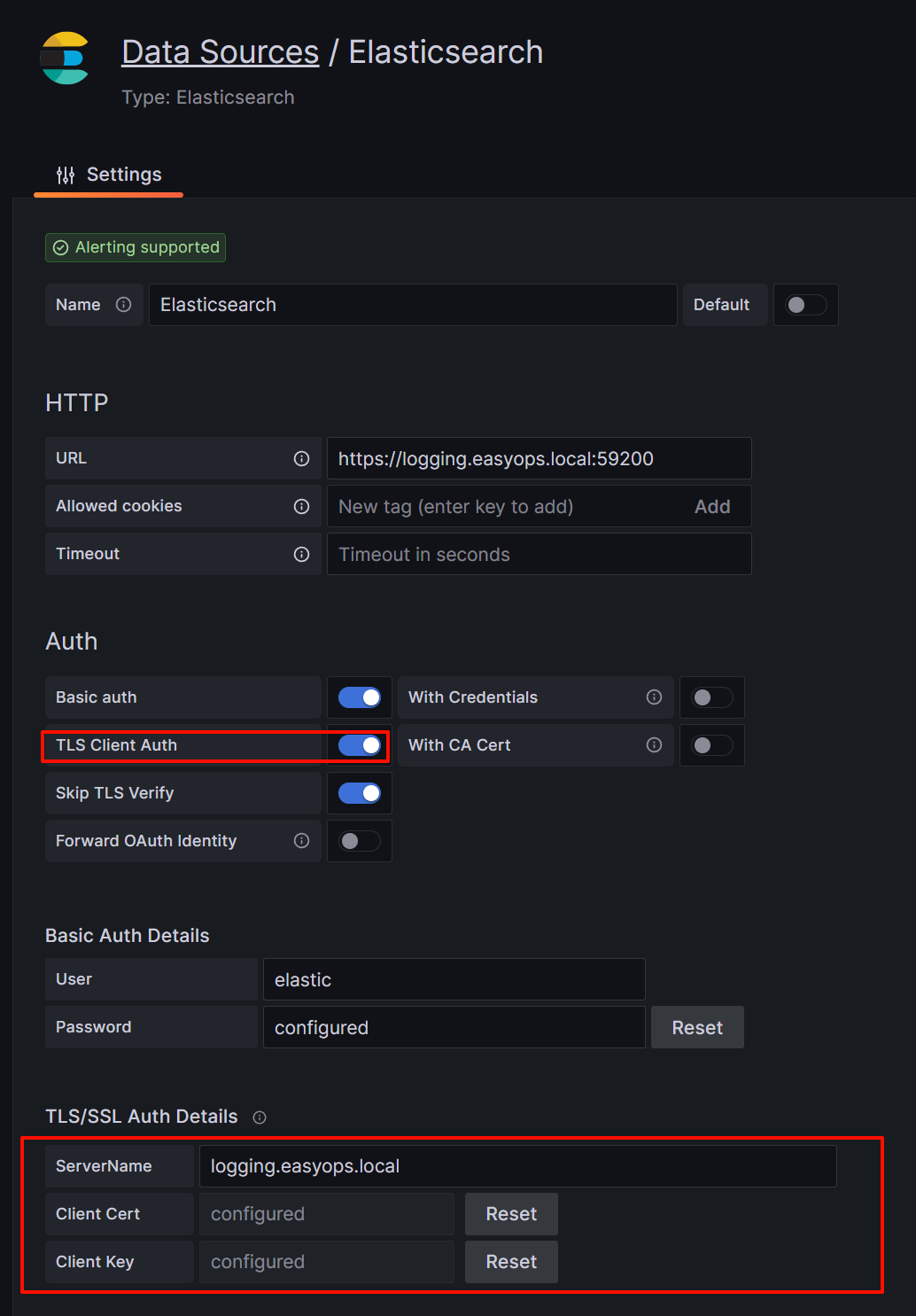

You can also enable TLS Client Auth and then add the crt and key:

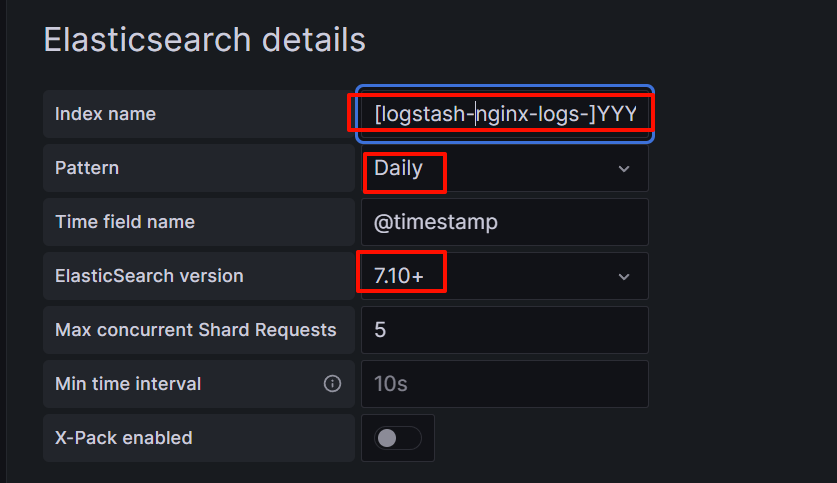

Enter the index name and the time field, and select version 7.10+:

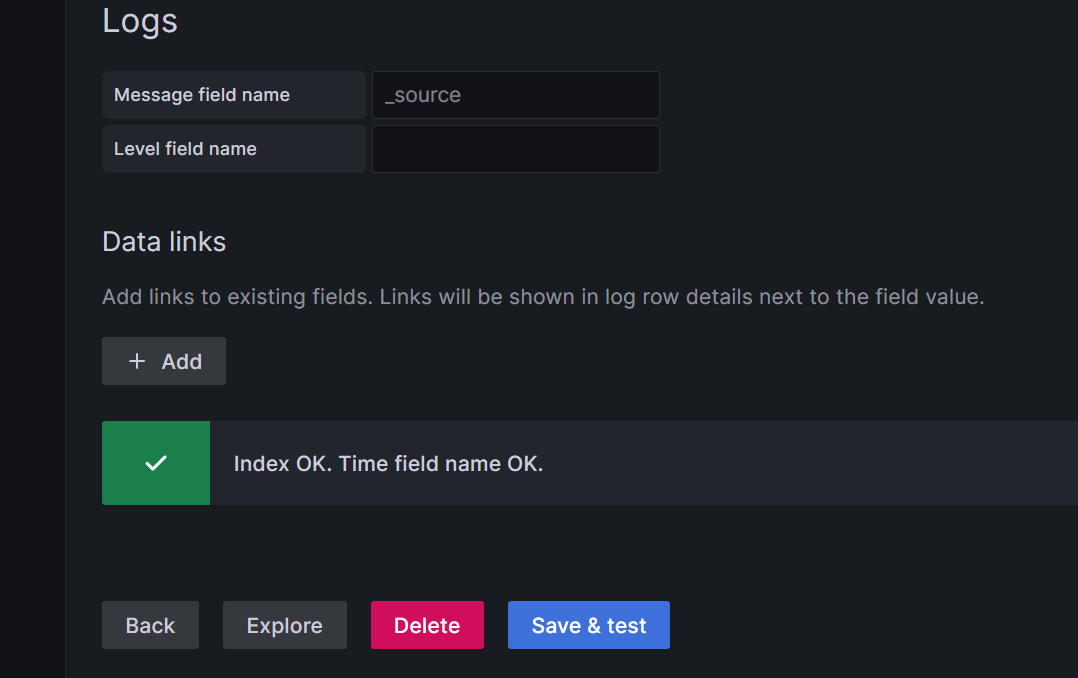

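When everything is filled in, click Save & test:

If the connection fails, check whether cross-origin access (CORS) is enabled in the ES configuration:

    # Allow cross-origin requests
    http.cors.enabled: true
    http.cors.allow-origin: "*"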
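When Save & test fails, it also helps to rule out the basics (address, credentials, CA certificate) outside Grafana. Below is a minimal sketch using only the Python standard library. The URL, the elastic user and its password are the values used earlier in this article; the function names are my own, and /_cluster/health is just a convenient endpoint to hit. The actual network call is left commented out so the snippet can be read and run without a cluster.

```python
import base64
import ssl
import urllib.request


def build_health_request(base_url, user, password):
    """Build a GET request for the cluster health endpoint with Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(base_url.rstrip("/") + "/_cluster/health")
    request.add_header("Authorization", "Basic " + token)
    return request


def fetch_health(request, ca_file):
    """Send the request, verifying the server certificate against ca_file."""
    context = ssl.create_default_context(cafile=ca_file)
    with urllib.request.urlopen(request, context=context, timeout=5) as response:
        return response.status, response.read().decode()


request = build_health_request("https://logging.easyops.local:59200", "elastic", "123456")
print(request.full_url)

# Uncomment on a host that can reach ES and has the CA file:
# print(fetch_health(request, "/data/elasticsearch/config/certs/http/ca.crt"))
```

If this call works from the Grafana host but the data source test still fails, the problem is more likely in the data source settings than in ES itself.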

If you prefer to add the data source through a configuration file, you can do it along these lines:

    apiVersion: 1

    datasources:
      - name: elasticsearch-v7-filebeat
        type: elasticsearch
        access: proxy
        url: http://localhost:9200
        jsonData:
          index: '[filebeat-]YYYY.MM.DD'
          interval: Daily
          timeField: '@timestamp'
          logMessageField: message
          logLevelField: fields.level
          dataLinks:
            - datasourceUid: my_jaeger_uid # Target UID needs to be known
              field: traceID
              url: '$${__value.raw}' # Careful about the double "$$" because of env var expansion

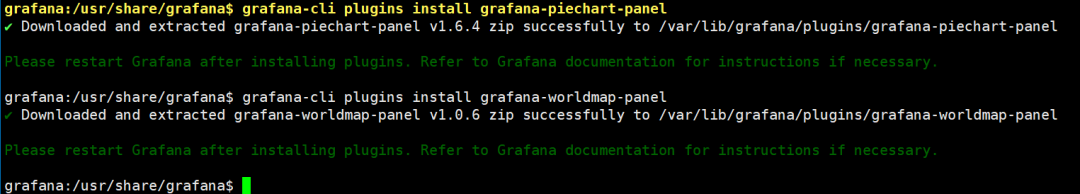

- Enter the grafana container and install two plugins that are needed to render the charts

    $ grafana-cli plugins install grafana-piechart-panel
    $ grafana-cli plugins install grafana-worldmap-panel

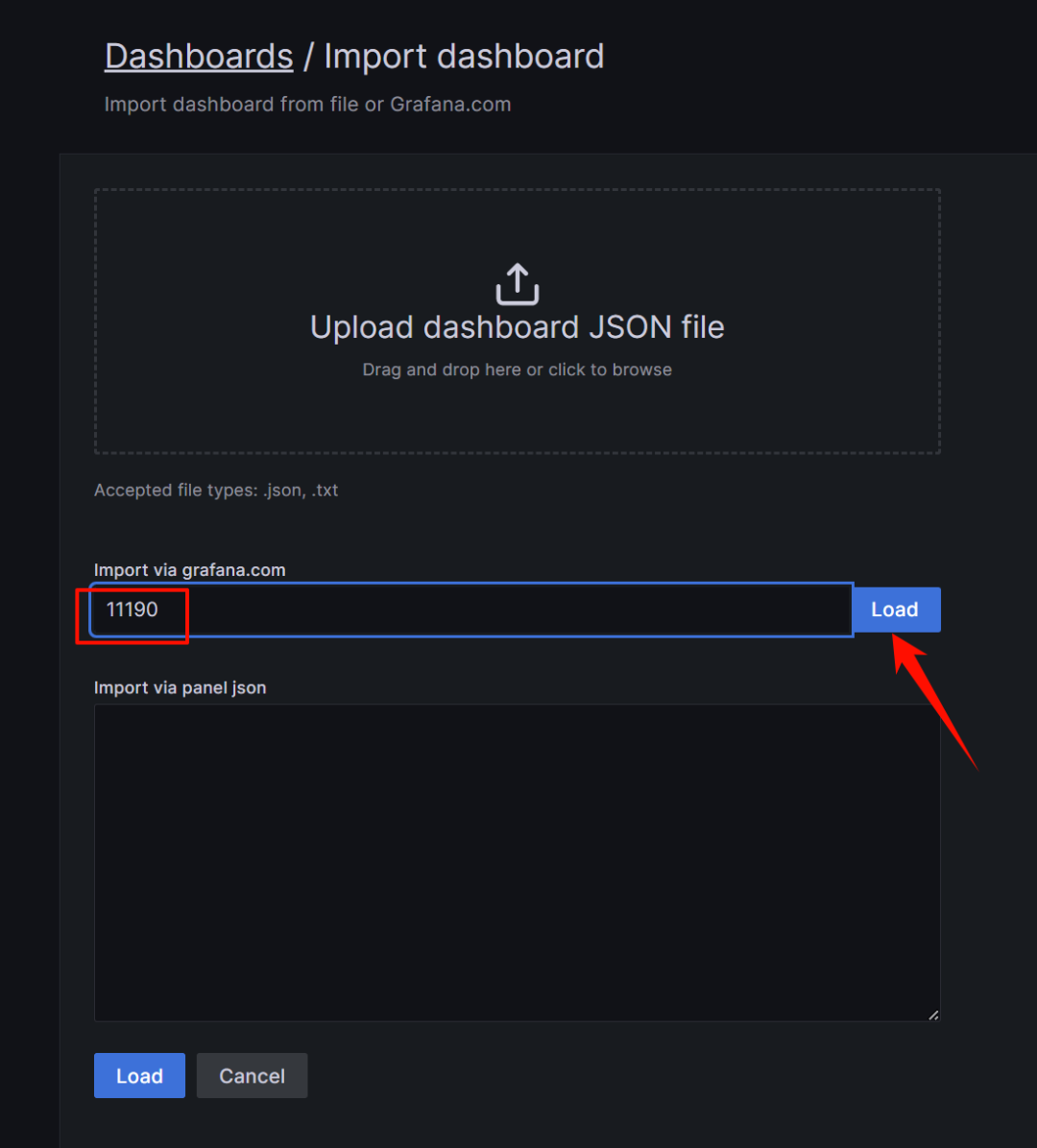

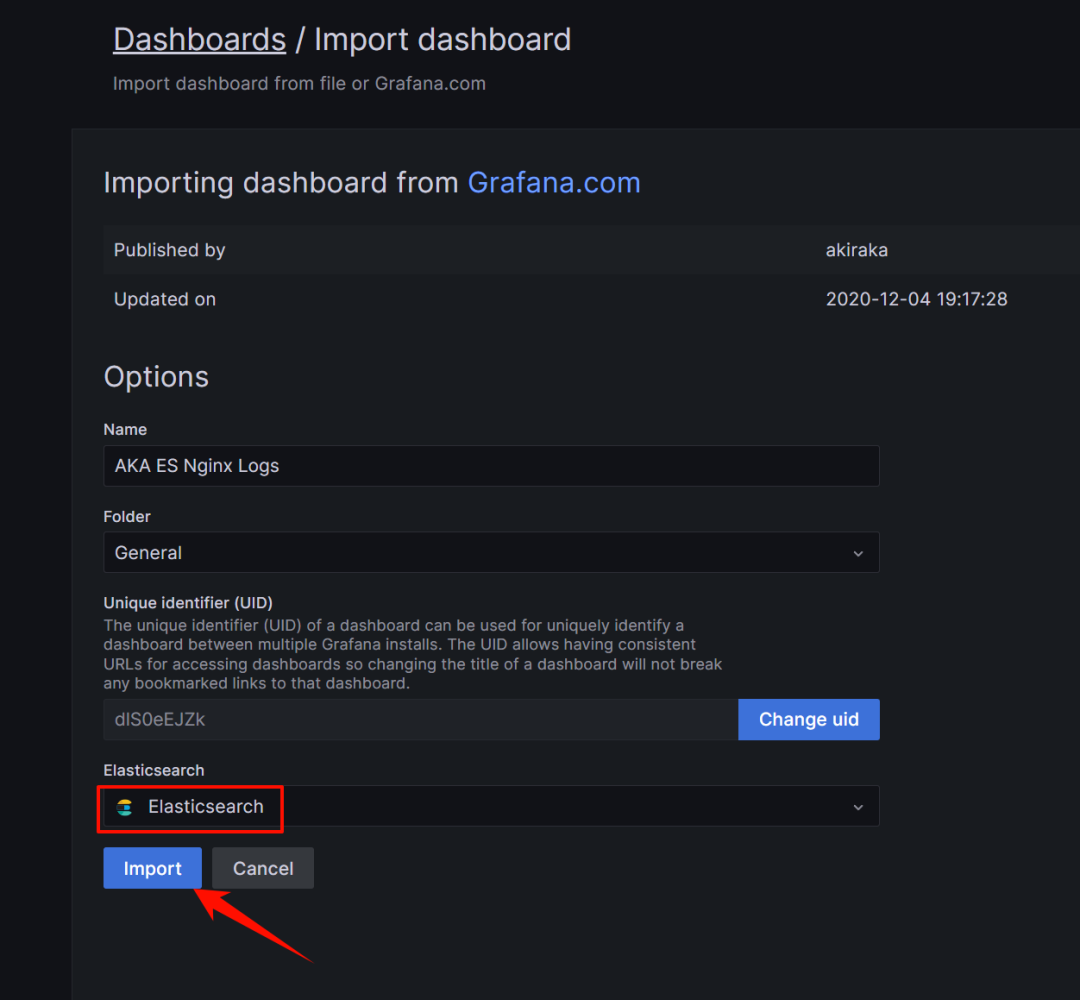

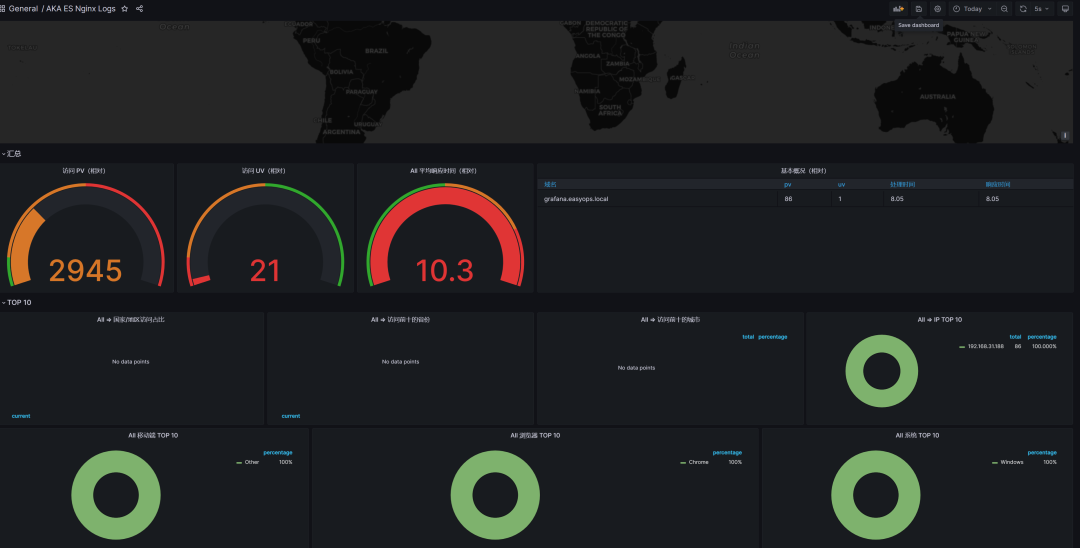

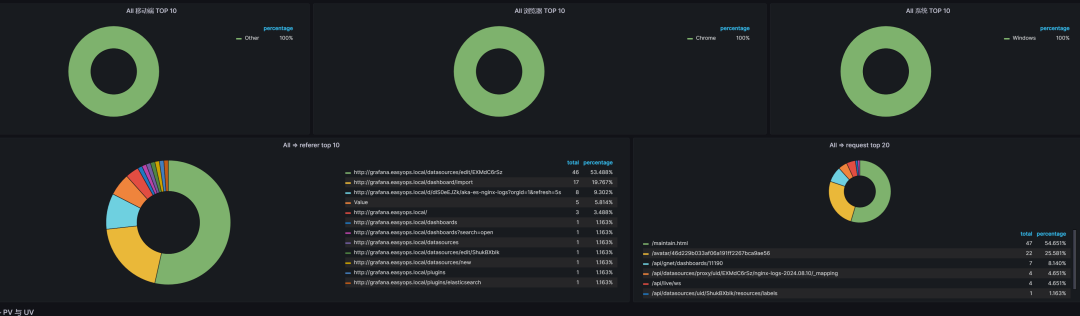

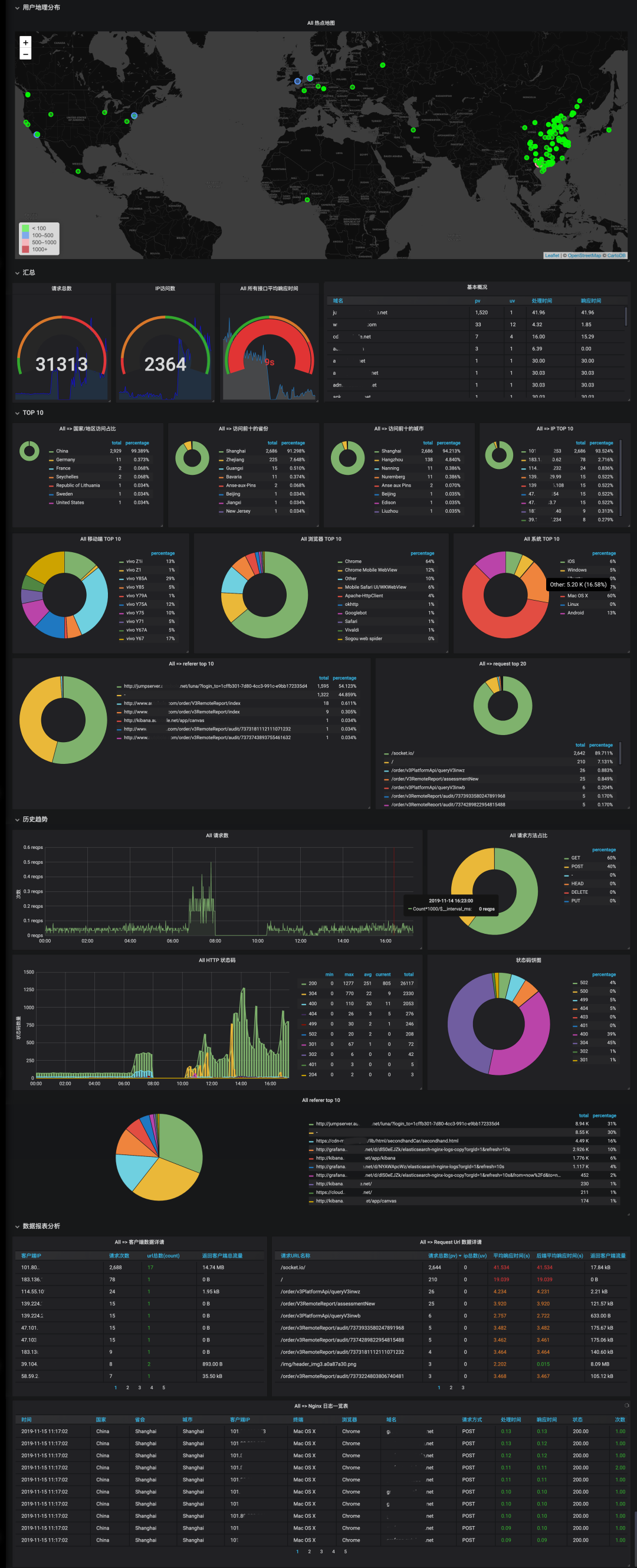

- Import dashboard template ID: 11190

https://grafana.com/grafana/dashboards/11190

Official preview. After importing it yourself you will probably still need to adjust it a little.

That's all for today. I hope it helps.

51工具盒子
