Native Kubernetes (k8s) installation on 3 nodes

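# List all nodes in the cluster

kubectl get nodes

# Create resources in the cluster from a configuration file

kubectl apply -f xxxx.yaml

# See which applications are deployed in the cluster

docker ps (Docker) corresponds to kubectl get pods -A (Kubernetes)

A running application is called a container in Docker and a Pod in Kubernetes (a Pod wraps one or more containers).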

kubectl get pods -A

Prepare the environment

master: 10.0.0.11

node1: 10.0.0.12

node2: 10.0.0.13

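Part 0: Base environment (all nodes)

# Give each machine its own hostname, e.g. master, node1, node2

hostnamectl set-hostname xxxx

# Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config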

# Disable swap now, and permanently by commenting out the swap entries in /etc/fstab

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
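The `sed` line above comments out every line that mentions swap, so running it a second time adds another `#`. A minimal idempotent sketch, assuming the usual fstab layout where the filesystem-type field is the word `swap`; `comment_out_swap` is a hypothetical name, and it takes the file path as an argument so you can try it on a copy first:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Only lines that are not already commented and contain "swap" as a
# whitespace-separated word are changed, so running it again is harmless.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -ri '/^[^#]*[[:space:]]swap([[:space:]]|$)/ s/^/#/' "$fstab"
}

# Usage (try it on a copy first):
#   cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test && cat /tmp/fstab.test
```

Afterwards, `swapon --show` printing nothing means no swap is active.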

# Let iptables see bridged traffic: load br_netfilter now and at every boot, then set the sysctls

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sudo sysctl --system
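kubeadm's preflight check fails later if these two values are not 1 (see the error in the join step). A small sketch to confirm the settings took effect before moving on; `check_bridge_sysctls` is a hypothetical helper, and its optional directory argument (default `/proc/sys`) exists only so it can be tried against a fake tree:

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify the bridge sysctls that kubeadm's preflight checks.
# ROOT defaults to /proc/sys; it is a parameter only for testing.
check_bridge_sysctls() {
  local root="${1:-/proc/sys}" key path rc=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    # sysctl name -> path: net.bridge.x becomes net/bridge/x
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      echo "MISSING $key (is the br_netfilter module loaded?)"
      rc=1
    elif [ "$(cat "$path")" != "1" ]; then
      echo "WRONG   $key = $(cat "$path") (expected 1)"
      rc=1
    else
      echo "OK      $key = 1"
    fi
  done
  return "$rc"
}

# Usage on each node:
#   check_bridge_sysctls
```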

Part 1: Install Docker on all 3 nodes

yum install -y yum-utils

yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6

systemctl enable docker --now

docker info

# Configure a registry mirror (faster image pulls) and the systemd cgroup driver

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
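kubeadm's preflight prints a warning when Docker uses the `cgroupfs` driver and recommends `systemd`, which is why `exec-opts` is set above. A quick text check of the file, as a sketch only: `daemon_json_cgroup_driver` is a hypothetical name, and it greps the file rather than parsing JSON. The `docker info --format` line in the comment shows what the running daemon actually uses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the cgroup driver requested in a daemon.json file.
# It only looks for "native.cgroupdriver=<name>" (normally inside exec-opts);
# it is a quick text check, not a JSON parser.
daemon_json_cgroup_driver() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -o 'native\.cgroupdriver=[A-Za-z]*' "$file" | head -n 1 | cut -d= -f2
}

# On a running host, Docker itself reports the driver it actually uses:
#   docker info --format '{{.CgroupDriver}}'    # expected: systemd
```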

Part 2: Install kubelet, kubeadm and kubectl (on all 3 nodes)

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

# kubelet restarts every few seconds until kubeadm init/join gives it a configuration; this is expected

sudo systemctl enable --now kubelet

Part 3: Bootstrap the cluster with kubeadm

1. Pull the images each machine needs

I ran this on every node, from the /app directory.

sudo tee ./images.sh <<-'EOF'
#!/bin/bash
images=(
  kube-apiserver:v1.20.9
  kube-proxy:v1.20.9
  kube-controller-manager:v1.20.9
  kube-scheduler:v1.20.9
  coredns:1.7.0
  etcd:3.4.13-0
  pause:3.2
)
for imageName in "${images[@]}" ; do
  docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
done
EOF

chmod +x ./images.sh && ./images.sh

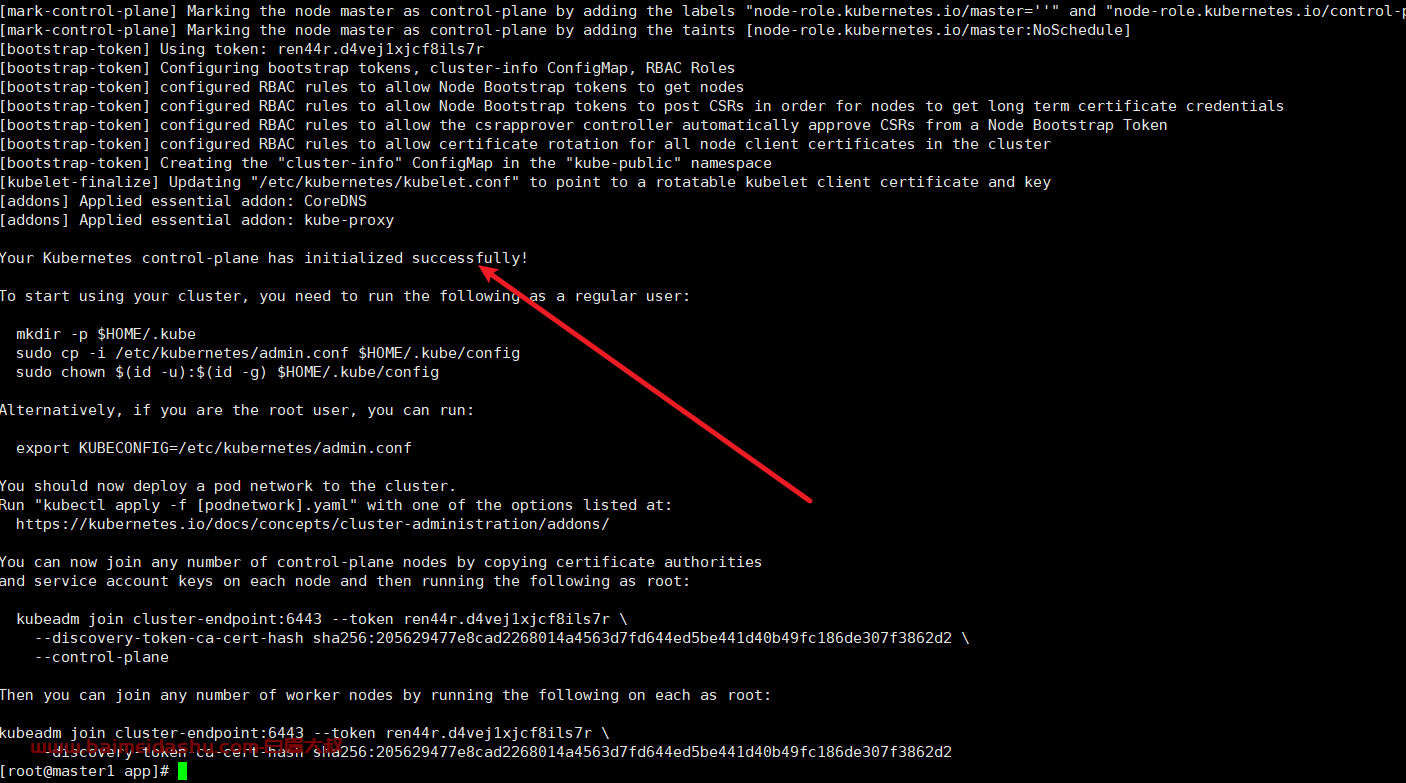

2. Initialize the master node

# Map the master's IP to the name cluster-endpoint (replace 10.0.0.11 with your own master's IP)

echo "10.0.0.11 cluster-endpoint" >> /etc/hosts

Run the command above on every node, not just the master.
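Appending with `>>` adds a duplicate line every time the step is repeated. A sketch of an idempotent variant, assuming a standard hosts-file layout; `add_hosts_entry` is a hypothetical helper that only appends when the name is not yet mapped on a non-comment line (it does not check whether an existing mapping points at a different IP):

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not
# already mapped on a non-comment line, so re-running the setup does not
# pile up duplicate entries.
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    echo "$name is already mapped in $file; leaving it unchanged"
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on every node (as root), with your own master IP:
#   add_hosts_entry 10.0.0.11 cluster-endpoint
```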

# Initialize the control plane (run on the master node only)

kubeadm init \
  --apiserver-advertise-address=10.0.0.11 \
  --control-plane-endpoint=cluster-endpoint \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.20.9 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=192.168.0.0/16

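# None of the network ranges may overlap: the node network (10.0.0.x here), --service-cidr and --pod-network-cidr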
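The non-overlap rule can be checked mechanically: two IPv4 CIDR blocks overlap exactly when their addresses agree on the first min(prefix1, prefix2) bits. A pure-bash sketch; `ip_to_int` and `cidrs_overlap` are hypothetical names, and the node subnet 10.0.0.0/24 is an assumption based on the node IPs 10.0.0.11 to 10.0.0.13:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the "no overlapping ranges" rule.
# Two IPv4 CIDRs overlap exactly when they agree on the first
# min(prefix1, prefix2) bits.

ip_to_int() {  # 10.96.0.0 -> 174063616
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

cidrs_overlap() {  # usage: cidrs_overlap 10.96.0.0/16 192.168.0.0/16
  local n1 n2 len mask
  n1=$(ip_to_int "${1%/*}")
  n2=$(ip_to_int "${2%/*}")
  len=$(( ${1#*/} < ${2#*/} ? ${1#*/} : ${2#*/} ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( (n1 & mask) == (n2 & mask) ))
}

# Assumed node subnet 10.0.0.0/24 plus the two ranges passed to kubeadm init:
ranges=(10.0.0.0/24 10.96.0.0/16 192.168.0.0/16)
for ((i = 0; i < ${#ranges[@]}; i++)); do
  for ((j = i + 1; j < ${#ranges[@]}; j++)); do
    if cidrs_overlap "${ranges[i]}" "${ranges[j]}"; then
      echo "OVERLAP: ${ranges[i]} and ${ranges[j]}"
    fi
  done
done
```

With the ranges used here the loop prints nothing; change 10.0.0.0/24 to 10.0.0.0/8 and it reports an overlap with 10.96.0.0/16.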

Read the output below and save a copy; you will need it later:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join cluster-endpoint:6443 --token hums8f.vyx71prsg74ofce7 \
    --discovery-token-ca-cert-hash sha256:a394d059dd51d68bb007a532a037d0a477131480ae95f75840c461e85e2c6ae3 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token hums8f.vyx71prsg74ofce7 \
    --discovery-token-ca-cert-hash sha256:a394d059dd51d68bb007a532a037d0a477131480ae95f75840c461e85e2c6ae3

3. Follow the instructions in the output. We do the following:

3.1 Set up .kube/config

On the master:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

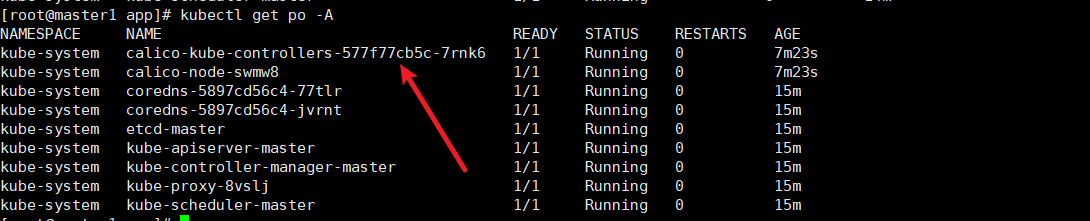

3.2 Install the Calico network plugin

curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

kubectl apply -f calico.yaml

After a while, run kubectl get pods -A to check that all components are running.
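Instead of reading the whole `kubectl get pods -A` table by eye, you can filter it. A sketch, assuming the default column order NAMESPACE, NAME, READY, STATUS; `not_ready_pods` is a hypothetical helper that prints every pod that is not Running with all of its containers ready:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods -A --no-headers` output on stdin
# and print namespace/name for every pod that is not Running with all
# containers ready (READY column "x/y" with x == y).
not_ready_pods() {
  awk '{ split($3, r, "/"); if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 }'
}

# Usage on the master; an empty result means everything is up:
#   kubectl get pods -A --no-headers | not_ready_pods
```

Pods of finished Jobs also show up, because their status is Completed rather than Running.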

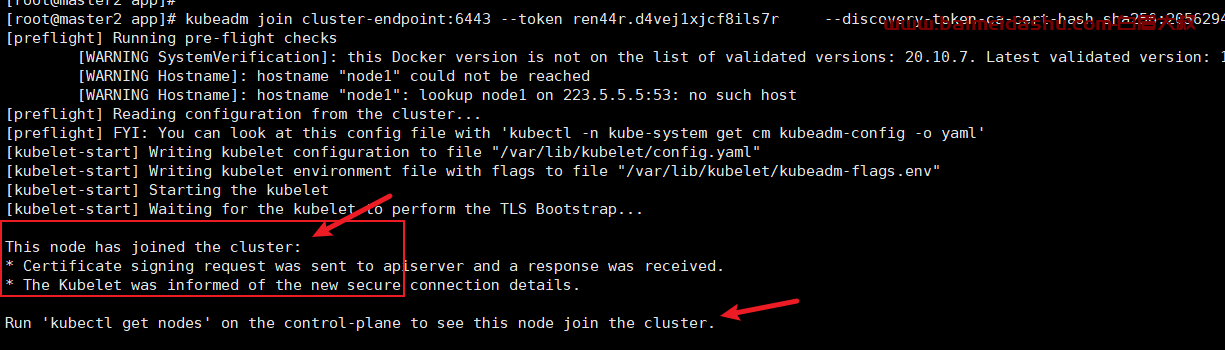

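4. Join the worker nodes

High-availability deployments also use this step: run the control-plane join command (the one with --control-plane) instead, after copying the CA and service-account keys to the new node as the init output says{#22697b84d6e909dc971c7d87bcf513eb}

On node1 and node2 (as root):

kubeadm join cluster-endpoint:6443 --token ren44r.d4vej1xjcf8ils7r \
  --discovery-token-ca-cert-hash sha256:205629477e8cad2268014a4563d7fd644ed5be441d40b49fc186de307f3862d2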

The token in this command is valid for 24 hours. If it has expired by the time you add a new node, generate a fresh join command on the master:

kubeadm token create --print-join-command
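If you have a token but lost the `--discovery-token-ca-cert-hash` value, it can be recomputed on the master: it is the SHA-256 of the cluster CA's public key in DER form. The pipeline below follows the one given in the kubeadm join documentation; wrapping it in the function `ca_cert_hash` is only for illustration, and it assumes an RSA CA key (the kubeadm default) at `/etc/kubernetes/pki/ca.crt`:

```bash
#!/usr/bin/env bash
# Recompute the value for --discovery-token-ca-cert-hash: the SHA-256 digest
# of the CA's public key (DER-encoded SubjectPublicKeyInfo).
# ca_cert_hash is a hypothetical name; assumes an RSA CA key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'    # keep only the hex digest
}

# Usage on the master:
#   echo "sha256:$(ca_cert_hash)"
```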

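Troubleshooting: the join failed with this error:

error execution phase preflight: [preflight] Some fatal errors occurred:
    [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

Fix (on the node that reported the error):

# Quick fix; it does not survive a reboot. For a permanent fix, run the br_netfilter and sysctl steps from Part 0 on this node.
echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables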

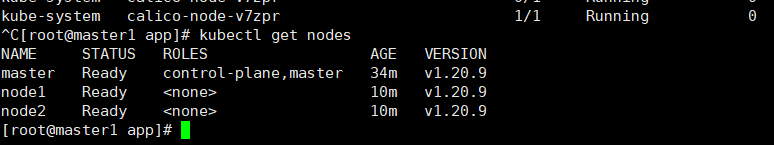

Verify the node status

After a while, check from the master node; every node should eventually show Ready:

kubectl get nodes

Part 4: The official Kubernetes web UI (Dashboard)

1. Deploy the Dashboard

https://github.com/kubernetes/dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

2. Expose an access port {#M6qiZ}

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

# In the editor, change type: ClusterIP to type: NodePort, then save

kubectl get svc -A |grep kubernetes-dashboard

## Find the port and allow it in your security group / firewall
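Two alternatives to the interactive steps above, as a sketch: `kubectl patch` switches the Service type without opening an editor, and a jsonpath query prints just the node port. The helper `node_port_from_svc_line` is hypothetical; it extracts the port from the `443:30261/TCP`-style PORT(S) column of the `grep` output and ignores the dashboard-metrics-scraper line that the same `grep` also matches.

```bash
#!/usr/bin/env bash
# Non-interactive alternative to `kubectl edit` (same namespace and service name):
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'
#   kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'

# Hypothetical helper: pull the NodePort out of `kubectl get svc` output lines
# such as "... 443:30261/TCP ...". Lines without a node port (e.g. "8000/TCP")
# print nothing.
node_port_from_svc_line() {
  sed -nE 's/.*[[:space:]][0-9]+:([0-9]+)\/TCP.*/\1/p' <<< "$1"
}

# Usage:
#   node_port_from_svc_line "$(kubectl get svc -A | grep kubernetes-dashboard)"
```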

Access: https://<any-cluster-node-IP>:<port> (it must be https){#717830cf956b2c9393828d26d55b1358}

https://10.0.0.11:30261/#/login

The login page asks for a token; steps 3 and 4 below show how to get one.

3. Create an access account

# Create the account from a YAML file: vi dash.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Then apply it:

kubectl apply -f dash.yaml

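4. Log in with a token

# Get the access token (on v1.20 the ServiceAccount gets a token Secret automatically; from v1.24 on, use kubectl -n kubernetes-dashboard create token admin-user instead)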

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

Copy the token printed in the console. Example output (your token will differ):

eyJhbGciOiJSUzI1NiIsImtpZCI6IldpTFZyd3psblJKS2prVE83WnctWXNYZEQ3SEdvdExYLXU1RjFFM0V3WkkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWx6ZGY5Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiYTM4OGUxYS0yODQyLTQ3YzQtYmUwOC05MzJiMGYxZDA4MWQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.UDXcDpYnFFmqm0OHX_ay-PMqll6gNm5_0Z2ZMjNHQQguUUmpoNbTLgZWu2ZiG3L8K4J0TSys3gCChN9asNOgvC4pLvdQZKde8yo64jLJK9IU8Odb_p02eT1AN5EbTwoeYtsbljHaHS_kF6iftvi6B9Op644BSWPcZ8hShUhOM7sYFqwPBBwIULWVPxL453L7u7Ct_oFKBuRPriAicuPbkiMVUuT08K5VopsVDpv7kBKWCEmtDGz6vWdRYYaE4l6BzVvwtRqbdVwSPN0WE3OMrTArOSr5_i7nJa91tzZ96lygUx1GRsxK2r24WXQiaUEEeQyP7cp4UtVItbLqXlfwYQ
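A service-account token is a JWT, so its middle segment can be decoded to check which account it belongs to before you paste it into the login page. A sketch; `jwt_payload` is a hypothetical helper that base64url-decodes the payload and does not verify the signature:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the (unverified) JSON payload of a JWT.
# It only decodes the middle segment; it does not check the signature.
jwt_payload() {
  local seg
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')   # base64url -> base64
  while (( ${#seg} % 4 != 0 )); do seg+="="; done        # restore stripped padding
  printf '%s' "$seg" | base64 -d
}

# Usage:
#   jwt_payload "$TOKEN"; echo
```

For the token created above, the `sub` field should be `system:serviceaccount:kubernetes-dashboard:admin-user`.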

51工具盒子
