Building the Logstash image

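Dockerfile:

```dockerfile
FROM logstash:7.17.6

LABEL author="baimeidashu@163.com"

WORKDIR /usr/share/logstash

# Replace the image's default settings file and default pipeline with our own
COPY logstash.yml /usr/share/logstash/config/logstash.yml
COPY logstash.conf /usr/share/logstash/pipeline/logstash.conf

# Switch to root: usermod below needs it, and the container then also runs as root,
# which lets Logstash read host log files that are often readable only by root
USER root

# Add the logstash user to the root group (only matters if the container is later run as logstash)
RUN usermod -a -G root logstash
```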

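Contents of logstash.yml. It holds a single setting, which makes the Logstash HTTP API listen on all interfaces instead of only on 127.0.0.1:

```yaml
http.host: "0.0.0.0"
```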

Contents of logstash.conf:

```conf
input {
  file {
    path => "/var/lib/docker/containers/*/*-json.log"   # container logs when the runtime is Docker
    #path => "/var/log/pods/*/*/*.log"                  # Pod log path when the runtime is containerd
    start_position => "beginning"
    type => "applog"                                     # log type, user-defined
  }

  file {
    path => "/var/log/*.log"                             # operating system logs
    start_position => "beginning"
    type => "syslog"
  }
}

output {
  if [type] == "applog" {                                # send applog events to the Kafka topic set by TOPIC_ID
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384                                # Kafka producer batch size, in bytes
      codec => "${CODEC}"                                # output format of each record
    }
  }

  if [type] == "syslog" {                                # send syslog events to Kafka (same ${TOPIC_ID} as applog here)
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"                                # system log lines are plain text, but the json codec serializes the whole event
    }
  }
}
```
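Logstash fills the `${KAFKA_SERVER}`, `${TOPIC_ID}` and `${CODEC}` placeholders from the container's environment when it loads the pipeline. This is why the DaemonSet only has to set `env` entries. The Python sketch below mimics that substitution to show the mechanism. It is an illustration, not Logstash's implementation. It handles `${VAR}` and the `${VAR:default}` form Logstash also accepts. Like Logstash, it refuses to continue when a variable is unset and has no default. Logstash can also resolve values from its keystore, which this sketch ignores. The name `substitute_env` is hypothetical.

```python
import os
import re
from typing import Mapping, Optional

# Matches ${NAME} or ${NAME:default}; NAME is assumed to be word characters only.
_PLACEHOLDER = re.compile(r"\$\{(\w+)(?::([^}]*))?\}")


def substitute_env(text: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Replace ${VAR} / ${VAR:default} placeholders with values from env (hypothetical helper)."""
    env = os.environ if env is None else env

    def replace(match: "re.Match[str]") -> str:
        name, default = match.group(1), match.group(2)
        if name in env:
            return env[name]
        if default is not None:
            return default
        # Mirror Logstash: an unresolved variable without a default is an error.
        raise KeyError(f"{name} is not set and has no default value")

    return _PLACEHOLDER.sub(replace, text)


if __name__ == "__main__":
    # Values taken from the env section of the DaemonSet manifest.
    pod_env = {
        "KAFKA_SERVER": "192.168.1.250:9092",
        "TOPIC_ID": "jsonfile-log-topic",
        "CODEC": "json",
    }
    snippet = (
        'bootstrap_servers => "${KAFKA_SERVER}"\n'
        'topic_id => "${TOPIC_ID}"\n'
        'codec => "${CODEC}"'
    )
    print(substitute_env(snippet, pod_env))
```

If the pod is started without, say, `TOPIC_ID`, the pipeline fails to load instead of sending to an empty topic name. Writing `${TOPIC_ID:some-default}` in logstash.conf would avoid that.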

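Build the image and push it to the image registry (here Alibaba Cloud Container Registry; a Harbor registry works the same way):

```shell
docker build -t registry.cn-hangzhou.aliyuncs.com/baimeidashu/logstash-daemonset:7.17.6 .
docker push registry.cn-hangzhou.aliyuncs.com/baimeidashu/logstash-daemonset:7.17.6
```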

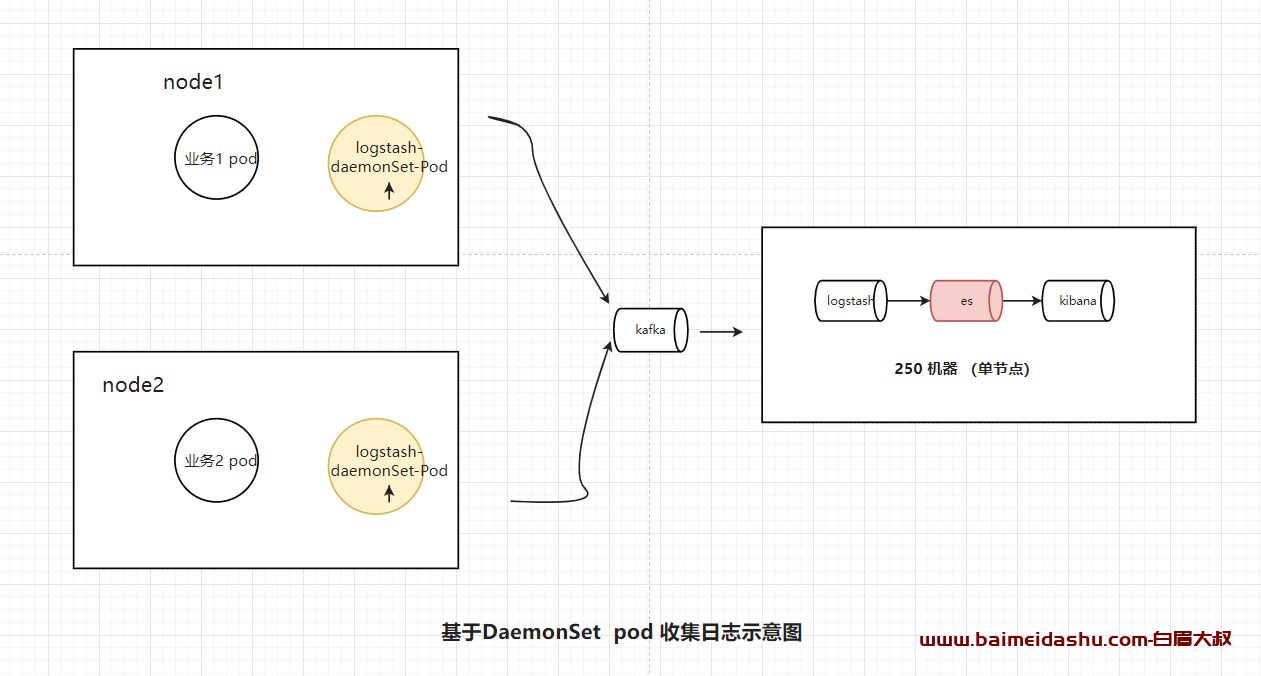

Deploying the Logstash DaemonSet

I created it in the KubeSphere web console as a DaemonSet, so that one Logstash pod runs on every node.

The resulting manifest:

```yaml
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: logstash-daemonset
  namespace: openai
  annotations:
    deprecated.daemonset.template.generation: '1'
    kubesphere.io/creator: project-admin
spec:
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: logstash
      annotations:
        kubesphere.io/creator: project-admin
    spec:
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
            type: ''
        - name: varlogpods
          hostPath:
            path: /var/log/pods
            type: ''
        # Docker runtime: the applog input reads /var/lib/docker/containers/*/*-json.log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
            type: ''
      containers:
        - name: logstash
          image: >-
            registry.cn-hangzhou.aliyuncs.com/baimeidashu/logstash-daemonset:7.17.6
          env:
            - name: KAFKA_SERVER
              value: '192.168.1.250:9092'
            - name: TOPIC_ID
              value: jsonfile-log-topic
            - name: CODEC
              value: json
          resources: {}
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlogpods
              mountPath: /var/log/pods
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: Always
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      schedulerName: default-scheduler
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 0
  revisionHistoryLimit: 10
```
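A `file` input can only read files that exist inside the container. Every directory the pipeline globs over must therefore sit under some `mountPath`. With Docker as the runtime, the files under `/var/log/pods` are symlinks into `/var/lib/docker/containers`, so that host directory has to be mounted as well. The Python sketch below checks a list of input paths against a list of mount paths. It only compares the fixed directory prefix before the first wildcard and does not follow symlinks. The function names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Iterable, List

WILDCARD_CHARS = set("*?[")


def static_prefix(glob_path: str) -> PurePosixPath:
    """Return the part of a glob path before its first component containing a wildcard."""
    parts = []
    for part in PurePosixPath(glob_path).parts:
        if any(ch in WILDCARD_CHARS for ch in part):
            break
        parts.append(part)
    return PurePosixPath(*parts)


def is_under(path: PurePosixPath, mount: str) -> bool:
    """True if path equals the mount point or lies somewhere below it."""
    mount_path = PurePosixPath(mount)
    return path == mount_path or mount_path in path.parents


def uncovered_paths(input_paths: Iterable[str], mount_paths: Iterable[str]) -> List[str]:
    """List the input globs whose fixed prefix is not below any mount path."""
    mounts = list(mount_paths)
    return [p for p in input_paths
            if not any(is_under(static_prefix(p), m) for m in mounts)]


if __name__ == "__main__":
    inputs = [
        "/var/lib/docker/containers/*/*-json.log",  # applog input (Docker runtime)
        "/var/log/*.log",                           # syslog input
    ]
    print(uncovered_paths(inputs, ["/var/log", "/var/log/pods"]))
    print(uncovered_paths(inputs, ["/var/log", "/var/log/pods", "/var/lib/docker/containers"]))
```

Checked against only `/var/log` and `/var/log/pods`, the sketch reports the Docker log path as uncovered. Adding `/var/lib/docker/containers` to the mounts clears it.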

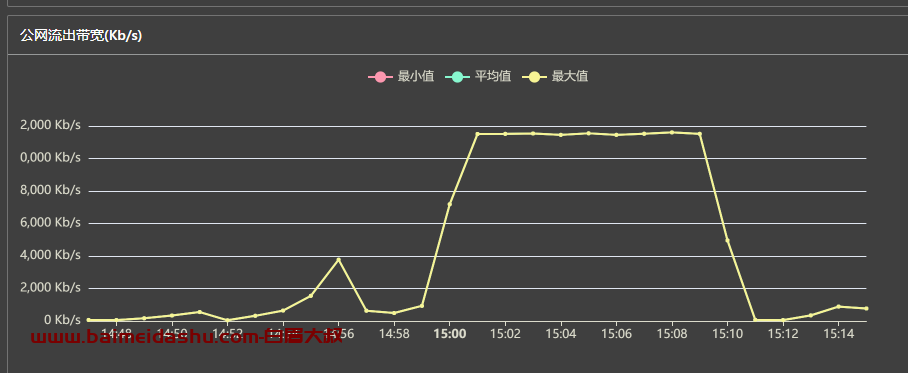

Checking in Kafka shows that the logs have arrived.
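With `codec => json`, each Kafka record is the whole Logstash event serialized as JSON. For `applog` events, the `message` field is itself one line of Docker's json-file log, an object with `log`, `stream` and `time` keys. The Python sketch below unpacks such a record. The sample record is made up for illustration. Apart from `message`, `type` and `@timestamp`, the fields Logstash adds (such as `path` and `host`) depend on the version and ECS settings. The name `decode_record` is hypothetical.

```python
import json
from typing import Any, Dict


def decode_record(value: bytes) -> Dict[str, Any]:
    """Decode a Kafka record written by Logstash's json codec (hypothetical helper).

    For applog events, also unpack the Docker json-file line held in "message".
    """
    event = json.loads(value)
    result: Dict[str, Any] = {"type": event.get("type"), "timestamp": event.get("@timestamp")}
    if event.get("type") == "applog":
        docker_line = json.loads(event["message"])
        result["stream"] = docker_line["stream"]           # stdout or stderr
        result["text"] = docker_line["log"].rstrip("\n")   # the container's own output
    else:
        # syslog lines are plain text, so the message is used as-is
        result["text"] = event.get("message", "")
    return result


if __name__ == "__main__":
    # Hypothetical record, shaped like what the applog branch would send.
    sample = json.dumps({
        "@timestamp": "2022-11-01T08:00:00.000Z",
        "@version": "1",
        "type": "applog",
        "message": json.dumps({"log": "GET /healthz 200\n", "stream": "stdout",
                               "time": "2022-11-01T08:00:00.123456789Z"}),
    }).encode("utf-8")
    print(decode_record(sample))
```

Both branches in logstash.conf write to the same `${TOPIC_ID}`, so a consumer has to branch on `type` as above. Alternatively, the two outputs could be given separate topics.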

51工具盒子
