Collecting K8s cluster logs with the EFK stack

This setup depends on the PVC configured on day 8.

1. Import the images

[root@master231 efk-images]# for i in *.tar.gz; do docker load -i "$i"; done

[root@master231 efk-images]# docker push harbor.baimei.com/baimei-efk/elasticsearch:7.17.5

[root@master231 efk-images]# docker push harbor.baimei.com/baimei-efk/filebeat:7.10.2

[root@master231 efk-images]# docker push harbor.baimei.com/baimei-efk/kibana:7.17.5

2. Deploy the ES service

cat 01-deploy-es.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: baimei-efk

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: baimei-efk
  labels:
    k8s-app: elasticsearch
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: elasticsearch
  template:
    metadata:
      labels:
        k8s-app: elasticsearch
    spec:
      containers:
      # The ES version to install
      # - image: elasticsearch:7.17.5
      - image: harbor.baimei.com/baimei-efk/elasticsearch:7.17.5
        name: elasticsearch
        resources:
          limits:
            cpu: 2
            memory: 3Gi
          requests:
            cpu: 0.5
            memory: 500Mi
        env:
        # Cluster deployment mode; this is a lab environment, so a single node is configured
        - name: "discovery.type"
          value: "single-node"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-data
          mountPath: /usr/share/elasticsearch/data
      volumes:
      - name: elasticsearch-data
        persistentVolumeClaim:
          claimName: es-pvc

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-pvc
  namespace: baimei-efk
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi

---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: baimei-efk
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: 9200
  selector:
    k8s-app: elasticsearch

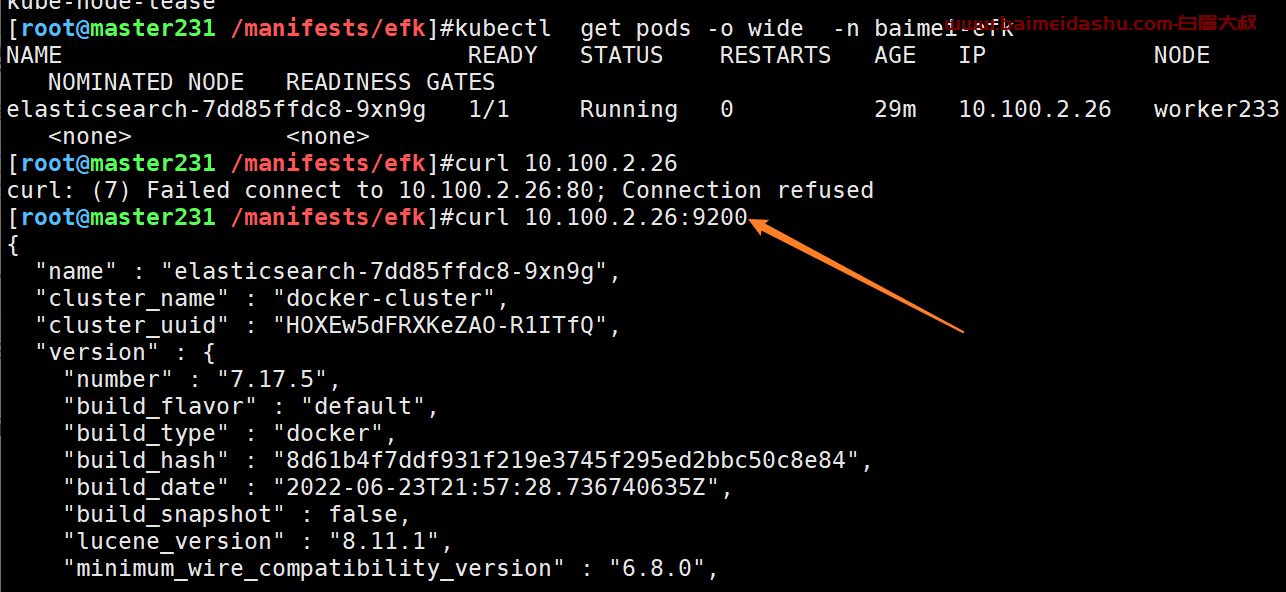

kubectl get pods -o wide -n baimei-efk
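Listing the Pods shows whether they were scheduled and started, but not whether Elasticsearch itself is answering requests. A minimal sketch of a follow-up check is shown here; the helper name `check_es` and the 180-second timeout are illustrative assumptions, and the sketch assumes `curl` is available inside the Elasticsearch image.

```shell
# Hypothetical helper (not part of the original manifests):
# wait for the elasticsearch Deployment to roll out, then ask ES for its cluster health.
check_es() {
  local ns="${1:-baimei-efk}"   # namespace; defaults to the one used in this post
  kubectl -n "$ns" rollout status deployment/elasticsearch --timeout=180s &&
    kubectl -n "$ns" exec deploy/elasticsearch -- \
      curl -s 'http://localhost:9200/_cluster/health?pretty'
}
```

Calling `check_es` with no argument targets the `baimei-efk` namespace. On a single-node cluster the reported status may be `yellow` once indices with replicas exist, since those replicas have nowhere to be allocated.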

3. Deploy the Kibana service

cat 02-deploy-kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: baimei-efk
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
    spec:
      containers:
      - name: kibana
        # image: kibana:7.17.5
        image: harbor.baimei.com/baimei-efk/kibana:7.17.5
        resources:
          limits:
            cpu: 2
            memory: 2Gi
          requests:
            cpu: 0.5
            memory: 500Mi
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch.baimei-efk.svc.baimei.com:9200
        - name: I18N_LOCALE
          value: zh-CN
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP

---
apiVersion: v1
kind: Service
metadata:
  name: baimei-kibana
  namespace: baimei-efk
spec:
  type: NodePort
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
    nodePort: 35601
  selector:
    k8s-app: kibana

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: baimei-kibana-ing
  namespace: baimei-efk
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: kibana.baimei.com
    http:
      paths:
      - backend:
          service:
            name: baimei-kibana
            port:
              number: 5601
        path: "/"
        pathType: "Prefix"
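The Kibana Deployment reaches Elasticsearch through the fully qualified Service name `elasticsearch.baimei-efk.svc.baimei.com`, which implies that this cluster's DNS domain is `baimei.com` rather than the Kubernetes default `cluster.local`. As a small illustration of how such names are composed (the helper name `svc_dns_names` is made up for this sketch), the equivalent names of a Service can be listed like this:

```shell
# Hypothetical helper: print the DNS names under which a Service can be addressed,
# from the shortest form to the fully qualified one.
svc_dns_names() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  printf '%s\n' "$svc" "$svc.$ns" "$svc.$ns.svc" "$svc.$ns.svc.$domain"
}

# The Service, namespace, and cluster domain used in this post:
svc_dns_names elasticsearch baimei-efk baimei.com
```

The bare Service name resolves only from Pods in the same namespace; the forms that include the namespace also work from other namespaces.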

4. Deploy Filebeat

cat 03-ds-filebeat.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: baimei-efk
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        # Mounted `filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false
    output.elasticsearch:
      # hosts: ['elasticsearch.baimei-efk:9200']
      # hosts: ['elasticsearch.baimei-efk.svc.baimei.com:9200']
      hosts: ['elasticsearch:9200']
      # Changing the index is not recommended: once the index name has been changed, Pod data is no longer collected.
      # Uncomment the line below only if you are sure you are not collecting Pod logs and need a custom index name.
      # index: 'baimei-linux-elk-%{+yyyy.MM.dd}'
    # Index template settings
    # setup.ilm.enabled: false
    # setup.template.name: "baimei-linux-elk"
    # setup.template.pattern: "baimei-linux-elk*"
    # setup.template.overwrite: true
    # setup.template.settings:
    #   index.number_of_shards: 3
    #   index.number_of_replicas: 0

Note: the docker input type has been deprecated since Filebeat 7.2; it is advisable to switch to the container input type later.

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: baimei-efk
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      containers.ids:
      - "*"
      processors:
        - add_kubernetes_metadata:
            in_cluster: true
        - decode_json_fields:
            fields: ["message"]
            target: ""
            overwrite_keys: true
            add_error_key: true

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: baimei-efk
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
        operator: Exists
      # - key: node.kubernetes.io/disk-pressure
      #   effect: NoSchedule
      #   operator: Exists
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        # Note: the recommended official Filebeat image is "elastic/filebeat:7.10.2".
        # Versions newer than "elastic/filebeat:7.10.2" may fail to collect Pod logs and metadata from the K8s cluster.
        # In my tests, the problem was still unresolved in version 7.12.2, released on 2022-04-01.
        # The Filebeat and ES versions do not have to match; I tested against ES 7.17.2.
        #
        # TODO: try a newer image later and switch the input type to container, because the docker input type has been deprecated since Filebeat 7.2.
        # image: elastic/filebeat:7.10.2
        image: harbor.baimei.com/baimei-efk/filebeat:7.10.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        # Useful for temporary debugging when something goes wrong; remember to comment out args first.
        # command: ["sleep","3600"]
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: baimei-efk
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: baimei-efk
  labels:
    k8s-app: filebeat

kubectl apply -f 03-ds-filebeat.yaml
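After applying the manifest, it can be useful to confirm that the DaemonSet has rolled out on every node and that log data is actually arriving in Elasticsearch. The following is a sketch under a few assumptions: the helper name `check_filebeat` is hypothetical, `curl` is assumed to exist in the Elasticsearch image, and because the custom `index` setting is left commented out, the indices are assumed to use Filebeat's default `filebeat-` prefix.

```shell
# Hypothetical helper: wait for the filebeat DaemonSet, then list the filebeat-* indices in ES.
check_filebeat() {
  local ns="${1:-baimei-efk}"   # namespace; defaults to the one used in this post
  kubectl -n "$ns" rollout status daemonset/filebeat --timeout=180s &&
    kubectl -n "$ns" exec deploy/elasticsearch -- \
      curl -s 'http://localhost:9200/_cat/indices/filebeat-*?v'
}
```

If the index list comes back empty, the Filebeat Pod logs (`kubectl -n baimei-efk logs ds/filebeat`) are the first place to look.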

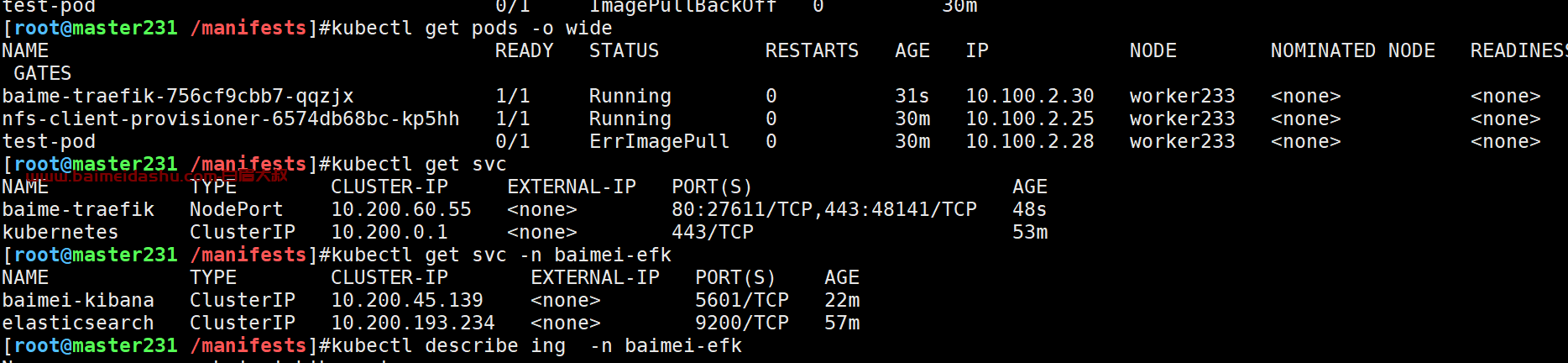

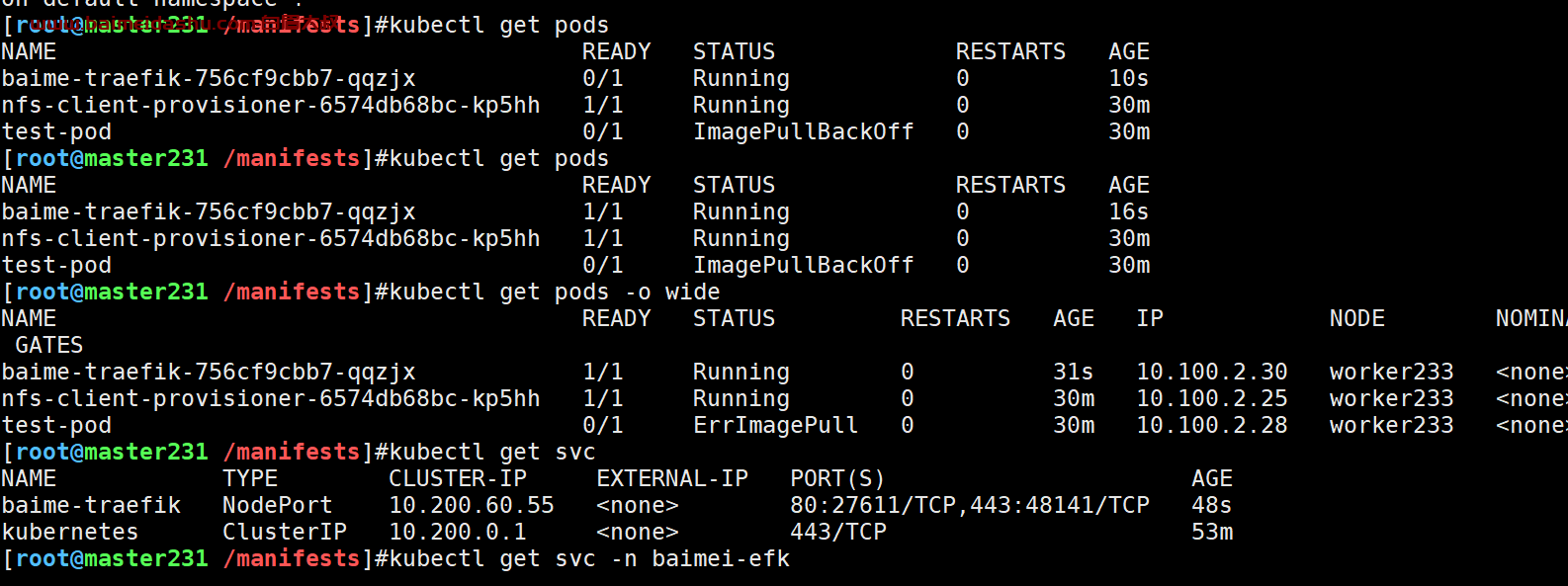

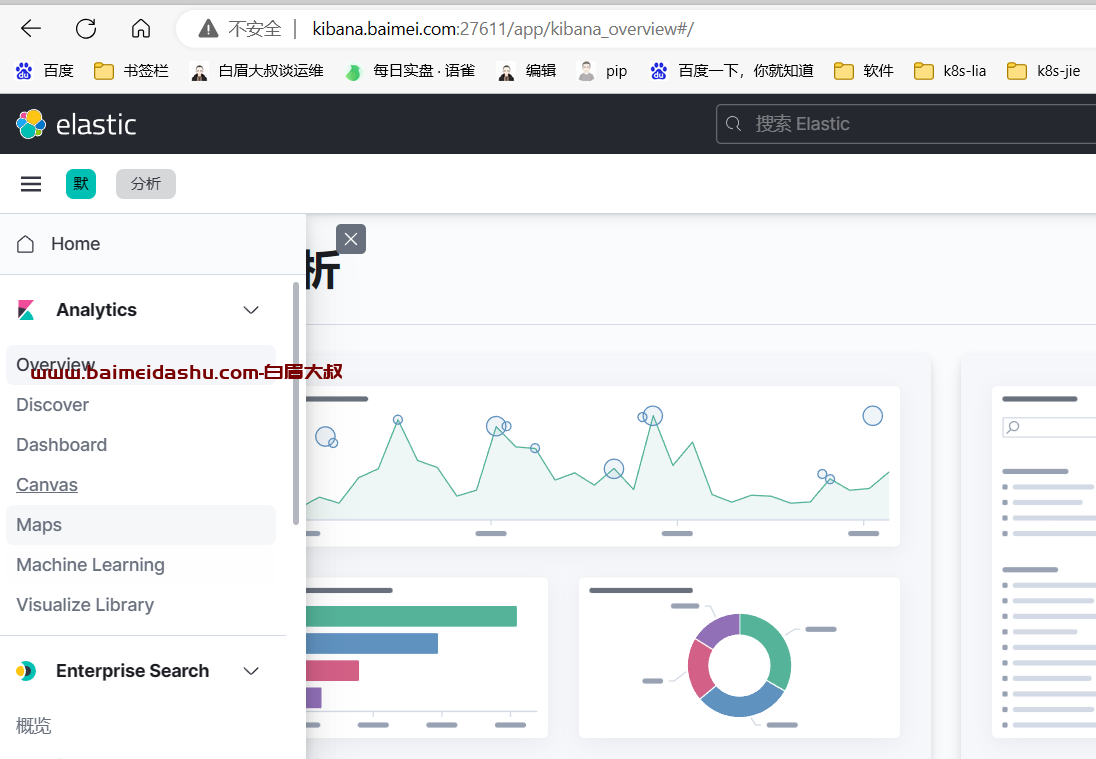

Everything is now running normally.
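The manifest notes that the docker input type is deprecated and leaves the switch to the container input as a follow-up. A rough sketch of what the replacement `kubernetes.yml` could look like is given below, written out by a hypothetical helper `write_container_input`. It follows the layout of Elastic's reference Kubernetes manifest and has not been tested against this cluster. It assumes that the DaemonSet additionally mounts the host's `/var/log` directory read-only and injects a `NODE_NAME` environment variable taken from `spec.nodeName`; neither is present in the DaemonSet above.

```shell
# Hypothetical helper: write a container-type input definition to the given file.
# The heredoc is quoted so that ${NODE_NAME} stays literal and is expanded by Filebeat, not the shell.
write_container_input() {
  local out="${1:-kubernetes.yml}"
  cat > "$out" <<'EOF'
- type: container
  paths:
    - /var/log/containers/*.log
  processors:
    - add_kubernetes_metadata:
        host: ${NODE_NAME}
        matchers:
          - logs_path:
              logs_path: "/var/log/containers/"
EOF
}
```

The generated content would take the place of the `kubernetes.yml` entry in the `filebeat-inputs` ConfigMap. The `decode_json_fields` processor from the original input can be appended to the `processors` list unchanged.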

But how should things be set up so that Kibana can be reached by domain name?

http://kibana.baimei.com:27611/app/home#/

The prerequisite is to get Traefik up and running:

helm install baime-traefik traefik/traefik

Check the Ingress:

kubectl get ing -n baimei-efk

Configure the hosts file:

10.0.0.233 kibana.baimei.com

After that, the URL above is accessible.
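Editing the hosts file by hand is fine for a one-off. If the step is scripted, it helps to make it idempotent so that repeated runs do not pile up duplicate lines. A minimal sketch, assuming a Linux or macOS client whose hosts file is `/etc/hosts`; `add_hosts_entry` is a hypothetical helper name, and the IP and hostname are the ones from the entry above.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the hostname is already mapped.
add_hosts_entry() {
  local ip="$1" host="$2" file="${3:-/etc/hosts}"
  # Skip comment lines; compare every hostname field exactly against the requested name.
  if awk -v h="$host" '
       /^[[:space:]]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }
     ' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Example (writing to /etc/hosts needs root privileges):
#   add_hosts_entry 10.0.0.233 kibana.baimei.com
# Then check the HTTP status code returned through the Ingress, using the port from the URL above:
#   curl -s -o /dev/null -w '%{http_code}\n' http://kibana.baimei.com:27611/app/home
```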
