minikube version: v1.16.0

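Pod Types {#pod的分类}

Pods fall into two categories:

- Standalone Pod: once the Pod exits, it is not recreated.

- Controller-managed Pod: for as long as the controller exists, it keeps the number of Pod replicas at the desired count.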

Init Containers {#init容器}

Init container template

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: myapp-container
      image: busybox
      command: ["sh", "-c", "echo The app is running! && sleep 3600"]
  initContainers:
    - name: init-myservice
      image: busybox
      # myservice is the name of a Service
      command: ["sh", "-c", "until nslookup myservice; do echo waiting for myservice; sleep 2; done;"]
    - name: init-mydb
      image: busybox
      # Whether the mydb host name resolves depends on a Service: if a Service named mydb exists, the cluster DNS resolves mydb to that Service's IP
      command: ["sh", "-c", "until nslookup mydb; do echo waiting for mydb; sleep 2; done;"]

Create the following two Services (svc is short for Service)

myservice

kind: Service
apiVersion: v1
metadata:
  name: myservice
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376

mydb

kind: Service
apiVersion: v1
metadata:
  name: mydb
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9377

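Probes {#探针}

A probe is a periodic diagnostic that the kubelet performs on a container. To run the diagnostic, the kubelet calls a handler implemented by the container. There are three types of handlers:

- ExecAction: executes a specified command inside the container. The diagnostic succeeds if the command exits with status code 0.

- TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic succeeds if the port is open.

- HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic succeeds if the response status code is greater than or equal to 200 and less than 400.

Each probe yields one of three results:

- Success: the container passed the diagnostic.

- Failure: the container failed the diagnostic.

- Unknown: the diagnostic itself failed, so no action is taken.

There are two kinds of probes:

- LivenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is then subject to its restart policy. If a container does not provide a liveness probe, the default state is Success.

- ReadinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The readiness state before the initial delay defaults to Failure. If a container does not provide a readiness probe, the default state is Success.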

Readiness Probe {#探针就绪检测}

ReadinessProbe-httpget

apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpget-pod
  namespace: default
spec:
  containers:
    - name: readiness-httpget-container
      image: nginx:1.19.6
      imagePullPolicy: IfNotPresent
      readinessProbe:
        httpGet:
          port: 80
          path: /index1.html
        initialDelaySeconds: 1
        periodSeconds: 3

Liveness Probe {#生存检测}

livenessProbe-exec

apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-pod
spec:
  containers:
    - name: liveness-exec-container
      image: nginx:1.19.6
      imagePullPolicy: IfNotPresent
      # On startup, create /tmp/live and delete it after 60s.
      # During the first minute the file exists, so the liveness check passes.
      # After that the file is gone, the liveness check fails, the kubelet kills the container, and it is restarted according to the Pod's restart policy.
      command: ["/bin/sh", "-c", "touch /tmp/live; sleep 60; rm -rf /tmp/live; sleep 3600;"]
      livenessProbe:
        exec:
          # Test whether the file exists; exit code 0 means healthy
          command: ["test", "-e", "/tmp/live"]
        initialDelaySeconds: 1
        periodSeconds: 3

livenessProbe-httpget

apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
spec:
  containers:
    - name: liveness-httpget-container
      image: nginx:1.19.6
      imagePullPolicy: IfNotPresent
      ports:
        - name: http
          containerPort: 80
      livenessProbe:
        httpGet:
          port: http
          path: /index.html
        initialDelaySeconds: 1
        periodSeconds: 3

livenessProbe-tcp

apiVersion: v1
kind: Pod
metadata:
  name: liveness-tcp-pod
spec:
  containers:
    - name: nginx
      image: nginx:1.19.6
      imagePullPolicy: IfNotPresent
      livenessProbe:
        tcpSocket:
          # Check whether port 80 accepts connections
          port: 80
        timeoutSeconds: 1
        initialDelaySeconds: 5
        periodSeconds: 3

Init containers, readiness probes, and liveness probes can be combined in the same Pod
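As a rough sketch of how the three mechanisms fit together, the manifest below reuses the init container and probe settings from the examples above in a single Pod. The Pod name, the container name web, and the failureThreshold value are illustrative assumptions, not part of the original notes.

```yaml
# Hypothetical Pod: waits for the myservice Service, then runs nginx
# with both a readiness and a liveness probe.
apiVersion: v1
kind: Pod
metadata:
  name: probes-combined-pod   # illustrative name
spec:
  initContainers:
    - name: init-myservice
      image: busybox
      command: ["sh", "-c", "until nslookup myservice; do echo waiting for myservice; sleep 2; done"]
  containers:
    - name: web               # illustrative name
      image: nginx:1.19.6
      imagePullPolicy: IfNotPresent
      ports:
        - name: http
          containerPort: 80
      # Not added to Service endpoints until this check succeeds
      readinessProbe:
        httpGet:
          port: http
          path: /index.html
        initialDelaySeconds: 1
        periodSeconds: 3
      # Restarted by the kubelet if this check keeps failing
      livenessProbe:
        tcpSocket:
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 3
        failureThreshold: 3   # assumed value
```

The init container must finish before the main container starts, so the probes only begin once myservice resolves.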

Startup and Shutdown Hooks {#启动退出动作}

apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-demo
spec:
  containers:
    - name: nginx
      image: nginx:1.19.6
      imagePullPolicy: IfNotPresent
      lifecycle:
        # Runs right after the container starts
        postStart:
          exec:
            command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message.txt"]
        # Runs before the container stops, for cleanup work
        preStop:
          exec:
            command: ["/bin/sh", "-c", "echo preStop cleanup before exit"]

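The Pod status takes one of the following values

- Pending: the Pod has been accepted by the Kubernetes system, but one or more container images have not yet been created. The waiting time includes the time spent scheduling the Pod and downloading images over the network.

- Running: the Pod has been bound to a node and all of its containers have been created. At least one container is running, or is in the process of starting or restarting.

- Succeeded: all containers in the Pod have terminated successfully and will not be restarted.

- Failed: all containers in the Pod have terminated, and at least one container terminated in failure. That is, the container either exited with a non-zero status or was terminated by the system.

- Unknown: the state of the Pod could not be obtained for some reason, usually because of a communication failure with the host where the Pod runs.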

Replica Sets {#replica-sets}

ReplicaSet (RS) is the next-generation Replication Controller (RC). The only difference between a ReplicaSet and a Replication Controller is selector support: a ReplicaSet supports the set-based selector requirements described in the labels user guide, whereas a Replication Controller only supports equality-based selector requirements.

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
spec:
  # Number of replicas the RS maintains
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
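The set-based selector mentioned above can be sketched with matchExpressions. This variant is an illustration only; the name frontend-set-based and the extra label value cache are assumptions added for the example.

```yaml
# Hypothetical ReplicaSet using a set-based selector.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend-set-based    # illustrative name
spec:
  replicas: 3
  selector:
    matchExpressions:
      # Selects Pods whose tier label is either frontend or cache
      - key: tier
        operator: In
        values:
          - frontend
          - cache             # assumed extra value
  template:
    metadata:
      labels:
        tier: frontend        # must satisfy the selector above
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
```

An equality-based selector can only express tier = frontend, while the In operator accepts a set of values.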

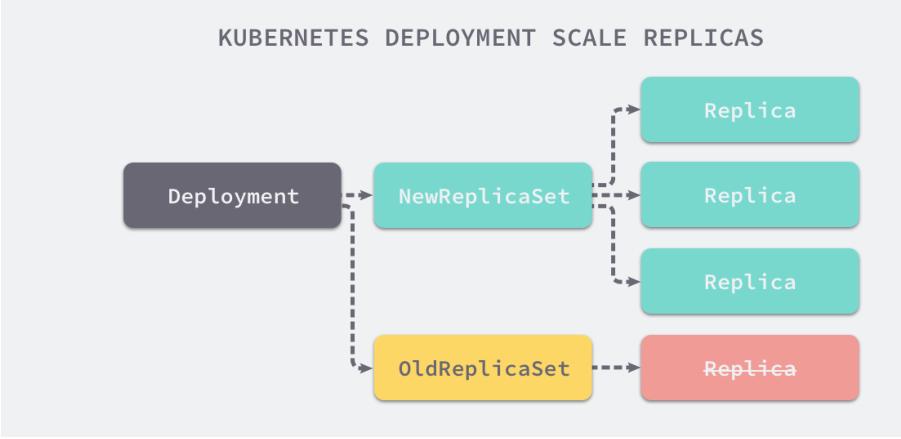

Relationship between ReplicaSet and Deployment

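Deployment {#deployment}

A Deployment provides declarative updates for Pods and ReplicaSets (the next-generation Replication Controller).

You only describe the desired state in the Deployment, and the Deployment controller changes the actual state of the Pods and ReplicaSets to match it. You can define a brand-new Deployment to create a ReplicaSet, or delete an existing Deployment and create a new one to replace it.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  # apps/v1 requires a selector that matches the Pod template labels
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.18
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80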

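Apply it

# The --record flag records the command in the rollout history
kubectl apply -f deployment-demo.yaml --record

A Deployment is defined declaratively, so it is created with apply

Check the status

$ kubectl get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
nginx-deployment 3/3 3 3 3m37s

Creating a Deployment also creates a ReplicaSet, which can be verified with the following command

$ kubectl get rs
NAME DESIRED CURRENT READY AGE
nginx-deployment-76ccf9dd9d 3 3 3 4m14s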

Scale out

$ kubectl scale deployment nginx-deployment --replicas=4

Update the image

$ kubectl set image deployment/nginx-deployment nginx=nginx:1.19

While the update runs, an additional ReplicaSet appears

$ kubectl set image deployment/nginx-deployment nginx=nginx:1.19
deployment.apps/nginx-deployment image updated
$ kubectl get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
nginx-deployment 3/3 1 3 105s
$ kubectl get rs
NAME DESIRED CURRENT READY AGE
nginx-deployment-67dfd6c8f9 3 3 3 111s
nginx-deployment-7cf55fb7bb 1 1 0 14s
$ kubectl get rs
NAME DESIRED CURRENT READY AGE
nginx-deployment-67dfd6c8f9 0 0 0 2m
nginx-deployment-7cf55fb7bb 3 3 3 23

Roll back (to the previous revision by default)

$ kubectl rollout undo deployment/nginx-deployment

Other common commands

# Check the rollout status
$ kubectl rollout status deployment/nginx-deployment
# View the rollout history
$ kubectl rollout history deployment/nginx-deployment
# Roll back to a specific revision with --to-revision
$ kubectl rollout undo deployment/nginx-deployment --to-revision=2
# Pause the Deployment rollout
$ kubectl rollout pause deployment/nginx-deployment
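The gradual handover between the old and new ReplicaSet seen above is governed by the Deployment's update strategy. The sketch below shows the relevant fields; the specific values for maxSurge, maxUnavailable, and revisionHistoryLimit are assumptions chosen for illustration, not settings taken from these notes.

```yaml
# Hypothetical rolling-update settings for nginx-deployment.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  # How many old ReplicaSets are kept for rollback (assumed value)
  revisionHistoryLimit: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most one extra Pod during the update (assumed)
      maxUnavailable: 0    # never drop below the desired replica count (assumed)
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.19
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
```

With these values, the new ReplicaSet gains one Pod at a time, and an old Pod is removed only after its replacement is ready.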

DaemonSet {#demonset}

apiVersion: apps/v1
kind: DaemonSet
metadata:
  # step1
  name: daemonset-example
  labels:
    app: daemonset-demo
spec:
  selector:
    matchLabels:
      # Must match the Pod template labels below (here the same value as the name in step1)
      name: daemonset-example
  template:
    metadata:
      labels:
        name: daemonset-example
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80

Job {#job}

apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    metadata:
      name: pi
    spec:
      containers:
        - name: pi-container
          image: perl
          # Compute pi
          command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never

The log output shows pi printed to 2000 digits

$ kubectl logs job/pi

CronJob {#cronjob}

A CronJob is defined declaratively, so create it with apply

The operations performed by the Job should be idempotent

apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: hello
spec:
  # minute hour day-of-month month day-of-week
  schedule: "*/1 * * * *"
  jobTemplate:
    # Job
    spec:
      template:
        # Pod
        spec:
          containers:
            - name: hello
              image: busybox:1.32
              args:
                - /bin/sh
                - -c
                - date;echo Hello from the Kubernetes cluster
          restartPolicy: Never

List the CronJobs

$ kubectl get cronjob
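Because a scheduled run can overlap with a previous one or be retried, it may help to constrain how runs are started and retained. The following is a minimal sketch with assumed values; the name hello-guarded is hypothetical, and on clusters newer than the one used in these notes the apiVersion would be batch/v1.

```yaml
# Hypothetical CronJob with overlap and history controls.
apiVersion: batch/v1beta1        # batch/v1 on Kubernetes 1.21 and later
kind: CronJob
metadata:
  name: hello-guarded            # illustrative name
spec:
  schedule: "*/1 * * * *"
  # Skip a run if the previous one is still active
  concurrencyPolicy: Forbid
  # Give up on a run that could not start within 30s (assumed value)
  startingDeadlineSeconds: 30
  # Keep only a few finished Jobs around (assumed values)
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 1
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: hello
              image: busybox:1.32
              args:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes cluster
          restartPolicy: Never
```

Even with concurrencyPolicy set to Forbid, keeping the job body idempotent remains the safer design, since a run can still be repeated after a failure.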

Service (SVC) {#svc}

Binding a Deployment to a Service

Create the Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      release: stable
  template:
    metadata:
      labels:
        app: myapp
        release: stable
        env: test
    spec:
      containers:
        - name: myapp
          image: wangyanglinux/myapp:v2
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 80

Create the Service

apiVersion: v1
kind: Service
metadata:
  name: myapp
spec:
  type: ClusterIP
  # Pods are matched by label
  selector:
    app: myapp
    release: stable
  ports:
    - name: http
      port: 80
      targetPort: 80

HeadlessService {#headlessservice}

Sometimes load balancing and a separate Service IP are not needed or not wanted. In that case, a Headless Service can be created by setting the cluster IP (spec.clusterIP) to None. Such a Service is not allocated a cluster IP, kube-proxy does not handle it, and the platform performs no load balancing or routing for it

apiVersion: v1
kind: Service
metadata:
  name: myapp-headless
  namespace: default
spec:
  selector:
    app: myapp
  clusterIP: "None"
  ports:
    - port: 80
      targetPort: 80

After the Service is created, the output looks like this

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

myapp-headless ClusterIP None <none> 80/TCP 26s

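Once a Service is created, it is registered in CoreDNS under a name of the form SVC_NAME.NAMESPACE.svc.cluster.local. The address of the cluster DNS server can be found as follows

# If the address is not reachable from the host, run the queries inside the minikube node: minikube ssh
$ kubectl get pod -n kube-system -o wide

The output looks like this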

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-54d67798b7-5trfs 1/1 Running 1 23h 172.17.0.2 minikube <none> <none>

etcd-minikube 1/1 Running 1 23h 192.168.49.2 minikube <none> <none>

kube-apiserver-minikube 1/1 Running 1 23h 192.168.49.2 minikube <none> <none>

kube-controller-manager-minikube 1/1 Running 1 23h 192.168.49.2 minikube <none> <none>

kube-proxy-zm25c 1/1 Running 1 23h 192.168.49.2 minikube <none> <none>

kube-scheduler-minikube 1/1 Running 1 23h 192.168.49.2 minikube <none> <none>

storage-provisioner 1/1 Running 2 23h 192.168.49.2 minikube <none> <none>

The coredns Pod appears in the list

# Resolve the Service through the CoreDNS IP
dig -t A myapp-headless.default.svc.cluster.local. @172.17.0.2

The output looks like this

docker@minikube:~$ dig -t A myapp-headless.default.svc.cluster.local. @172.17.0.2

; <<>> DiG 9.16.1-Ubuntu <<>> -t A myapp-headless.default.svc.cluster.local. @172.17.0.2

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 34601

;; flags: qr aa rd; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 1a5ce669b9476b67 (echoed)

;; QUESTION SECTION:

;myapp-headless.default.svc.cluster.local. IN A

;; ANSWER SECTION:

myapp-headless.default.svc.cluster.local. 30 IN A 172.17.0.5

myapp-headless.default.svc.cluster.local. 30 IN A 172.17.0.4

myapp-headless.default.svc.cluster.local. 30 IN A 172.17.0.3

;; Query time: 3 msec
;; SERVER: 172.17.0.2#53(172.17.0.2)
;; WHEN: Wed Jan 20 07:55:14 UTC 2021
;; MSG SIZE rcvd: 249

NodePort {#nodeport}

NodePort exposes a Service to external users. It works by opening a port on the node; traffic arriving on that port is handed to kube-proxy, which forwards it on to the matching Pods

apiVersion: v1
kind: Service
metadata:
  name: myapp
  namespace: default
spec:
  type: NodePort
  selector:
    app: myapp
  ports:
    - name: http
      port: 80
      targetPort: 80
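In the manifest above the node port is assigned automatically. If a fixed port is preferred, it can be requested explicitly, as in this sketch; the name myapp-fixed-port and the port number 30080 are assumptions, and the number has to fall inside the cluster's NodePort range (30000-32767 by default).

```yaml
# Hypothetical NodePort Service with an explicitly chosen node port.
apiVersion: v1
kind: Service
metadata:
  name: myapp-fixed-port   # illustrative name
  namespace: default
spec:
  type: NodePort
  selector:
    app: myapp
  ports:
    - name: http
      port: 80             # port of the Service inside the cluster
      targetPort: 80       # port of the container
      nodePort: 30080      # port opened on every node (assumed value)
```

The Service would then be reachable at the node's IP on port 30080, as long as that port is not already taken by another Service.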

LoadBalancer {#loadbalancer}

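LoadBalancer and NodePort are essentially the same mechanism. The difference is that LoadBalancer adds one more step: it can call the cloud provider to create a load balancer that directs traffic to the nodes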
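A LoadBalancer Service is declared the same way as the NodePort example, with only the type changed. The manifest below is a sketch under that assumption; the name myapp-lb is hypothetical. On a local minikube cluster there is no cloud provider, so the external IP would typically stay pending unless something like minikube tunnel is running.

```yaml
# Hypothetical LoadBalancer Service for the myapp Pods.
apiVersion: v1
kind: Service
metadata:
  name: myapp-lb        # illustrative name
  namespace: default
spec:
  type: LoadBalancer
  selector:
    app: myapp
  ports:
    - name: http
      port: 80          # port exposed by the load balancer
      targetPort: 80    # port of the container
```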

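ExternalName

A Service of this type maps the Service to the contents of the externalName field (for example k8s.guqing.xyz) by returning a CNAME record with that value. An ExternalName Service is a special case of Service: it has no selector and defines no ports or Endpoints. Instead, for a service that runs outside the cluster, it provides access by returning an alias for that external service

kind: Service
apiVersion: v1
metadata:
  name: my-service-1
  namespace: default
spec:
  type: ExternalName
  externalName: my.database.example.com

When the host my-service-1.default.svc.cluster.local is looked up (the format is SVC_NAME.NAMESPACE.svc.cluster.local), the cluster DNS service returns a CNAME record with the value my.database.example.com. Accessing this Service works the same way as accessing any other, except that the redirection happens at the DNS layer and no proxying or forwarding takes place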

After creation, the Service is listed as follows

$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d
my-service-1 ExternalName <none> my.database.example.com <none> 3s

The resolution looks like this

docker@minikube:~$ dig -t A my-service-1.default.svc.cluster.local. @172.17.0.2

; <<>> DiG 9.16.1-Ubuntu <<>> -t A my-service-1.default.svc.cluster.local. @172.17.0.2

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 24122

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 9ba11bfc7ec49fd9 (echoed)

;; QUESTION SECTION:

;my-service-1.default.svc.cluster.local. IN A

;; ANSWER SECTION:

my-service-1.default.svc.cluster.local. 30 IN CNAME my.database.example.com.

;; Query time: 1007 msec
;; SERVER: 172.17.0.2#53(172.17.0.2)
;; WHEN: Fri Jan 22 08:53:19 UTC 2021
;; MSG SIZE rcvd: 154

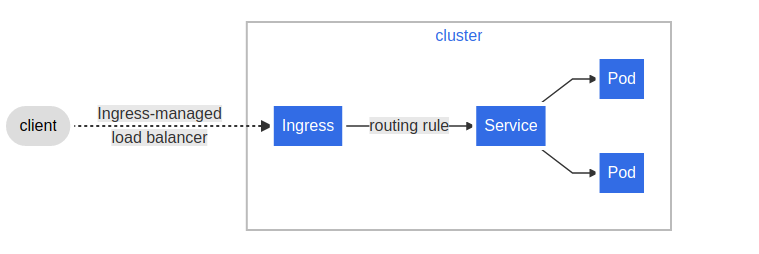

Ingress-Nginx {#ingress-nginx}

Here is a simple example where an Ingress sends all its traffic to one Service:

Installing ingress-nginx on minikube

$ minikube addons enable ingress

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.43.0/deploy/static/provider/baremetal/deploy.yaml

Verify the installation

$ kubectl get pods -n ingress-nginx

Ingress HTTP Proxy Access {#ingress-http代理访问}

Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dm
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: wangyanglinux/myapp:v1
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80

Service

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    name: nginx
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP

Ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-test
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: www1.guqing.xyz
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-svc
                port:
                  number: 80

Walkthrough

$ minikube addons enable ingress
* The 'ingress' addon is enabled
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.43.0/deploy/static/provider/baremetal/deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
configmap/ingress-nginx-controller created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
$ kubectl get pods -n ingress-nginx
NAME READY STATUS RESTARTS AGE
ingress-nginx-admission-create-58mz2 0/1 ContainerCreating 0 11s
ingress-nginx-admission-patch-s9c2c 0/1 ContainerCreating 0 11s
ingress-nginx-controller-697b57b496-hj9cb 0/1 ContainerCreating 0 12s
# Create the deployment
$ kubectl apply -f deployment.yaml
# Create the svc
$ kubectl apply -f svc.yaml
service/nginx-svc created
$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4m47s
nginx-svc ClusterIP 10.103.242.217 <none> 80/TCP 2m41s
# Create the ingress
$ kubectl apply -f ingress.yaml
$ kubectl get svc -n ingress-nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
ingress-nginx-controller NodePort 10.111.21.135 <none> 80:30793/TCP,443:30261/TCP 7m10s
ingress-nginx-controller-admission ClusterIP 10.102.127.39 <none> 443/TCP 7m10s
# Look up the access URL
$ minikube service -n ingress-nginx ingress-nginx-controller --url
http://172.17.0.16:30162
http://172.17.0.16:30747
$ vim /etc/hosts
# Add a record that points the Ingress host name at the node
# 172.17.0.16 www1.guqing.xyz
$ curl www1.guqing.xyz
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

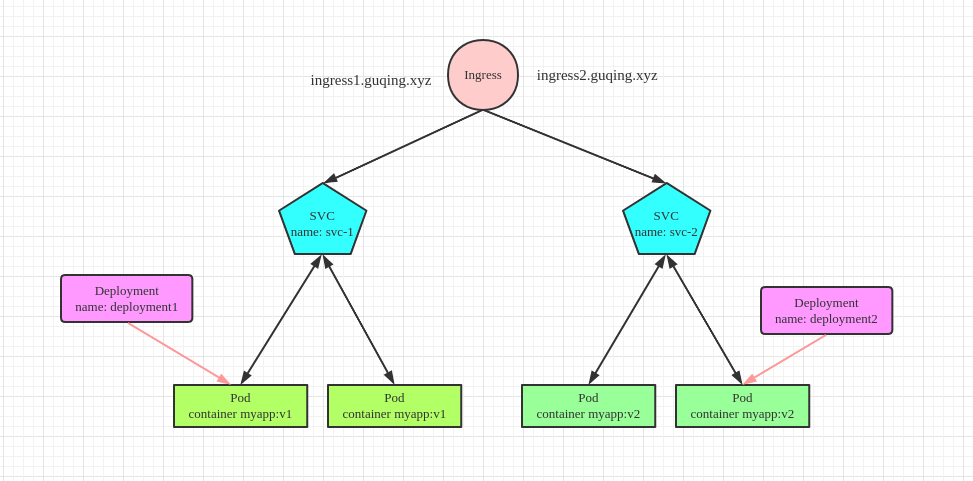

Ingress Host-Based Routing Example {#ingress域名代理访问示例}

The following steps are run in the Minikube terminal provided by Kubernetes

minikube version: v1.8.1

The structure is shown below:

- Enable the ingress addon

$ minikube addons enable ingress

* The 'ingress' addon is enabled

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.43.0/deploy/static/provider/baremetal/deploy.yaml

namespace/ingress-nginx created
serviceaccount/ingress-nginx created
configmap/ingress-nginx-controller created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
service/ingress-nginx-controller-admission created
service/ingress-nginx-controller created
deployment.apps/ingress-nginx-controller created

- Create two Deployments, each with a matching Service

deployment-svc-1.yaml uses the label name=nginx1 and the container name nginx1, and is bound to svc-1

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment1
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx1
  template:
    metadata:
      labels:
        name: nginx1
    spec:
      containers:
        - name: nginx1
          # Uses the v1 image
          image: wangyanglinux/myapp:v1
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc-1
spec:
  selector:
    name: nginx1
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP

deployment-svc-2.yaml uses the label name=nginx2 and the container name nginx2, and is bound to svc-2

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment2
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx2
  template:
    metadata:
      labels:
        name: nginx2
    spec:
      containers:
        - name: nginx2
          # Uses the v2 image
          image: wangyanglinux/myapp:v2
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc-2
spec:
  selector:
    name: nginx2
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP

Create them

$ kubectl apply -f deployment-svc-1.yaml
$ kubectl apply -f deployment-svc-2.yaml

Verify that the result is as expected

$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 31m
svc-1 ClusterIP 10.98.45.49 <none> 80/TCP 23m
svc-2 ClusterIP 10.98.55.46 <none> 80/TCP 20m
# Access svc-1
$ curl 10.98.45.49
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
# Access svc-2
$ curl 10.98.55.46
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

- Create the Ingress rules (ingress-rules.yaml): ingress-1 is bound to svc-1 and ingress-2 is bound to svc-2

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-1
spec:
  rules:
    - host: ingress1.guqing.xyz
      http:
        paths:
          - path: /
            backend:
              serviceName: svc-1
              servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-2
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: ingress2.guqing.xyz
      http:
        paths:
          - path: /
            backend:
              serviceName: svc-2
              servicePort: 80

Create them

$ kubectl apply -f ingress-rules.yaml

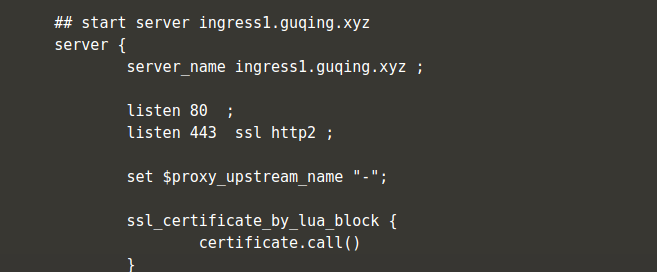

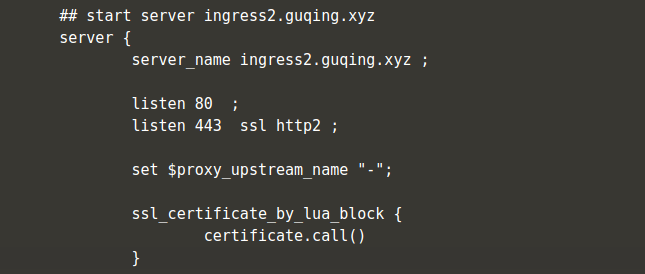

Open a shell in the ingress controller container and inspect the nginx configuration

$ kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-fw2r6 0/1 Completed 0 38m

ingress-nginx-admission-patch-rsh8f 0/1 Completed 0 38m

ingress-nginx-controller-697b57b496-r8vpk 1/1 Running 0 38m

$ kubectl exec ingress-nginx-controller-697b57b496-r8vpk -n ingress-nginx -it -- /bin/bash

bash-5.0$ cat /etc/nginx/nginx.conf

The nginx configuration file now contains automatically generated entries for the two hosts defined in ingress-rules.yaml

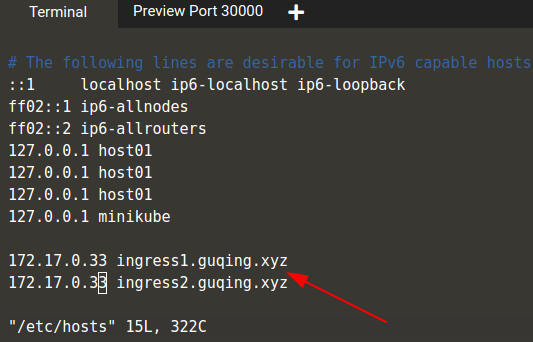

- Configure the hosts file to simulate domain access

$ kubectl get ingress
NAME HOSTS ADDRESS PORTS AGE
ingress-1 ingress1.guqing.xyz 172.17.0.33 80 4m1s
ingress-2 ingress2.guqing.xyz 172.17.0.33 80 4m1s
$ vim /etc/hosts

Look up the access port

$ kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.110.79.62 <none> 80:31394/TCP,443:30567/TCP 4m1s

ingress-nginx-controller-admission ClusterIP 10.110.32.131 <none> 443/TCP 4m1s

Access the hosts

$ curl ingress1.guqing.xyz:31394
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
$ curl ingress2.guqing.xyz:31394
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Ingress HTTPS Proxy Access {#ingress-https代理访问}

Create a certificate and store it as a TLS Secret

$ openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"
$ kubectl create secret tls tls-secret --key tls.key --cert tls.crt

Ingress configuration example

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-3
spec:
  tls:
    - hosts:
        - foo.bar.com
      # Must match the name of the TLS Secret created above
      secretName: tls-secret
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            backend:
              serviceName: svc-3
              servicePort: 80
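The v1beta1 manifests in this section match the cluster version used in these notes. On clusters where only networking.k8s.io/v1 is served, the same TLS rule would look roughly like the sketch below, which follows the v1 layout already used in the nginx-test example earlier; pathType: Prefix is an assumption about the intended matching.

```yaml
# Hypothetical networking.k8s.io/v1 equivalent of ingress-3.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-3
spec:
  tls:
    - hosts:
        - foo.bar.com
      secretName: tls-secret
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix        # required in v1 (assumed choice)
            backend:
              service:
                name: svc-3
                port:
                  number: 80
```

The main differences are the mandatory pathType field and the nested service block that replaces serviceName and servicePort.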

Nginx Basic Auth {#nginx-basic-auth}

$ yum -y install httpd
# Create a file named auth with the user name foo
$ htpasswd -c auth foo
$ kubectl create secret generic basic-auth --from-file=auth

Create the Ingress YAML

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-with-auth
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required - foo"
spec:
  rules:
    - host: foo2.bar.com
      http:
        paths:
          - path: /
            backend:
              serviceName: svc-4
              servicePort: 80

Nginx Rewrite {#nginx重写}

| Name | Description | Value |
|------|-------------|-------|
| nginx.ingress.kubernetes.io/rewrite-target | Target URL to which traffic must be redirected | string |
| nginx.ingress.kubernetes.io/ssl-redirect | Whether the location section is accessible over SSL only (defaults to True when the Ingress contains a certificate) | bool |
| nginx.ingress.kubernetes.io/force-ssl-redirect | Forces redirection to HTTPS even if the Ingress does not have TLS enabled | bool |
| nginx.ingress.kubernetes.io/app-root | Defines the application root that the controller must redirect to if the request is in the / context | string |
| nginx.ingress.kubernetes.io/use-regex | Indicates whether the paths defined on the Ingress use regular expressions | bool |

Example

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-rewrite
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: http://foo.bar.com:31795/hostname.html
spec:
  rules:
    - host: foo3.bar.com
      http:
        paths:
          - path: /
            backend:
              serviceName: svc-5
              servicePort: 80
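The rewrite-target and use-regex annotations from the table are often combined with capture groups in the path. The sketch below illustrates that pattern; the Ingress name, the host foo4.bar.com, and the /app prefix are hypothetical, and svc-5 is reused from the example above only for illustration.

```yaml
# Hypothetical Ingress that strips a path prefix before proxying.
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-rewrite-regex      # illustrative name
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 refers to the second capture group of the path below
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  rules:
    - host: foo4.bar.com           # assumed host
      http:
        paths:
          # /app/hostname.html is forwarded to the backend as /hostname.html
          - path: /app(/|$)(.*)
            backend:
              serviceName: svc-5
              servicePort: 80
```

Annotation values must be strings, which is why "true" is quoted.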

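Storage {#存储机制}

Config Map {#config-map}

ConfigMap was introduced in Kubernetes 1.2. Many applications read their configuration from config files, command-line arguments, or environment variables. The ConfigMap API provides a mechanism for injecting configuration into containers. A ConfigMap can hold individual properties as well as entire configuration files or JSON blobs

Creating a ConfigMap {#configmap的创建}

1. Create from a directory {#1使用目录创建}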

$ ls docs/user-guide/configmap/kubectl/

game.properties

ui.properties

$ cat docs/user-guide/configmap/kubectl/game.properties

enemies=aliens

lives=3

enemies.cheat=true

enemies.cheat.level=noGoodRotten

secret.code.passphrase=UUDDLRLRBABAS

secret.code.allowed=true

secret.code.lives=30

$ cat docs/user-guide/configmap/kubectl/ui.properties

color.good=purple

color.bad=yellow

allow.textmode=true

how.nice.to.look=fairlyNice

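$ kubectl create configmap game-config --from-file=docs/user-guide/configmap/kubectl

When --from-file points at a directory, every file in that directory becomes a key-value pair in the ConfigMap: the key is the file name and the value is the file content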

$ kubectl get configmap

NAME DATA AGE

game-config 2 30s

kube-root-ca.crt 1 7d22h

The details look like this (cm is short for configmap)

$ kubectl get cm game-config -o yaml

apiVersion: v1
data:
  game.properties: |
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30
  ui.properties: |
    color.good=purple
    color.bad=yellow
    allow.textmode=true
    how.nice.to.look=fairlyNice
kind: ConfigMap
metadata:
  creationTimestamp: "2021-01-27T06:57:37Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:game.properties: {}
        f:ui.properties: {}
    manager: kubectl-create
    operation: Update
    time: "2021-01-27T06:57:37Z"
  name: game-config
  namespace: default
  resourceVersion: "513536"
  uid: 74e9713b-8b5f-4512-a348-8691eb5afaca

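2. Create from a file {#2-使用文件创建}

Specifying a single file creates a ConfigMap from just that file

$ kubectl create configmap game-config-2 --from-file=docs/user-guide/configmap/kubectl/game.properties
$ kubectl get configmaps game-config-2 -o yaml

The --from-file flag can be given multiple times. For example, passing it twice with the two configuration files from the previous example has the same effect as specifying the whole directory

3. Create from literal values {#3使用字面值创建}

Use the --from-literal flag to pass configuration values directly. The flag can be given multiple times, in the following form:

$ kubectl create configmap special-config --from-literal=special.how=very --from-literal=special.type=charm
$ kubectl get configmaps special-config -o yaml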

Using a ConfigMap in a Pod {#pod中使用configmap}

1. Use a ConfigMap to populate environment variables {#1使用configmap来替代环境变量}

apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: env-config
  namespace: default
data:
  log_level: INFO
---
apiVersion: v1
kind: Pod
metadata:
  name: dapi-test-pod
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      command: ["/bin/sh", "-c", "env"]
      env:
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.how
        - name: SPECIAL_TYPE_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.type
      envFrom:
        - configMapRef:
            name: env-config
  restartPolicy: Never

The Pod log looks like this

$ kubectl logs dapi-test-pod

KUBERNETES_SERVICE_PORT=443

KUBERNETES_PORT=tcp://10.96.0.1:443

NGINX_SVC_SERVICE_HOST=10.102.29.151

HOSTNAME=dapi-test-pod

HOME=/root

NGINX_SVC_PORT=tcp://10.102.29.151:80

NGINX_SVC_SERVICE_PORT=80

PKG_RELEASE=1~buster

# special.type=charm

SPECIAL_TYPE_KEY=charm

NGINX_SVC_PORT_80_TCP_ADDR=10.102.29.151

NGINX_SVC_PORT_80_TCP_PORT=80

NGINX_SVC_PORT_80_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

NGINX_VERSION=1.19.6

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

KUBERNETES_PORT_443_TCP_PORT=443

NJS_VERSION=0.5.0

KUBERNETES_PORT_443_TCP_PROTO=tcp

NGINX_SVC_PORT_80_TCP=tcp://10.102.29.151:80

# special.how=very

SPECIAL_LEVEL_KEY=very

# log_level=INFO

log_level=INFO

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_HOST=10.96.0.1

PWD=/

2. Use a ConfigMap to set command-line arguments {#2用configmap设置命令行参数}

apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-test-pod
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      command: ["/bin/sh", "-c", "echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)"]
      env:
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.how
        - name: SPECIAL_TYPE_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.type
  restartPolicy: Never

The log looks like this

$ kubectl logs configmap-test-pod

very charm

3. Use a ConfigMap through a volume {#3通过数据卷插件使用configmap}

apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  special.how: very
  special.type: charm

There are several options for using this ConfigMap in a volume. The most basic one is to populate the volume with files, where each key is a file name and its value is the file content

apiVersion: v1
kind: Pod
metadata:
  name: configmap-volume-pod
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      command: ["/bin/sh", "-c", "ls /etc/config/ && cat /etc/config/special.how"]
      volumeMounts:
        - name: config-volume
          mountPath: /etc/config
  volumes:
    - name: config-volume
      configMap:
        name: special-config
  restartPolicy: Never

The Pod log looks like this

$ kubectl logs configmap-volume-pod
# Two files
special.how
special.type
# Content of special.how
very
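One of the other volume options is to project only selected keys, and to choose the file path for each. The sketch below assumes the special-config ConfigMap from above; the Pod name and the path keys/how.txt are illustrative.

```yaml
# Hypothetical Pod that mounts a single ConfigMap key under a custom path.
apiVersion: v1
kind: Pod
metadata:
  name: configmap-items-pod      # illustrative name
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      command: ["/bin/sh", "-c", "cat /etc/config/keys/how.txt"]
      volumeMounts:
        - name: config-volume
          mountPath: /etc/config
  volumes:
    - name: config-volume
      configMap:
        name: special-config
        # Only the listed keys are projected into the volume
        items:
          - key: special.how
            path: keys/how.txt   # assumed relative path
  restartPolicy: Never
```

With items set, special.type is not written to the volume at all, since only the listed keys are projected.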

ConfigMap Hot Update {#configmap热更新}

apiVersion: v1
kind: ConfigMap
metadata:
  name: log-config
  namespace: default
data:
  log_level: INFO
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      run: my-nginx
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
        - name: my-nginx
          image: nginx:1.19.6
          ports:
            - containerPort: 80
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
      volumes:
        - name: config-volume
          configMap:
            name: log-config

After creating them, check the content

$ kubectl get pods
NAME READY STATUS RESTARTS AGE
my-nginx-854969cf95-fmmp9 1/1 Running 0 23s
$ kubectl exec my-nginx-854969cf95-fmmp9 -it -- cat /etc/config/log_level
# The result is INFO
INFO

Now modify the ConfigMap

$ kubectl edit configmap log-config

Change the value of log_level to DEBUG, wait about 10 seconds, and read the mounted file again

$ kubectl exec my-nginx-854969cf95-fmmp9 -it -- cat /etc/config/log_level
# The value has changed to DEBUG
DEBUG

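Secret {#secret}

A Secret solves the configuration problem for sensitive data such as passwords, tokens, and keys, without exposing that data in an image or in the Pod spec. A Secret can be consumed as a Volume or as environment variables

There are three types of Secret:

- Service Account: used to access the Kubernetes API. It is created automatically by Kubernetes and mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount

- Opaque: a Secret in base64-encoded form, used to store passwords, keys, and so on

- kubernetes.io/dockerconfigjson: used to store the credentials for a private Docker registry

Service Account {#service-account}

A Service Account is used to access the Kubernetes API. It is created automatically by Kubernetes and mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount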

Opaque Secret {#opaque-secret}

- Creation

The data of an Opaque Secret is a map whose values must be base64 encoded

$ echo -n "admin" | base64
YWRtaW4=
$ echo -n "1f2d1e2e67df" | base64
MWYyZDFlMmU2N2Rm

secrets.yaml

apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: YWRtaW4=
  password: MWYyZDFlMmU2N2Rm
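If encoding the values by hand is inconvenient, the Secret API also accepts plain text through the stringData field, which the API server encodes on write. The sketch below mirrors secrets.yaml under that assumption; the name mysecret-plain is hypothetical.

```yaml
# Hypothetical Secret written with plain-text values.
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-plain       # illustrative name
type: Opaque
stringData:
  # Stored base64-encoded under .data after creation
  username: admin
  password: "1f2d1e2e67df"
```

Reading the object back with kubectl get secret -o yaml shows the values under data in base64 form, the same as in the hand-encoded variant.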

Walkthrough

$ vim secrets.yaml
# Fill in the secrets.yaml content shown above
$ kubectl apply -f secrets.yaml
secret/mysecret created
$ kubectl get secret
NAME TYPE DATA AGE
default-token-kxgcl kubernetes.io/service-account-token 3 8d
mysecret Opaque

A default-token Secret exists by default: Kubernetes creates a ServiceAccount (SA) in every namespace for Pods to mount

- Usage

Option 1: mount the Secret as a Volume

apiVersion: v1
kind: Pod
metadata:
  name: secret-test
  labels:
    name: secret-test
spec:
  volumes:
    - name: secrets
      secret:
        secretName: mysecret
  containers:
    - name: db
      image: nginx:1.19.6
      volumeMounts:
        - name: secrets
          mountPath: /etc/secrets
          readOnly: true

Example run

$ kubectl get pods
NAME READY STATUS RESTARTS AGE
secret-test 1/1 Running 0 6s
$ kubectl exec secret-test -it -- /bin/sh
> ls
password username
> cat username
admin
> cat password
1f2d1e2e67df

Option 2: expose the Secret as environment variables

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pod-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: pod-deployment
  template:
    metadata:
      labels:
        app: pod-deployment
    spec:
      containers:
        - name: pod-1
          image: nginx:1.19.6
          ports:
            - containerPort: 80
          env:
            - name: TEST_USER
              valueFrom:
                secretKeyRef:
                  name: mysecret
                  key: username
            - name: TEST_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysecret
                  key: password

Example run:

$ kubectl apply -f pod-deployment.yaml
deployment.apps/pod-deployment created
$ kubectl get pods
NAME READY STATUS RESTARTS AGE
pod-deployment-6b988699d-ql596 1/1 Running 0 3s
pod-deployment-6b988699d-vwtcw 1/1 Running 0 3s
$ kubectl exec pod-deployment-6b988699d-ql596 -it -- /bin/sh
> echo $TEST_USER
admin
> echo $TEST_PASSWORD
1f2d1e2e67df

kubernetes.io/dockerconfigjson {#kubernetesiodockerconfigjson}

Use kubectl to create a Secret that holds Docker registry credentials

$ kubectl create secret docker-registry myregistrykey --docker-server=DOCKER_REGISTRY_SERVER --docker-username=DOCKERUSER --docker-password=DOCKER_PASSWORD --docker-email=DOCKER_EMAIL
secret "myregistrykey" created

When creating a Pod, reference the newly created myregistrykey through imagePullSecrets

apiVersion: v1
kind: Pod
metadata:
  name: foo
spec:
  containers:
    - name: foo
      # Private image
      image: guching/nginx:1.19.6
  imagePullSecrets:
    - name: myregistrykey

Example using the hub.docker.com registry

$ kubectl create secret docker-registry myregistrykey --docker-username=guching --docker-password=xxx
secret/myregistrykey created
# foo-pod.yaml has the content shown above
$ kubectl create -f foo-pod.yaml
pod/foo created
$ kubectl get pods -w
NAME READY STATUS RESTARTS AGE
foo 0/1 ContainerCreating 0 3s
# The pull failed once
foo 0/1 ErrImagePull 0 34s
# Running successfully
foo 1/1 Running 0 2m36s

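Volume {#volume}

Files on a container's disk are ephemeral, which causes problems for important applications running in containers. First, when a container crashes, the kubelet restarts it, but the files in the container are lost: the container restarts in a clean state (the original state of the image). Second, when several containers run together in a Pod, they often need to share files. The Kubernetes Volume abstraction solves both problems.

Background {#背景}

A Kubernetes volume has an explicit lifetime, the same as the Pod that encloses it. A volume therefore outlives any container that runs in the Pod, and its data is preserved across container restarts. When the Pod ceases to exist, the volume ceases to exist as well. Perhaps more importantly, Kubernetes supports many types of volumes, and a Pod can use any number of them at the same time

Volume Types {#卷的类型}

Kubernetes supports the following volume types:

awsElasticBlockStore, azureDisk, azureFile, cephfs, csi, downwardAPI, emptyDir, fc, flocker, gcePersistentDisk, gitRepo, glusterfs, hostPath, iscsi, nfs, persistentVolumeClaim, projected, portworxVolume, quobyte, rbd, scaleIO, secret, storageos, vsphereVolume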

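emptyDir {#emptydir}

An emptyDir volume is first created when a Pod is assigned to a node, and it exists for as long as that Pod runs on that node. As the name says, it is initially empty. The containers in the Pod can all read and write the same files in the emptyDir volume, although the volume can be mounted at the same or different paths in each container. When a Pod is removed from a node for any reason, the data in the emptyDir is deleted permanently. (Note: a container crash does not remove the Pod from the node, so the data in an emptyDir volume is safe across container crashes.)

Uses of emptyDir include:

- Scratch space, for example for a disk-based merge sort

- Checkpointing a long computation for recovery from crashes

- Holding files that a content-manager container fetches while a web server container serves the data

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      volumeMounts:
        - mountPath: /cache
          # Mount the cache-volume volume
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir: {}

Different containers can use different mountPath values that point at the same volume, for example

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
    - name: busybox
      image: busybox:1.32
      imagePullPolicy: IfNotPresent
      command: ["/bin/sh", "-c", "sleep 3600s"]
      volumeMounts:
        - mountPath: /test
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir: {}

Here test-container mounts the volume at /cache and busybox mounts it at /test. Both paths point at the same volume, cache-volume, so the content is shared and only the paths differ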
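For scratch data that should live in memory instead of on the node's disk, an emptyDir can be backed by tmpfs. This is a minimal sketch; the Pod name and the 64Mi limit are assumptions chosen for illustration.

```yaml
# Hypothetical Pod with a memory-backed emptyDir.
apiVersion: v1
kind: Pod
metadata:
  name: test-pod-memory      # illustrative name
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir:
        medium: Memory       # tmpfs instead of node disk
        sizeLimit: 64Mi      # assumed upper bound
```

Files written to a memory-backed volume count against the container's memory usage, so the size limit is worth setting deliberately.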

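hostPath {#hostpath}

A hostPath volume mounts a file or directory from the host node's filesystem into the Pod

Uses of hostPath include:

- Running a container that needs access to Docker internals: use a hostPath of /var/lib/docker

- Running cAdvisor in a container: use a hostPath of /dev/cgroups

In addition to the required path property, a type can be specified for a hostPath volume

| Value | Behavior |
|-------|----------|
| | Empty string (default), kept for backward compatibility; no checks are performed before mounting the hostPath volume |
| DirectoryOrCreate | If nothing exists at the given path, an empty directory is created there as needed, with permission 0755 and the same group and ownership as the kubelet |
| Directory | A directory must exist at the given path |
| FileOrCreate | If nothing exists at the given path, an empty file is created there as needed, with permission 0644 and the same group and ownership as the kubelet |
| File | A file must exist at the given path |
| Socket | A UNIX socket must exist at the given path |
| CharDevice | A character device must exist at the given path |
| BlockDevice | A block device must exist at the given path |

Be careful when using this type of volume:

- Pods with identical configuration (for example, created from a PodTemplate) may behave differently on different nodes, because the files on each node differ

- When Kubernetes adds resource-aware scheduling as planned, it will not be able to account for the resources used by a hostPath

- Files or directories created on the underlying host are writable only by root. Either run the process as root in a privileged container, or modify the file permissions on the host so that the hostPath volume can be written to

apiVersion: v1
kind: Pod
metadata:
  name: test-pod-1
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
  volumes:
    - name: cache-volume
      hostPath:
        # Directory path on the host
        path: /data
        # Type, optional
        type: Directory

Note: when minikube runs with driver=docker, hostPath.path refers to a path inside the minikube container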
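The Directory type in the example above requires /data to exist already. When the directory may be missing, DirectoryOrCreate from the table can be used instead, as in this sketch; the Pod name and the path /data/cache are hypothetical.

```yaml
# Hypothetical Pod whose hostPath directory is created on demand.
apiVersion: v1
kind: Pod
metadata:
  name: test-pod-2             # illustrative name
spec:
  containers:
    - name: test-container
      image: nginx:1.19.6
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
  volumes:
    - name: cache-volume
      hostPath:
        path: /data/cache      # assumed path on the node
        # Create an empty directory if nothing exists at the path
        type: DirectoryOrCreate
```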

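Persistent Volume {#persistent-volume}

A Persistent Volume (PV) is storage set up by an administrator. It is part of the cluster: just as a node is a cluster resource, a PV is a cluster resource too. PVs are volume plugins like Volumes, but they have a lifecycle independent of any Pod that uses the PV. This API object captures the details of the storage implementation, be it NFS, iSCSI, or a cloud-provider-specific storage system

PersistentVolumeClaim

A PersistentVolumeClaim (PVC) is a request for storage by a user. It is similar to a Pod: Pods consume node resources and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory); claims can request a specific size and access modes (for example, mounted once read/write or many times read-only)

Static PV

A cluster administrator creates a number of PVs. They carry the details of the real storage that is available to cluster users. They exist in the Kubernetes API and are available for consumption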
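A static PV and a claim that binds to it could look roughly like the following. This is a sketch under stated assumptions: the names pv-demo and pvc-demo, the storage class name manual, the sizes, and the hostPath backing are all chosen for illustration and suit a single-node test cluster only.

```yaml
# Hypothetical statically provisioned PV backed by a host directory.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-demo                  # illustrative name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: manual       # assumed class name
  hostPath:
    path: /data/pv-demo          # assumed path on the node
---
# Hypothetical claim; binds to a PV with a matching class,
# a compatible access mode, and enough capacity.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-demo                 # illustrative name
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
```

A Pod would then reference the claim through a persistentVolumeClaim volume with claimName: pvc-demo.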

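Dynamic

When none of the static PVs created by the administrator matches a user's PersistentVolumeClaim, the cluster may try to provision a volume dynamically for the PVC. This provisioning is based on StorageClasses: the PVC must request a [storage class], and the administrator must have created and configured that class for dynamic provisioning to take place. A claim that requests the class "" effectively disables dynamic provisioning for itself

To enable dynamic storage provisioning based on storage class, the cluster administrator needs to enable the DefaultStorageClass [admission controller] on the API server, for example by making sure that DefaultStorageClass is among the comma-separated values of the --admission-control flag of the API server component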
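The class-based flow described above can be sketched with a StorageClass and a claim that requests it. All names here are illustrative, and the provisioner value is an assumption: it is the host-path provisioner that minikube is understood to ship, and other clusters would use their own.

```yaml
# Hypothetical StorageClass for dynamic provisioning.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-demo                       # illustrative name
provisioner: k8s.io/minikube-hostpath   # assumed provisioner
reclaimPolicy: Delete
volumeBindingMode: Immediate
---
# Hypothetical claim; a PV is created for it on demand.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-dynamic-demo                # illustrative name
spec:
  storageClassName: fast-demo
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Setting storageClassName to "" in a claim would instead opt that claim out of dynamic provisioning, as noted above.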
