PySpark - create our own Python package

Question {#heading}


In Synapse, I have a separate notebook that contains all the functions I need to run in other notebooks.

When I create a new notebook, I put the following in the first cell to make all of those functions available:

```
%run ETL Functions
```

For example, the ETL notebook might contain this function:

```python
def calculate_square(x):
    return x ** 2
```

I want to create a package that contains, for example, this function, so that I can then do this:

```python
from test_package import functions

result = functions.calculate_square(5)
print(result)  # Output: 25
```

That way, I would not need to run the ETL Functions notebook every time I need a specific function.

My idea, though I don't know whether it is possible, is to keep the ETL Functions notebook and have the functions in it copied into a package that is attached to the cluster. If I need to add another function, I would just open the notebook, add the function, and run the notebook on its own, and the function would automatically become available in the package. I could then define different clusters and use the same package on each of them.

I'm open to other ideas, though. I came up with this one mainly because I think it would make version control and promotion to different environments easier.

Does anyone have similar experience?

Thanks a lot!

Answer 1 {#1}

Score: 1

使用仅有的两个笔记本,你无法创建包的功能,但你可以编写自定义函数到一个 .py 文件中,然后与这两个笔记本一起导入。

你可以按照以下步骤进行操作。

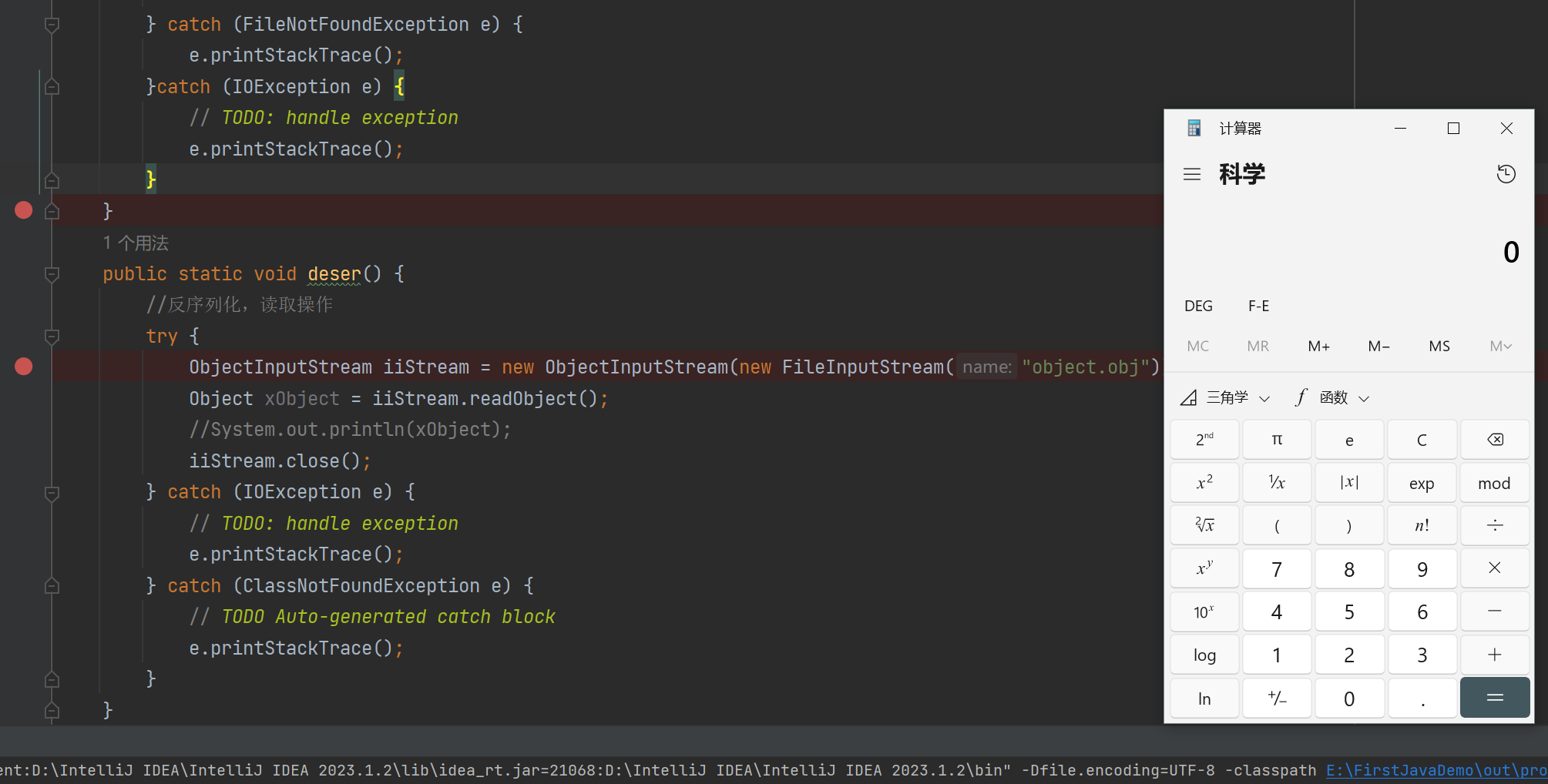

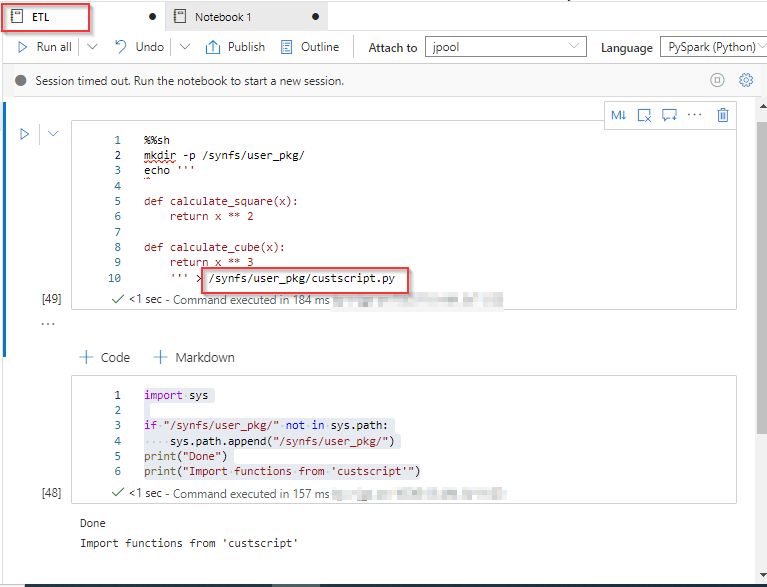

首先,在ETL笔记本中添加以下代码块。

%%sh

mkdir -p /synfs/user_pkg/

echo '''

def calculate_square(x):

return x ** 2

def calculate_cube(x):

return x ** 3

''' > /synfs/user_pkg/custscript.py

在这里,我将这些函数添加到 Synapse 文件系统中。

然后,通过将此路径添加到系统中,你可以导入这些函数。

以下是用于添加到系统路径的代码。

import sys

if "/synfs/user_pkg/" not in sys.path:

sys.path.append("/synfs/user_pkg/")

print("Done")

print("Import functions from 'custscript'")

在ETL笔记本中按照以下方式添加上述代码块。

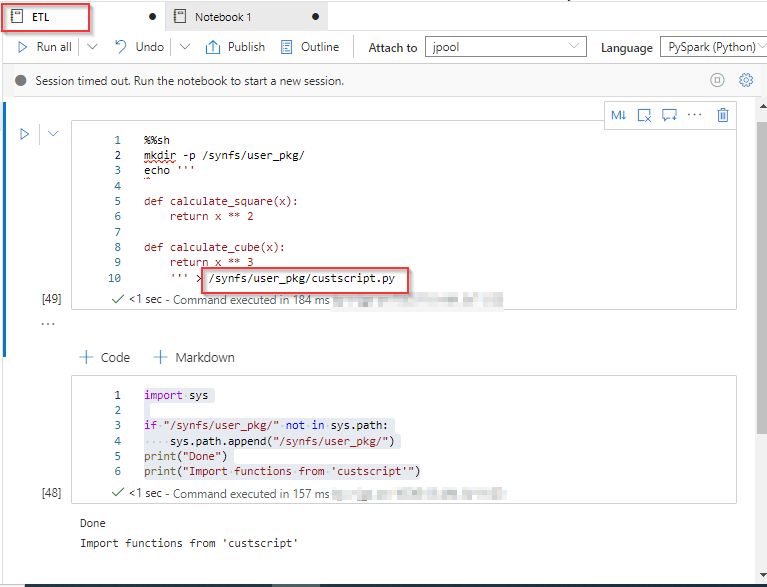

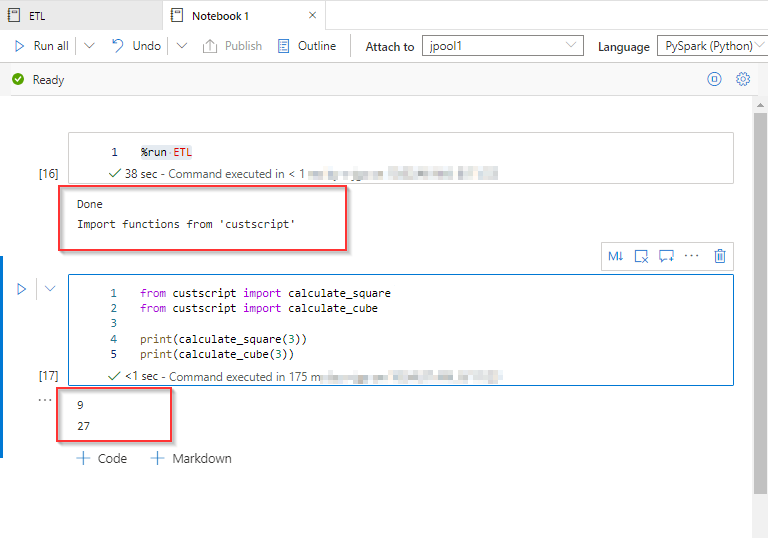

然后只需从另一个笔记本中调用一次。

%run ETL

和

from custscript import calculate_square

from custscript import calculate_cube

print(calculate_square(3))

print(calculate_cube(3))

输出:

如果你想要插入任何其他函数,请在ETL 笔记本的echo命令中添加该函数并运行一次。

英文:

With using only 2 notebook you cannot create package kind of functionality, but what you can do is write your custom functions to some .py file along with these 2 notebooks and import it.

You can follow below steps for it.

First, in ETL notebook add below code blocks.

```
%%sh
mkdir -p /synfs/user_pkg/
echo '''
def calculate_square(x):
    return x ** 2

def calculate_cube(x):
    return x ** 3
''' > /synfs/user_pkg/custscript.py
```

Here, I am writing these functions to a file on the Synapse file system.

Once this directory is on Python's module search path (`sys.path`), you can import the functions.

The following code adds it to `sys.path`.
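The same file could also be written from a Python cell rather than a shell cell. This is only a minimal sketch: it uses a temporary directory as a stand-in for `/synfs/user_pkg/` (an assumption so the snippet runs anywhere), and whether that Synapse path is writable from a Python cell in your session is something to verify.

```python
import tempfile
import textwrap
from pathlib import Path

# Stand-in for /synfs/user_pkg/ (assumption: replace with the real directory in Synapse).
pkg_dir = Path(tempfile.mkdtemp())

# Source of the helper module, identical to what the echo command writes.
source = textwrap.dedent(
    """
    def calculate_square(x):
        return x ** 2


    def calculate_cube(x):
        return x ** 3
    """
)

module_path = pkg_dir / "custscript.py"
module_path.write_text(source)

# Show what was written so the cell output can be checked by eye.
print(module_path.read_text())
```

Writing the file from Python avoids shell quoting issues if a function body ever contains single quotes.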

```python
import sys

# Add the directory only once, even if the notebook is run repeatedly.
if "/synfs/user_pkg/" not in sys.path:
    sys.path.append("/synfs/user_pkg/")
    print("Done")

print("Import functions from 'custscript'")
```

Add above code blocks as below in ETL notebook.

Then call it from another notebook only once.

%run ETL

and

```python
from custscript import calculate_square
from custscript import calculate_cube

print(calculate_square(3))
print(calculate_cube(3))
```

Output: `9` and `27`, each on its own line.
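The import style from the question (`from test_package import functions`) can probably be approximated with the same `sys.path` approach by writing a package directory instead of a single file. The sketch below is an illustration under assumptions, not a tested Synapse setup: a temporary directory stands in for `/synfs/user_pkg/`, and the `test_package` and `functions` names are taken from the question.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Stand-in for /synfs/user_pkg/ (assumption: use the real directory in Synapse).
base_dir = Path(tempfile.mkdtemp())

# A package is a directory with an __init__.py; functions.py is one module in it.
package_dir = base_dir / "test_package"
package_dir.mkdir()
(package_dir / "__init__.py").write_text("")
(package_dir / "functions.py").write_text(
    "def calculate_square(x):\n    return x ** 2\n"
)

# Put the parent directory (not the package directory itself) on sys.path.
if str(base_dir) not in sys.path:
    sys.path.append(str(base_dir))
importlib.invalidate_caches()

from test_package import functions

result = functions.calculate_square(5)
print(result)  # 25
```

This still is not an installed package attached to the pool; it only gives the same import syntax within a session that has the directory on `sys.path`.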

If you want to add another function, add it inside the echo command in the ETL notebook and run the notebook once.
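One caveat worth checking: Python caches imported modules, so a session that has already imported `custscript` will likely not see a newly added function until the module is reloaded or the session is restarted. The sketch below shows that reload step in isolation. It uses a temporary directory as a stand-in for `/synfs/user_pkg/` and a hypothetical module name, `custscript_demo`, so it does not clash with the real file.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Stand-in for /synfs/user_pkg/ (assumption), with a hypothetical module name.
pkg_dir = Path(tempfile.mkdtemp())
module_file = pkg_dir / "custscript_demo.py"
module_file.write_text("def calculate_square(x):\n    return x ** 2\n")

sys.path.append(str(pkg_dir))
importlib.invalidate_caches()

import custscript_demo

# The first version of the module has no calculate_cube yet.
has_cube_before = hasattr(custscript_demo, "calculate_cube")
print(has_cube_before)  # False

# Simulate re-running the ETL notebook with one more function in the file.
with module_file.open("a") as handle:
    handle.write("\n\ndef calculate_cube(x):\n    return x ** 3\n")

# Re-execute the module source so the already imported module picks up the change.
importlib.reload(custscript_demo)
print(custscript_demo.calculate_cube(3))  # 27
```

Names imported earlier with `from custscript import ...` keep pointing at the old objects, so those import statements need to be run again after the reload.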
