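To start with the conclusion of this new approach: whatever the CPU architecture or operating system, anything that can be installed online can also be installed offline.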

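While adapting this setup to ARM Kylin, I drew on a related post from the WeChat official account 运维有术 and borrowed its article layout: "ARM 版 Kylin V10 部署 KubeSphere v3.4.0 不完全指南" (An Incomplete Guide to Deploying KubeSphere v3.4.0 on ARM Kylin V10).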

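This article explains in detail how to use KubeKey on Kunpeng CPUs (arm64) running Kylin V10 SP2/SP3 to build an offline installation package for KubeSphere and Kubernetes. It then walks through a real deployment of a KubeSphere 3.3.1 and Kubernetes 1.22.12 cluster.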

Server configuration

| Hostname | IP            | CPU         | OS            | Role                                                  |
|----------|---------------|-------------|---------------|-------------------------------------------------------|
| master-1 | 192.168.10.2  | Kunpeng-920 | Kylin V10 SP2 | Offline environment: KubeSphere / k8s master          |
| master-2 | 192.168.10.3  | Kunpeng-920 | Kylin V10 SP2 | Offline environment: KubeSphere / k8s master          |
| master-3 | 192.168.10.4  | Kunpeng-920 | Kylin V10 SP2 | Offline environment: KubeSphere / k8s master          |
| deploy   | 192.168.200.7 | Kunpeng-920 | Kylin V10 SP3 | Internet-connected host for building the offline package |

Software versions used in this environment

- Server CPU: Kunpeng-920
- Operating system: Kylin V10 SP2/SP3 aarch64
- Docker: 24.0.7
- Harbor: v2.7.1
- KubeSphere: v3.3.1
- Kubernetes: v1.22.12
- KubeKey: v2.3.1

About this article

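This article shows how to build the installation artifacts for a KubeSphere and Kubernetes cluster on Kylin V10 aarch64 servers and then deploy that cluster offline. The KubeKey tool, developed by KubeSphere, automates the deployment. The result is a minimal, highly available Kubernetes and KubeSphere installation across three servers.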

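Deploying KubeSphere and Kubernetes on ARM differs from deploying on x86 mainly in one way: every service's container image must be built for the right architecture. Out of the box, the open-source edition of KubeSphere supports KubeSphere-Core on ARM, meaning a minimal KubeSphere plus a complete Kubernetes cluster. Once pluggable KubeSphere components are enabled, some of them may fail to deploy. Those components need their images replaced by hand with ARM images from official or third-party sources, or rebuilt as ARM images from the official source code. For an out-of-the-box experience and more technical support, you need to purchase the enterprise edition of KubeSphere.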

1.1 Confirm the operating system configuration

Before carrying out the tasks below, confirm the relevant operating system settings.

OS type:

    [root@localhost ~]# cat /etc/os-release
    NAME="Kylin Linux Advanced Server"
    VERSION="V10 (Halberd)"
    ID="kylin"
    VERSION_ID="V10"
    PRETTY_NAME="Kylin Linux Advanced Server V10 (Halberd)"
    ANSI_COLOR="0;31

Kernel version:

    [root@node1 ~]# uname -r
    Linux node1 4.19.90-52.22.v2207.ky10.aarch64

Server CPU information:

    [root@node1 ~]# lscpu
    Architecture:                    aarch64
    CPU op-mode(s):                  64-bit
    Byte Order:                      Little Endian
    CPU(s):                          32
    On-line CPU(s) list:             0-31
    Thread(s) per core:              1
    Core(s) per socket:              1
    Socket(s):                       32
    NUMA node(s):                    2
    Vendor ID:                       HiSilicon
    Model:                           0
    Model name:                      Kunpeng-920
    Stepping:                        0x1
    BogoMIPS:                        200.00
    NUMA node0 CPU(s):               0-15
    NUMA node1 CPU(s):               16-31
    Vulnerability Itlb multihit:     Not affected
    Vulnerability L1tf:              Not affected
    Vulnerability Mds:               Not affected
    Vulnerability Meltdown:          Not affected
    Vulnerability Spec store bypass: Not affected
    Vulnerability Spectre v1:        Mitigation; __user pointer sanitization
    Vulnerability Spectre v2:        Not affected
    Vulnerability Srbds:             Not affected
    Vulnerability Tsx async abort:   Not affected
    Flags:                           fp asimd evtstrm aes pmull sha1 sha2 crc32 atomics fphp asimdhp cpuid asimdrdm jscvt fcma dcpop asimddp asimdfhm
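You can also script the OS and CPU checks above as a quick preflight on each node. The sketch below is minimal. It assumes bash and an /etc/os-release that sources cleanly and has the `ID` and `VERSION_ID` fields shown above. `os_field`, `kk_arch` and `preflight` are hypothetical helper names, not part of KubeKey. `kk_arch` maps `uname -m` output to the `arch:` value used later in the KubeKey config.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers for the Kylin V10 arm64 nodes.

# Print one field (e.g. ID, VERSION_ID) from an os-release style file.
# The file is sourced in a subshell so the caller's variables are untouched.
os_field() {
  local file="$1" key="$2"
  ( . "$file" && printf '%s\n' "${!key}" )
}

# Map `uname -m` output to the value used for `arch:` in the KubeKey config.
kk_arch() {
  case "$1" in
    aarch64|arm64) echo arm64 ;;
    x86_64|amd64)  echo amd64 ;;
    *) echo "unsupported:$1"; return 1 ;;
  esac
}

# Succeed only on Kylin running on a supported CPU; print a one-line summary.
preflight() {
  local os_release="${1:-/etc/os-release}" machine="${2:-$(uname -m)}"
  local id arch
  id="$(os_field "$os_release" ID)" || return 1
  arch="$(kk_arch "$machine")" || { echo "unsupported CPU: $machine" >&2; return 1; }
  if [ "$id" != "kylin" ]; then
    echo "unexpected OS ID: $id" >&2
    return 1
  fi
  echo "OK: $id $(os_field "$os_release" VERSION_ID) $arch"
}
```

With no arguments, `preflight` reads /etc/os-release and `uname -m`. On the nodes shown above it should print `OK: kylin V10 arm64`.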

2. Install the Kubernetes dependency services

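The internet-connected deploy node is used here to build the offline deployment bundle. Harbor does not officially support ARM. So KubeSphere is first installed online, and the files KubeKey generates during that installation are reused as a stand-in for an offline artifact. For this reason, KubeSphere is deployed as a single node on the 192.168.200.7 server.

The deployment below is split into several stages to make building the offline installation package easier.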

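2.1 Deploy Docker and docker-compose

For details, see part one of the following article by 天行1st on the WeChat official account 编码如写诗: "鲲鹏+欧拉部署KubeSphere3.4" (Deploying KubeSphere 3.4 on Kunpeng + openEuler).

Here a prebuilt installation package is used instead: extract it and run the install.sh inside.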

https://pan.baidu.com/s/1NUYFg3ayp1JHhNdUSY25wQ?pwd=9tek

2.2 Deploy Harbor

Upload the installation package, extract it, and run the install.sh inside.

https://pan.baidu.com/s/1fL69nDOG5j92bEk84UQk7g?pwd=uian

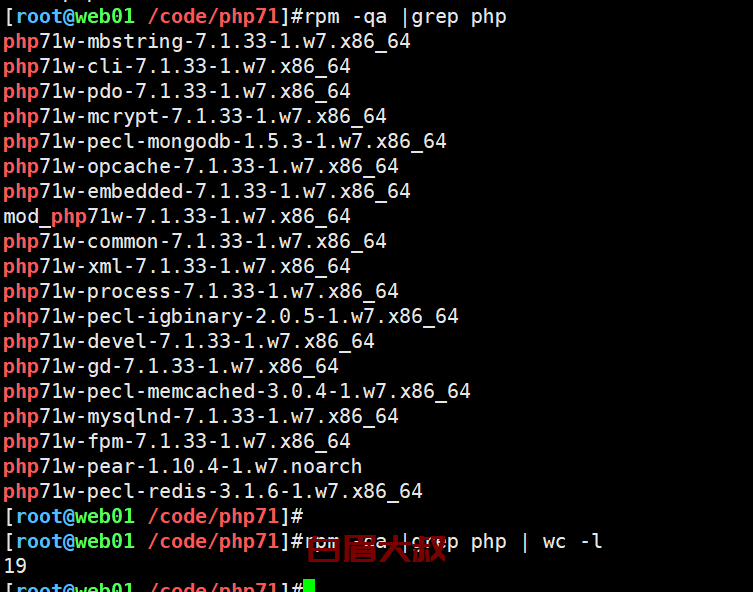
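The pushes in 2.6 go to the `kubesphereio` project in Harbor. The offline bundle also calls a `create_project_harbor.sh` whose contents are not shown. The sketch below is a hedged guess at what such a script can do, using Harbor's v2.0 REST API (`POST /api/v2.0/projects`). The HTTPS URL, the `admin`/`Harbor12345` credentials and the project name come from the rest of this article and are assumptions here. `project_body` and `create_project` are hypothetical names, and `DRY_RUN=1` only prints the curl command.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a create_project_harbor.sh; the author's script is not shown.
HARBOR_URL="${HARBOR_URL:-https://dockerhub.kubekey.local}"   # assumption: Harbor served over HTTPS
HARBOR_USER="${HARBOR_USER:-admin}"
HARBOR_PASS="${HARBOR_PASS:-Harbor12345}"
DRY_RUN="${DRY_RUN:-0}"   # 1 = print the curl command instead of running it

# JSON body for a public Harbor project.
project_body() {
  printf '{"project_name": "%s", "metadata": {"public": "true"}}' "$1"
}

# Create one project via POST /api/v2.0/projects (-k skips TLS verification).
create_project() {
  local cmd=(curl -k -sS -u "${HARBOR_USER}:${HARBOR_PASS}" -X POST
             -H "Content-Type: application/json"
             "${HARBOR_URL}/api/v2.0/projects"
             -d "$(project_body "$1")")
  if [ "$DRY_RUN" = "1" ]; then
    printf '%q ' "${cmd[@]}"
    echo
  else
    "${cmd[@]}"
  fi
}
```

Run `create_project kubesphereio` once after Harbor is up and before the first `docker push`.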

2.3 Download the Kubernetes dependency packages for Kylin

    mkdir -p /root/kubesphere/k8s-init
    # Download the dependencies into /root/kubesphere/k8s-init
    yum -y install openssl socat conntrack ipset ebtables chrony ipvsadm --downloadonly --downloaddir /root/kubesphere/k8s-init
    # Write the install script
    cat install.sh
    #!/bin/bash
    #

    rpm -ivh *.rpm --force --nodeps

    # Pack everything into a tarball for the offline deployment (run from /root/kubesphere)
    tar -czvf k8s-init-Kylin_V10-arm.tar.gz ./k8s-init/*
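`yum --downloadonly` can skip packages, and dependencies, that are already installed on the deploy node. The bundle can therefore lack an RPM that a fresh offline node needs. The minimal sketch below reports which of the named packages have no matching RPM file in the download directory. It assumes the usual `<name>-<version>-<release>.<arch>.rpm` file naming. `missing_rpms` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print each package name from the argument list that has no RPM in directory $1.
# Assumes files named <name>-<version>-<release>.<arch>.rpm, with a version starting with a digit.
missing_rpms() {
  local dir="$1"; shift
  local pkg f found
  for pkg in "$@"; do
    found=0
    for f in "$dir"/"$pkg"-[0-9]*.rpm; do
      [ -e "$f" ] && { found=1; break; }   # an unmatched glob stays literal, so -e fails
    done
    [ "$found" = 1 ] || echo "$pkg"
  done
}
```

Pass RPM package names rather than the names given to `yum install`. On many RPM-based distributions, for example, `conntrack` is provided by a package called `conntrack-tools`.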

2.4 Download the KubeSphere images

Download the arm64 images required by KubeSphere 3.3.1:

    #!/bin/bash
    # docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.3.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.3.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.3.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.3.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.3.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/alpine:3.14
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.22.12
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.12
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.12
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.22.12
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:3.3.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:3.3.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v2.5.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/fluent-bit:v1.8.11
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.55.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.55.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/thanos:v0.25.2
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.34.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/fluentbit-operator:v0.13.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v1.3.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.22.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v1.4.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v1.4.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.23.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/docker:19.03
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/configmap-reload:v0.5.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.8.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/log-sidecar-injector:1.1
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/redis:5.0.14-alpine
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.3
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch:2.6.0
    docker pull registry.cn-beijing.aliyuncs.com/kubesphereio/busybox:latest
    docker pull kubesphere/fluent-bit:v2.0.6
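Pulls from the mirror can fail intermittently. A hedged alternative to the long list above is to keep the image references in a plain text file, one per line with `#` for comments, and pull them in a loop. The loop retries each image a few times and reports what still failed at the end. `images.txt`, `pull_all` and `MAX_TRIES` are hypothetical names, and `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
DRY_RUN="${DRY_RUN:-0}"    # 1 = print commands only
MAX_TRIES="${MAX_TRIES:-3}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

# Pull every image listed in file $1, retrying each up to MAX_TRIES times.
# Prints "FAILED: <image>" for each image that never succeeded and returns 1.
pull_all() {
  local list="$1" img i failed=()
  while IFS= read -r img || [ -n "$img" ]; do
    img="${img%%#*}"                 # drop comments
    img="${img//[[:space:]]/}"       # drop whitespace
    [ -z "$img" ] && continue
    for ((i = 1; i <= MAX_TRIES; i++)); do
      run docker pull "$img" </dev/null && break
      [ "$i" -lt "$MAX_TRIES" ] && sleep 2   # back off only between attempts
    done
    [ "$i" -le "$MAX_TRIES" ] || failed+=("$img")
  done < "$list"
  if [ "${#failed[@]}" -gt 0 ]; then
    printf 'FAILED: %s\n' "${failed[@]}"
    return 1
  fi
}
```

For example, `pull_all images.txt` pulls everything in the file. Any `FAILED:` lines show which images to fetch from hub.docker.com instead.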

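These images come from KubeSphere's Alibaba Cloud mirror, and some of them fail to download; see the 运维有术 article for details. You can pull images that fail directly from hub.docker.com on a local computer, for example: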

    docker pull --platform linux/arm64 kubesphere/fluent-bit:v2.0.6
    # The official ks-console:v3.3.1 (arm build) does not run on Kylin; according to 运维有术 it needs a node14 base image.
    # Building it myself on the Kunpeng server failed because the Taobao npm mirror's HTTPS certificate had expired,
    # and switching to https://registry.npmmirror.com still failed, so I gave up and used this v3.3.0 image, retagged as v3.3.1.
    docker pull zl862520682/ks-console:v3.3.0
    docker tag zl862520682/ks-console:v3.3.0 dockerhub.kubekey.local/kubesphereio/ks-console:v3.3.1
    ## mc and minio also need to be pulled again and retagged
    docker pull minio/minio:RELEASE.2020-11-25T22-36-25Z-arm64
    docker tag minio/minio:RELEASE.2020-11-25T22-36-25Z-arm64 dockerhub.kubekey.local/kubesphereio/minio:RELEASE
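A single amd64 image is enough to break a component on these nodes, so check the architecture of every local image before retagging. The minimal sketch below relies on the `{{.Architecture}}` field of `docker image inspect` and on `docker images --format`. `image_arch` and `non_arm64_images` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Print the CPU architecture recorded in a local image's config.
image_arch() {
  docker image inspect --format '{{.Architecture}}' "$1"
}

# List local images (optionally only those whose reference starts with $1)
# that are not arm64, as "<image> (<arch>)".
non_arm64_images() {
  local prefix="${1:-}" ref arch
  docker images --format '{{.Repository}}:{{.Tag}}' | while IFS= read -r ref; do
    case "$ref" in "$prefix"*) ;; *) continue ;; esac
    case "$ref" in *"<none>"*) continue ;; esac     # skip dangling images
    arch="$(image_arch "$ref")"
    [ "$arch" = "arm64" ] || echo "$ref ($arch)"
  done
}
```

For example, `non_arm64_images registry.cn-beijing.aliyuncs.com/` lists any mirror image that still needs an arm64 replacement.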

2.5 Retag the images

Retag the images so that they point at the private registry:

    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.27.3 dockerhub.kubekey.local/kubesphereio/kube-controllers:v3.27.3
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.27.3 dockerhub.kubekey.local/kubesphereio/cni:v3.27.3
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.27.3 dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.27.3
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.27.3 dockerhub.kubekey.local/kubesphereio/node:v3.27.3
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.3.1 dockerhub.kubekey.local/kubesphereio/ks-console:v3.3.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/alpine:3.14 dockerhub.kubekey.local/kubesphereio/alpine:3.14
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.22.20 dockerhub.kubekey.local/kubesphereio/k8s-dns-node-cache:1.22.20
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.3.1 dockerhub.kubekey.local/kubesphereio/ks-controller-manager:v3.3.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.3.1 dockerhub.kubekey.local/kubesphereio/ks-installer:v3.3.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.3.1 dockerhub.kubekey.local/kubesphereio/ks-apiserver:v3.3.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.3.1 dockerhub.kubekey.local/kubesphereio/openpitrix-jobs:v3.3.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.22.12 dockerhub.kubekey.local/kubesphereio/kube-apiserver:v1.22.12
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.22.12 dockerhub.kubekey.local/kubesphereio/kube-controller-manager:v1.22.12
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.12 dockerhub.kubekey.local/kubesphereio/kube-scheduler:v1.22.12
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:3.3.0 dockerhub.kubekey.local/kubesphereio/provisioner-localpv:3.3.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:3.3.0 dockerhub.kubekey.local/kubesphereio/linux-utils:3.3.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v2.5.0 dockerhub.kubekey.local/kubesphereio/kube-state-metrics:v2.5.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/fluent-bit:v1.8.11 dockerhub.kubekey.local/kubesphereio/fluent-bit:v1.8.11
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.55.1 dockerhub.kubekey.local/kubesphereio/prometheus-config-reloader:v0.55.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.55.1 dockerhub.kubekey.local/kubesphereio/prometheus-operator:v0.55.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/thanos:v0.25.2 dockerhub.kubekey.local/kubesphereio/thanos:v0.25.2
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.34.0 dockerhub.kubekey.local/kubesphereio/prometheus:v2.34.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/fluentbit-operator:v0.13.0 dockerhub.kubekey.local/kubesphereio/fluentbit-operator:v0.13.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v1.3.1 dockerhub.kubekey.local/kubesphereio/node-exporter:v1.3.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.22.0 dockerhub.kubekey.local/kubesphereio/kubectl:v1.22.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v1.4.0 dockerhub.kubekey.local/kubesphereio/notification-manager:v1.4.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0 dockerhub.kubekey.local/kubesphereio/notification-tenant-sidecar:v3.2.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v1.4.0 dockerhub.kubekey.local/kubesphereio/notification-manager-operator:v1.4.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.23.0 dockerhub.kubekey.local/kubesphereio/alertmanager:v0.23.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0 dockerhub.kubekey.local/kubesphereio/kube-rbac-proxy:v0.11.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/docker:19.03 dockerhub.kubekey.local/kubesphereio/docker:19.03
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/metrics-server:v0.4.2 dockerhub.kubekey.local/kubesphereio/metrics-server:v0.4.2
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5 dockerhub.kubekey.local/kubesphereio/pause:3.5
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/configmap-reload:v0.5.0 dockerhub.kubekey.local/kubesphereio/configmap-reload:v0.5.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0 dockerhub.kubekey.local/kubesphereio/snapshot-controller:v4.0.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z dockerhub.kubekey.local/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z dockerhub.kubekey.local/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.8.0 dockerhub.kubekey.local/kubesphereio/kube-rbac-proxy:v0.8.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0 dockerhub.kubekey.local/kubesphereio/coredns:1.8.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/log-sidecar-injector:1.1 dockerhub.kubekey.local/kubesphereio/log-sidecar-injector:1.1
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4 dockerhub.kubekey.local/kubesphereio/defaultbackend-amd64:1.4
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.12 dockerhub.kubekey.local/kubesphereio/kube-proxy:v1.22.12
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.22.20 dockerhub.kubekey.local/kubesphereio/k8s-dns-node-cache:1.15.12
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2 dockerhub.kubekey.local/kubesphereio/kube-controllers:v3.23.2
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2 dockerhub.kubekey.local/kubesphereio/cni:v3.23.2
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2 dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.23.2
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2 dockerhub.kubekey.local/kubesphereio/node:v3.23.2
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch:2.6.0 dockerhub.kubekey.local/kubesphereio/opensearch:2.6.0
    docker tag registry.cn-beijing.aliyuncs.com/kubesphereio/busybox:latest dockerhub.kubekey.local/kubesphereio/busybox:latest
    # This could also be retagged as v1.8.11 to avoid editing the fluent-bit YAML later; this article edits the YAML afterwards instead
    docker tag kubesphere/fluent-bit:v2.0.6 dockerhub.kubekey.local/kubesphereio/fluent-bit:v2.0.6
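Most of the retag commands above follow one rule. Keep the final `name:tag` component and put it under `dockerhub.kubekey.local/kubesphereio`. The hedged sketch below expresses that rule as a function that can drive a loop instead of a hand-written list. It does not cover the deliberate renames (the ks-console, k8s-dns-node-cache and minio substitutions), which still need explicit `docker tag` lines. `to_private_ref`, `retag_all` and `PRIVATE_PREFIX` are hypothetical names.

```shell
#!/usr/bin/env bash
PRIVATE_PREFIX="${PRIVATE_PREFIX:-dockerhub.kubekey.local/kubesphereio}"

# Map a source image reference to its private-registry name
# by keeping only the last path component (name:tag).
to_private_ref() {
  local src="$1"
  echo "${PRIVATE_PREFIX}/${src##*/}"
}

# Tag every source image given as an argument; DRY_RUN=1 only prints.
retag_all() {
  local src
  for src in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "docker tag $src $(to_private_ref "$src")"
    else
      docker tag "$src" "$(to_private_ref "$src")"
    fi
  done
}
```

For example, `to_private_ref kubesphere/fluent-bit:v2.0.6` yields `dockerhub.kubekey.local/kubesphereio/fluent-bit:v2.0.6`, the same target as the last line of the list above.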

2.6 Push to the private Harbor registry

    #!/bin/bash
    #

    docker load < ks3.3.1-images.tar.gz

    docker login -u admin -p Harbor12345 dockerhub.kubekey.local

    docker push dockerhub.kubekey.local/kubesphereio/ks-console:v3.3.1
    docker push dockerhub.kubekey.local/kubesphereio/ks-controller-manager:v3.3.1
    docker push dockerhub.kubekey.local/kubesphereio/ks-installer:v3.3.1
    docker push dockerhub.kubekey.local/kubesphereio/ks-apiserver:v3.3.1
    docker push dockerhub.kubekey.local/kubesphereio/openpitrix-jobs:v3.3.1
    docker push dockerhub.kubekey.local/kubesphereio/alpine:3.14
    docker push dockerhub.kubekey.local/kubesphereio/kube-apiserver:v1.22.12
    docker push dockerhub.kubekey.local/kubesphereio/kube-scheduler:v1.22.12
    docker push dockerhub.kubekey.local/kubesphereio/kube-proxy:v1.22.12
    docker push dockerhub.kubekey.local/kubesphereio/kube-controller-manager:v1.22.12
    docker push dockerhub.kubekey.local/kubesphereio/provisioner-localpv:3.3.0
    docker push dockerhub.kubekey.local/kubesphereio/linux-utils:3.3.0
    docker push dockerhub.kubekey.local/kubesphereio/kube-controllers:v3.23.2
    docker push dockerhub.kubekey.local/kubesphereio/cni:v3.23.2
    docker push dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.23.2
    docker push dockerhub.kubekey.local/kubesphereio/node:v3.23.2
    docker push dockerhub.kubekey.local/kubesphereio/kube-state-metrics:v2.5.0
    docker push dockerhub.kubekey.local/kubesphereio/fluent-bit:v1.8.11
    docker push dockerhub.kubekey.local/kubesphereio/prometheus-config-reloader:v0.55.1
    docker push dockerhub.kubekey.local/kubesphereio/prometheus-operator:v0.55.1
    docker push dockerhub.kubekey.local/kubesphereio/thanos:v0.25.2
    docker push dockerhub.kubekey.local/kubesphereio/prometheus:v2.34.0
    docker push dockerhub.kubekey.local/kubesphereio/fluentbit-operator:v0.13.0
    docker push dockerhub.kubekey.local/kubesphereio/node-exporter:v1.3.1
    docker push dockerhub.kubekey.local/kubesphereio/kubectl:v1.22.0
    docker push dockerhub.kubekey.local/kubesphereio/notification-manager:v1.4.0
    docker push dockerhub.kubekey.local/kubesphereio/notification-tenant-sidecar:v3.2.0
    docker push dockerhub.kubekey.local/kubesphereio/notification-manager-operator:v1.4.0
    docker push dockerhub.kubekey.local/kubesphereio/alertmanager:v0.23.0
    docker push dockerhub.kubekey.local/kubesphereio/kube-rbac-proxy:v0.11.0
    docker push dockerhub.kubekey.local/kubesphereio/docker:19.03
    docker push dockerhub.kubekey.local/kubesphereio/pause:3.5
    docker push dockerhub.kubekey.local/kubesphereio/configmap-reload:v0.5.0
    docker push dockerhub.kubekey.local/kubesphereio/snapshot-controller:v4.0.0
    docker push dockerhub.kubekey.local/kubesphereio/kube-rbac-proxy:v0.8.0
    docker push dockerhub.kubekey.local/kubesphereio/coredns:1.8.0
    docker push dockerhub.kubekey.local/kubesphereio/log-sidecar-injector:1.1
    docker push dockerhub.kubekey.local/kubesphereio/k8s-dns-node-cache:1.15.12
    docker push dockerhub.kubekey.local/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
    docker push dockerhub.kubekey.local/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
    docker push dockerhub.kubekey.local/kubesphereio/defaultbackend-amd64:1.4
    docker push dockerhub.kubekey.local/kubesphereio/redis:5.0.14-alpine
    docker push dockerhub.kubekey.local/kubesphereio/haproxy:2.3
    docker push dockerhub.kubekey.local/kubesphereio/opensearch:2.6.0
    docker push dockerhub.kubekey.local/kubesphereio/busybox:latest
    docker push dockerhub.kubekey.local/kubesphereio/fluent-bit:v2.0.6
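The push list above must be kept in sync with the tag list by hand. The hedged sketch below instead pushes whatever is currently tagged under the private prefix and prints a count of successes and failures. It uses only `docker images --format` and `docker push`. `push_private_images` and `PRIVATE_PREFIX` are hypothetical names, and `docker login` is assumed to have been run already.

```shell
#!/usr/bin/env bash
PRIVATE_PREFIX="${PRIVATE_PREFIX:-dockerhub.kubekey.local/kubesphereio/}"

# Push every local image whose reference starts with PRIVATE_PREFIX.
# Prints "pushed=N failed=M" and returns non-zero if any push failed.
push_private_images() {
  local ref ok=0 failed=0
  while IFS= read -r ref; do
    if docker push "$ref" </dev/null >/dev/null; then
      ok=$((ok + 1))
    else
      failed=$((failed + 1))
      echo "push failed: $ref" >&2
    fi
  done < <(docker images --format '{{.Repository}}:{{.Tag}}' \
             | awk -v p="$PRIVATE_PREFIX" 'index($0, p) == 1' | sort -u)
  echo "pushed=$ok failed=$failed"
  [ "$failed" -eq 0 ]
}
```

The prefix match uses awk's `index()` rather than a regular expression, so the dots in the registry name are matched literally.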

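3. Deploy KubeSphere with kk

3.1 Remove the podman bundled with Kylin

podman is the container engine that ships with Kylin. Uninstall it to avoid conflicts with Docker later on. Otherwise coredns/nodelocaldns will fail to start and you will run into assorted Docker permission problems.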

yum remove podman

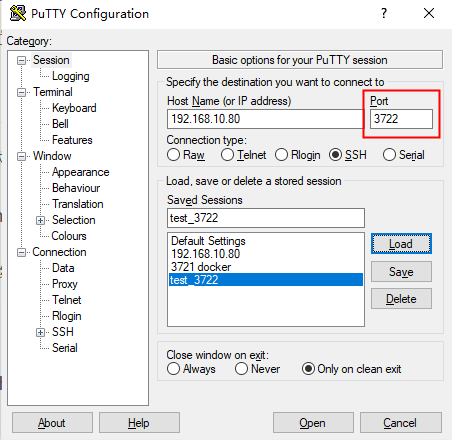

3.2 Download KubeKey

Download kubekey-v2.3.1-linux-arm64.tar.gz. The available KubeKey versions are listed on the KubeKey releases page ([1]).

Option 1:

    cd ~
    mkdir kubesphere
    cd kubesphere/

    # Use the China download zone (when access to GitHub is restricted)
    export KKZONE=cn

    # Download kk v2.3.1 (depending on the network, this may take several attempts)
    curl -sfL https://get-kk.kubesphere.io/v2.3.1/kubekey-v2.3.1-linux-arm64.tar.gz | tar xzf -

Option 2:

On a local computer, download it directly from GitHub: Releases · kubesphere/kubekey

Upload it to /root/kubesphere on the server and extract it:

tar zxf kubekey-v2.3.1-linux-arm64.tar.gz

3.3 Generate the cluster configuration file

Create the cluster configuration file. This example uses KubeSphere v3.3.1 and Kubernetes v1.22.12:

./kk create config -f kubesphere-v331-v12212.yaml --with-kubernetes v1.22.12 --with-kubesphere v3.3.1

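When the command succeeds, a configuration file named kubesphere-v331-v12212.yaml is created in the current directory.

Note: The generated default configuration file is long, so it is not shown in full here. For details on every parameter, see the official configuration example.

In this example, all 3 nodes act as control-plane, etcd and worker nodes at the same time.

Edit kubesphere-v331-v12212.yaml, mainly the kind: Cluster and kind: ClusterConfiguration sections.

In the kind: Cluster section, change hosts, roleGroups and the related settings as described below.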

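- hosts: the IP, SSH user, SSH password and SSH port of each node. **Important:** you must set arch: arm64 explicitly; otherwise the deployment installs x86 packages.
- roleGroups: 3 etcd and control-plane nodes, with the same machines reused as the 3 worker nodes.
- internalLoadbalancer: enables the built-in HAProxy load balancer.
- domain: a custom domain, opsman.top.
- containerManager: containerd.
- storage.openebs.basePath: a newly added setting that sets the default storage path to /data/openebs/local.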
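A missing arch: arm64 silently leads to x86 packages, so check the hosts entries before running kk. The rough sketch below assumes the one-line `- {name: ..., ...}` host format that `kk create config` generates. It is a grep-level check, not a YAML parser. `check_hosts_arch` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print host entries in a KubeKey config file that do not declare arch: arm64.
# Assumes each host is written on a single line as "- {name: ..., ...}".
check_hosts_arch() {
  local cfg="$1" bad
  bad="$(grep -E '^[[:space:]]*-[[:space:]]*\{[[:space:]]*name:' "$cfg" \
           | grep -v 'arch:[[:space:]]*arm64')"
  if [ -n "$bad" ]; then
    echo "$bad"
    return 1
  fi
}
```

For example, running `check_hosts_arch kubesphere-v331-v12212.yaml` before `./kk create cluster` prints the offending lines and returns non-zero if any host lacks the setting.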

The modified example is as follows:

    apiVersion: kubekey.kubesphere.io/v1alpha2
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: node1, address: 192.168.200.7, internalAddress: 192.168.200.7, user: root, password: "123456", arch: arm64}
      roleGroups:
        etcd:
        - node1
        control-plane:
        - node1
        worker:
        - node1
        registry:
        - node1
      controlPlaneEndpoint:
        ## Internal loadbalancer for apiservers
        # internalLoadbalancer: haproxy

        domain: lb.kubesphere.local
        address: ""
        port: 6443
      kubernetes:
        version: v1.22.12
        clusterName: cluster.local
        autoRenewCerts: true
        containerManager: docker
      etcd:
        type: kubekey
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
        ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
        multusCNI:
          enabled: false
      registry:
        type: harbor
        auths:
          "dockerhub.kubekey.local":
            username: admin
            password: Harbor12345
        privateRegistry: "dockerhub.kubekey.local"
        namespaceOverride: "kubesphereio"
        registryMirrors: []
        insecureRegistries: []
      addons: []

    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.3.1
    spec:
      persistence:
        storageClass: ""
      authentication:
        jwtSecret: ""
      zone: ""
      local_registry: ""
      namespace_override: ""
      # dev_tag: ""
      etcd:
        monitoring: true
        endpointIps: localhost
        port: 2379
        tlsEnable: true
      common:
        core:
          console:
            enableMultiLogin: true
            port: 30880
            type: NodePort
        # apiserver:
        #  resources: {}
        # controllerManager:
        #  resources: {}
        redis:
          enabled: false
          volumeSize: 2Gi
        openldap:
          enabled: false
          volumeSize: 2Gi
        minio:
          volumeSize: 20Gi
        monitoring:
          # type: external
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
          GPUMonitoring:
            enabled: false
        gpu:
          kinds:
          - resourceName: "nvidia.com/gpu"
            resourceType: "GPU"
            default: true
        es:
          # master:
          #   volumeSize: 4Gi
          #   replicas: 1
          #   resources: {}
          # data:
          #   volumeSize: 20Gi
          #   replicas: 1
          #   resources: {}
          logMaxAge: 7
          elkPrefix: logstash
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchHost: ""
          externalElasticsearchPort: ""
      alerting:
        enabled: true
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing:
        enabled: false
        # operator:
        #   resources: {}
        # webhook:
        #   resources: {}
      devops:
        enabled: false
        # resources: {}
        jenkinsMemoryLim: 8Gi
        jenkinsMemoryReq: 4Gi
        jenkinsVolumeSize: 8Gi
      events:
        enabled: false
        # operator:
        #   resources: {}
        # exporter:
        #   resources: {}
        # ruler:
        #   enabled: true
        #   replicas: 2
        #   resources: {}
      logging:
        enabled: true
        logsidecar:
          enabled: true
          replicas: 2
          # resources: {}
      metrics_server:
        enabled: false
      monitoring:
        storageClass: ""
        node_exporter:
          port: 9100
          # resources: {}
        # kube_rbac_proxy:
        #   resources: {}
        # kube_state_metrics:
        #   resources: {}
        # prometheus:
        #   replicas: 1
        #   volumeSize: 20Gi
        #   resources: {}
        #   operator:
        #     resources: {}
        # alertmanager:
        #   replicas: 1
        #   resources: {}
        # notification_manager:
        #   resources: {}
        #   operator:
        #     resources: {}
        #   proxy:
        #     resources: {}
        gpu:
          nvidia_dcgm_exporter:
            enabled: false
            # resources: {}
      multicluster:
        clusterRole: none
      network:
        networkpolicy:
          enabled: false
        ippool:
          type: none
        topology:
          type: none
      openpitrix:
        store:
          enabled: true
      servicemesh:
        enabled: false
        istio:
          components:
            ingressGateways:
            - name: istio-ingressgateway
              enabled: false
            cni:
              enabled: false
      edgeruntime:
        enabled: false
        kubeedge:
          enabled: false
          cloudCore:
            cloudHub:
              advertiseAddress:
                - ""
            service:
              cloudhubNodePort: "30000"
              cloudhubQuicNodePort: "30001"
              cloudhubHttpsNodePort: "30002"
              cloudstreamNodePort: "30003"
              tunnelNodePort: "30004"
            # resources: {}
            # hostNetWork: false
          iptables-manager:
            enabled: true
            mode: "external"
            # resources: {}
          # edgeService:
          #   resources: {}
      terminal:
        timeout: 600

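3.4 Run the installation

./kk create cluster -f kubesphere-v331-v12212.yaml

KubeSphere is installed on this node for a specific reason. During installation, kk creates a kubekey directory and downloads everything Kubernetes needs into it. The offline installation later relies mainly on this kubekey directory, used together with the scripts below in place of a KubeKey artifact.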

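4. Build the offline deployment resources

4.1 Export the base Kubernetes dependency packages

    yum -y install openssl socat conntrack ipset ebtables chrony ipvsadm --downloadonly --downloaddir /root/kubesphere/k8s-init
    # Pack into a tarball (run from /root/kubesphere)
    tar -czvf k8s-init-Kylin_V10-arm.tar.gz ./k8s-init/*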

4.2 Export the images KubeSphere needs

Export the KubeSphere images to ks3.3.1-images.tar:

    docker save -o ks3.3.1-images.tar \
      dockerhub.kubekey.local/kubesphereio/kube-controllers:v3.27.3 \
      dockerhub.kubekey.local/kubesphereio/cni:v3.27.3 \
      dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.27.3 \
      dockerhub.kubekey.local/kubesphereio/node:v3.27.3 \
      dockerhub.kubekey.local/kubesphereio/ks-console:v3.3.1 \
      dockerhub.kubekey.local/kubesphereio/alpine:3.14 \
      dockerhub.kubekey.local/kubesphereio/k8s-dns-node-cache:1.22.20 \
      dockerhub.kubekey.local/kubesphereio/ks-controller-manager:v3.3.1 \
      dockerhub.kubekey.local/kubesphereio/ks-installer:v3.3.1 \
      dockerhub.kubekey.local/kubesphereio/ks-apiserver:v3.3.1 \
      dockerhub.kubekey.local/kubesphereio/openpitrix-jobs:v3.3.1 \
      dockerhub.kubekey.local/kubesphereio/kube-apiserver:v1.22.12 \
      dockerhub.kubekey.local/kubesphereio/kube-proxy:v1.22.12 \
      dockerhub.kubekey.local/kubesphereio/kube-controller-manager:v1.22.12 \
      dockerhub.kubekey.local/kubesphereio/kube-scheduler:v1.22.12 \
      dockerhub.kubekey.local/kubesphereio/provisioner-localpv:3.3.0 \
      dockerhub.kubekey.local/kubesphereio/linux-utils:3.3.0 \
      dockerhub.kubekey.local/kubesphereio/kube-state-metrics:v2.5.0 \
      dockerhub.kubekey.local/kubesphereio/fluent-bit:v2.0.6 \
      dockerhub.kubekey.local/kubesphereio/prometheus-config-reloader:v0.55.1 \
      dockerhub.kubekey.local/kubesphereio/prometheus-operator:v0.55.1 \
      dockerhub.kubekey.local/kubesphereio/thanos:v0.25.2 \
      dockerhub.kubekey.local/kubesphereio/prometheus:v2.34.0 \
      dockerhub.kubekey.local/kubesphereio/fluentbit-operator:v0.13.0 \
      dockerhub.kubekey.local/kubesphereio/node-exporter:v1.3.1 \
      dockerhub.kubekey.local/kubesphereio/kubectl:v1.22.0 \
      dockerhub.kubekey.local/kubesphereio/notification-manager:v1.4.0 \
      dockerhub.kubekey.local/kubesphereio/notification-tenant-sidecar:v3.2.0 \
      dockerhub.kubekey.local/kubesphereio/notification-manager-operator:v1.4.0 \
      dockerhub.kubekey.local/kubesphereio/alertmanager:v0.23.0 \
      dockerhub.kubekey.local/kubesphereio/kube-rbac-proxy:v0.11.0 \
      dockerhub.kubekey.local/kubesphereio/docker:19.03 \
      dockerhub.kubekey.local/kubesphereio/metrics-server:v0.4.2 \
      dockerhub.kubekey.local/kubesphereio/pause:3.5 \
      dockerhub.kubekey.local/kubesphereio/configmap-reload:v0.5.0 \
      dockerhub.kubekey.local/kubesphereio/snapshot-controller:v4.0.0 \
      dockerhub.kubekey.local/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z \
      dockerhub.kubekey.local/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z \
      dockerhub.kubekey.local/kubesphereio/kube-rbac-proxy:v0.8.0 \
      dockerhub.kubekey.local/kubesphereio/coredns:1.8.0 \
      dockerhub.kubekey.local/kubesphereio/defaultbackend-amd64:1.4 \
      dockerhub.kubekey.local/kubesphereio/redis:5.0.14-alpine \
      dockerhub.kubekey.local/kubesphereio/k8s-dns-node-cache:1.15.12 \
      dockerhub.kubekey.local/kubesphereio/node:v3.23.2 \
      dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.23.2 \
      dockerhub.kubekey.local/kubesphereio/cni:v3.23.2 \
      dockerhub.kubekey.local/kubesphereio/kube-controllers:v3.23.2 \
      dockerhub.kubekey.local/kubesphereio/haproxy:2.3 \
      dockerhub.kubekey.local/kubesphereio/busybox:latest \
      dockerhub.kubekey.local/kubesphereio/opensearch:2.6.0

Compress it:

gzip ks3.3.1-images.tar
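Maintaining the `docker save` list by hand makes it easy to miss an image or list one twice. A hedged alternative is to build the list from whatever is tagged under the private prefix and stream it straight into gzip. `docker load` accepts the gzip-compressed archive directly, as in step 2.6. `list_private_images`, `save_private_images` and `PRIVATE_PREFIX` are hypothetical names.

```shell
#!/usr/bin/env bash
PRIVATE_PREFIX="${PRIVATE_PREFIX:-dockerhub.kubekey.local/kubesphereio/}"

# Unique image references under the private prefix, skipping dangling tags.
list_private_images() {
  docker images --format '{{.Repository}}:{{.Tag}}' \
    | awk -v p="$PRIVATE_PREFIX" 'index($0, p) == 1 && $0 !~ /<none>/' \
    | sort -u
}

# Save all of them into one gzip-compressed archive (default: ks3.3.1-images.tar.gz).
save_private_images() {
  local out="${1:-ks3.3.1-images.tar.gz}"
  local -a refs
  mapfile -t refs < <(list_private_images)
  if [ "${#refs[@]}" -eq 0 ]; then
    echo "no images under $PRIVATE_PREFIX" >&2
    return 1
  fi
  docker save "${refs[@]}" | gzip > "$out"
  local st=("${PIPESTATUS[@]}")          # exit codes of docker save and gzip
  [ "${st[0]}" -eq 0 ] && [ "${st[1]}" -eq 0 ]
}
```

Run `list_private_images` first to review what will be exported.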

4.3 Export the kubesphere directory

    [root@node1 ~]# cd /root/kubesphere
    [root@node1 kubesphere]# ls
    create_project_harbor.sh  docker-24.0.7-arm.tar.gz  fluent-bit-daemonset.yaml  harbor-arm.tar.gz  harbor.tar.gz  install.sh  k8s-init-Kylin_V10-arm.tar.gz  ks3.3.1-images.tar.gz  ks3.3.1-offline  push-images.sh

tar -czvf kubeshpere.tar.gz ./kubesphere/*
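The bundle is carried into the air-gapped network by hand, so verifying it on arrival is cheap insurance. The sketch below uses `sha256sum` from GNU coreutils. The `.sha256` sidecar file naming is an assumption, and `make_checksum` and `verify_checksum` are hypothetical names.

```shell
#!/usr/bin/env bash
# On the deploy node: write <bundle>.sha256 next to the bundle.
make_checksum() {
  local bundle="$1"
  ( cd "$(dirname "$bundle")" && sha256sum "$(basename "$bundle")" > "$(basename "$bundle").sha256" )
}

# On the offline node: verify the bundle against <bundle>.sha256 (non-zero on mismatch).
verify_checksum() {
  local bundle="$1"
  ( cd "$(dirname "$bundle")" && sha256sum -c --quiet "$(basename "$bundle").sha256" )
}
```

Run `make_checksum kubeshpere.tar.gz` on the deploy node and copy both files. Then run `verify_checksum kubeshpere.tar.gz` before extracting on the offline side.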

Write an install.sh that later performs the offline installation in one step:

    #!/usr/bin/env bash
    read -p "Please first update the IP addresses in the machine configuration file ks3.3.1-offline/kubesphere-v331-v12212.yaml. Done? (yes/no) " B

    do_k8s_init(){
      echo "--------Initializing dependency packages--------"
      yum remove podman -y
      tar zxf k8s-init-Kylin_V10-arm.tar.gz
      cd k8s-init && ./install.sh
      cd -
      rm -rf k8s-init
    }

    install_docker(){
      echo "--------Installing Docker--------"
      tar zxf docker-24.0.7-arm.tar.gz
      cd docker && ./install.sh
      cd -
    }

    install_harbor(){
      echo "-------Installing Harbor----------"
      tar zxf harbor-arm.tar.gz
      cd harbor && ./install.sh
      cd -
      echo "--------Pushing images----------"
      source create_project_harbor.sh
      source push-images.sh
      echo "--------Image push complete--------"
    }

    install_ks(){
      echo "--------Installing KubeSphere--------"
      # tar zxf ks3.3.1-offline.tar.gz
      cd ks3.3.1-offline && ./install.sh
    }

    if [ "$B" = "yes" ] || [ "$B" = "y" ]; then
      do_k8s_init
      install_docker
      install_harbor
      install_ks
    else
      echo "Please edit the cluster configuration file first"
      exit 1
    fi
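As written, install.sh moves on to the next stage even if the previous one failed. The minimal sketch below runs the stage functions in order and stops at the first failure. It also accepts the answer in any letter case. `run_stages` and `confirmed` are hypothetical helpers. A stage function's status is that of its last command, so a stage only counts as failed when its last command fails.

```shell
#!/usr/bin/env bash
# Run the named functions in order; stop at the first one that returns non-zero.
run_stages() {
  local stage
  for stage in "$@"; do
    echo "==> $stage"
    if ! "$stage"; then
      echo "stage '$stage' failed; aborting" >&2
      return 1
    fi
  done
}

# True for y/yes in any letter case.
confirmed() {
  case "${1,,}" in
    y|yes) return 0 ;;
    *) return 1 ;;
  esac
}
```

In install.sh, the final block would then become `if confirmed "$B"; then run_stages do_k8s_init install_docker install_harbor install_ks; else ...; fi`.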

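5. Install Kubernetes and KubeSphere in the offline environment

5.1 Uninstall podman and install the Kubernetes dependencies

Do this on every node: upload k8s-init-Kylin_V10-arm.tar.gz, extract it, and run its install.sh. For a single-node offline deployment you can go straight to the next step, because the bundled install.sh already does this on the local node.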

yum remove podman -y
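In a multi-node install, podman removal and dependency installation must be repeated on every node. The hedged sketch below does this over SSH from one machine. It assumes root SSH access to the node IPs from the server table and that k8s-init-Kylin_V10-arm.tar.gz is in the current directory. `prepare_node`, `prepare_all` and `NODE_LIST` are hypothetical names, and `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
read -r -a NODES <<< "${NODE_LIST:-192.168.10.2 192.168.10.3 192.168.10.4}"
BUNDLE="${BUNDLE:-k8s-init-Kylin_V10-arm.tar.gz}"
DRY_RUN="${DRY_RUN:-0}"   # 1 = print commands only

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

# Copy the dependency bundle to one node, then remove podman and install the RPMs there.
prepare_node() {
  local node="$1"
  run scp "$BUNDLE" "root@${node}:/root/" &&
  run ssh "root@${node}" "yum remove -y podman; cd /root && tar zxf ${BUNDLE} && cd k8s-init && bash install.sh"
}

# Prepare every node; keep going on errors but return non-zero if any node failed.
prepare_all() {
  local node rc=0
  for node in "${NODES[@]}"; do
    prepare_node "$node" || { echo "node $node failed" >&2; rc=1; }
  done
  return "$rc"
}
```

Calling `DRY_RUN=1 prepare_all` first shows exactly what will run on each node.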

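5.2 Install the KubeSphere cluster

Upload kubeshpere.tar.gz and extract it. Then update the IP addresses, passwords and other details in the cluster configuration file ./kubesphere/ks3.3.1-offline/kubesphere-v331-v12212.yaml. Run install.sh; after about ten minutes you should see output like this: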

    **************************************************
    Waiting for all tasks to be completed ...
    task alerting status is successful  (1/6)
    task network status is successful  (2/6)
    task multicluster status is successful  (3/6)
    task openpitrix status is successful  (4/6)
    task logging status is successful  (5/6)
    task monitoring status is successful  (6/6)
    **************************************************
    Collecting installation results ...
    #####################################################
    ###              Welcome to KubeSphere!           ###
    #####################################################

    Console: http://192.168.10.2:30880
    Account: admin
    Password: P@88w0rd
    NOTES:
      1. After you log into the console, please check the monitoring status of service components in "Cluster Management". If any service is not ready, please wait patiently until all components are up and running.
      2. Please change the default password after login.

    #####################################################
    https://kubesphere.io             2024-07-03 11:10:11
    #####################################################

5.3 Additional changes

With logging enabled, fluent-bit keeps failing on ARM Kylin, so change it to v2.0.6:

    kubectl edit daemonsets fluent-bit -n kubesphere-logging-system
    # Change the fluent-bit version in it from 1.8.11 to 2.0.6
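The same change can be made without an interactive editor. The hedged sketch below uses `kubectl set image` and then waits for the rollout. It assumes the container inside the fluent-bit DaemonSet is also named `fluent-bit`; confirm this with `show_fluent_bit_images` first. It also assumes the v2.0.6 image was pushed as `dockerhub.kubekey.local/kubesphereio/fluent-bit:v2.0.6` in step 2.6. `show_fluent_bit_images`, `upgrade_fluent_bit` and `NEW_IMAGE` are hypothetical names, and `DRY_RUN=1` only prints the commands. The DaemonSet is created by fluentbit-operator, so if the old image comes back, it may need to be changed in the operator-managed resource instead.

```shell
#!/usr/bin/env bash
NS="kubesphere-logging-system"
NEW_IMAGE="${NEW_IMAGE:-dockerhub.kubekey.local/kubesphereio/fluent-bit:v2.0.6}"
DRY_RUN="${DRY_RUN:-0}"   # 1 = print commands only

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

# Show "container=image" for each container in the fluent-bit DaemonSet.
show_fluent_bit_images() {
  run kubectl -n "$NS" get daemonset fluent-bit \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.name}={.image}{"\n"}{end}'
}

# Point the fluent-bit container at the v2.0.6 image and wait for the rollout to finish.
upgrade_fluent_bit() {
  run kubectl -n "$NS" set image daemonset/fluent-bit "fluent-bit=${NEW_IMAGE}" &&
  run kubectl -n "$NS" rollout status daemonset/fluent-bit --timeout=300s
}
```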

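If you do not need logging, disable the logging component in the KubeSphere cluster configuration file; the image list can then be trimmed further.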

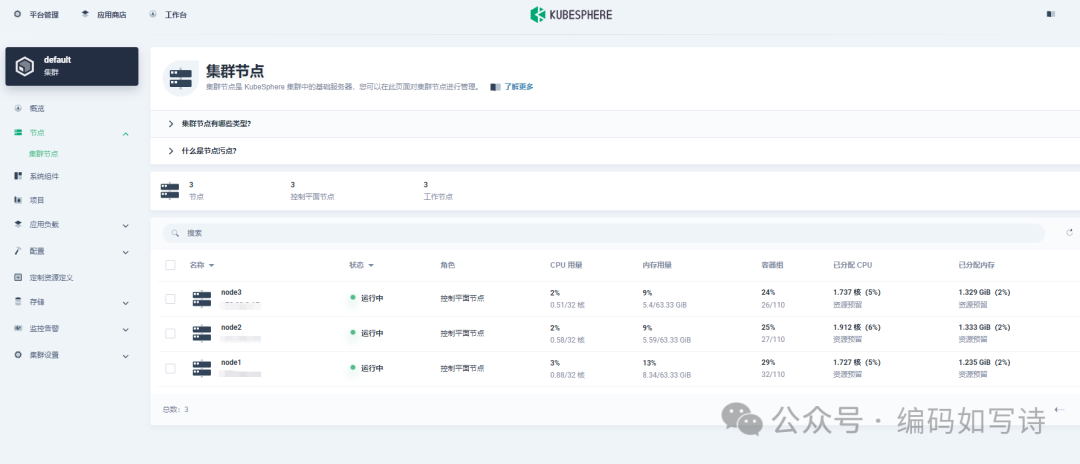

6. Verification

6.1 Verify the cluster status

    [root@node1 ~]# kubectl get nodes
    NAME    STATUS   ROLES                         AGE   VERSION
    node1   Ready    control-plane,master,worker   25h   v1.22.12
    node2   Ready    control-plane,master,worker   25h   v1.22.12
    node3   Ready    control-plane,master,worker   25h   v1.22.12
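A scripted version of this check is handy after an unattended install. The minimal sketch below reads `kubectl get nodes --no-headers` and prints every node whose STATUS is not exactly `Ready`, so `Ready,SchedulingDisabled` is reported too. It returns non-zero if any such node exists. `not_ready_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print "<node> <status>" for every node whose STATUS column is not exactly "Ready".
# Returns 1 if any such node exists; otherwise prints "all nodes Ready".
not_ready_nodes() {
  local bad
  bad="$(kubectl get nodes --no-headers | awk '$2 != "Ready" {print $1 " " $2}')"
  if [ -n "$bad" ]; then
    echo "$bad"
    return 1
  fi
  echo "all nodes Ready"
}
```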

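Check in the web console

7. Summary

This article walked through deploying Kubernetes and KubeSphere online on an ARM Kylin V10 server. It then packaged the base dependencies, the required Docker images, Harbor, and the packages KubeKey downloaded while deploying KubeSphere into one bundle. A simple shell script drives the installation of Kubernetes and KubeSphere in the offline environment.

Key points of the offline installation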

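- Uninstall podman
- Install the Kubernetes dependency packages
- Install Docker
- Install Harbor
- Push the images needed by Kubernetes and KubeSphere to Harbor
- Deploy the cluster with kk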

51工具盒子
